Common k8s Resources (4): the rc Resource


1.Common k8s resource operations

Create a resource

kubectl create -f xxx.yaml

View resources (for example pods or rcs)

kubectl get pod

kubectl get rc

View the details of a resource, including its recent events

kubectl describe pod pod_name

Delete a resource, either by name or through the yaml file it was created from

kubectl delete pod pod_name

kubectl delete -f xxx.yaml

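Edit the live configuration of a resource (this changes the object stored in the cluster, not the local yaml file)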

kubectl edit pod pod_name

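2.The rc resource

An rc (ReplicationController) ensures that a specified number of pods is always running. It associates pods with itself through a label selector.

The scheduler spreads the pods an rc creates across the nodes. If a node fails, the pods on it are not moved. Instead, the rc creates replacement pods on the healthy nodes, so the number of running pods stays at the target. For example, suppose the rc specifies 5 pods. If one pod dies, the rc creates a new one right away. If there is one pod too many, the rc deletes one right away.

When deleting surplus pods, the rc deletes the one that has been running for the shortest time, provided the pods are otherwise equal (for example, all Running and Ready).

The rc manages the pod count through the labels defined in its selector. Any pod whose labels match the selector is treated as one of its own pods and managed, even if the rc did not create it. When there are too few pods, the rc adds some; when there are too many, it deletes some.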
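The counting rule above can be sketched in a few lines of Python. This is a minimal model, not the actual controller code. The names `matches` and `replica_diff` are hypothetical, pods are represented as a plain dict of pod name to labels, and only equality-based selectors (the kind an rc uses) are handled.

```python
def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """Equality-based selector: every key/value pair must appear in the pod's labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def replica_diff(selector: dict[str, str], replicas: int,
                 pods: dict[str, dict[str, str]]) -> int:
    """Return how many pods the rc must create (> 0) or delete (< 0).

    Every pod whose labels match the selector is counted, no matter who created it.
    """
    matched = [name for name, labels in pods.items() if matches(selector, labels)]
    return replicas - len(matched)


selector = {"app": "myweb"}
pods = {f"nginxrc-{i}": {"app": "myweb"} for i in range(5)}
pods["test"] = {"app": "other"}          # hypothetical label: not matched, not counted
print(replica_diff(selector, 5, pods))   # 0: nothing to do
pods["test"] = {"app": "myweb"}          # relabeled to match, as in section 2.4
print(replica_diff(selector, 5, pods))   # -1: one pod must be deleted
```

Because matching only looks at labels, a pod the rc never created is counted as soon as its labels match. Section 2.4 demonstrates exactly this.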

2.1.Run an rc

1)Write the yml file

[root@k8s-master rc]# vim k8s_rc.yml

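apiVersion: v1                  # API version
kind: ReplicationController     # resource type: rc
metadata:                       # metadata of the rc
  name: nginxrc                 # name of the rc
spec:
  replicas: 5                   # number of pods to keep running
  selector:                     # label selector: pods carrying these labels are managed by this rc
    app: myweb
  template:                     # pod template used to create the pods
    metadata:
      labels:
        app: myweb              # pod label, must match the selector
    spec:
      containers:
      - name: myweb             # container name
        image: 192.168.81.240/k8s/nginx:1.15    # image in the private registry at 192.168.81.240
        ports:
        - containerPort: 80     # port the container listens on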
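The pod template's labels must match the rc's selector. The API server rejects the rc otherwise, and an omitted selector defaults to the template labels. Below is a small sketch of that check, applied to the manifest loaded as a Python dict that mirrors k8s_rc.yml. `validate_rc` is a hypothetical helper; real API validation covers far more fields.

```python
def validate_rc(manifest: dict) -> list[str]:
    """Return a list of problems found in an rc manifest (empty list = looks OK)."""
    errors = []
    spec = manifest.get("spec", {})
    labels = spec.get("template", {}).get("metadata", {}).get("labels", {})
    selector = spec.get("selector") or labels   # an omitted selector defaults to the template labels
    replicas = spec.get("replicas", 1)          # replicas defaults to 1
    if manifest.get("kind") != "ReplicationController":
        errors.append("kind must be ReplicationController")
    if not isinstance(replicas, int) or replicas < 0:
        errors.append("spec.replicas must be a non-negative integer")
    if not selector:
        errors.append("no selector and no template labels")
    elif any(labels.get(key) != value for key, value in selector.items()):
        errors.append("spec.selector does not match spec.template.metadata.labels")
    return errors


nginxrc = {
    "apiVersion": "v1",
    "kind": "ReplicationController",
    "metadata": {"name": "nginxrc"},
    "spec": {
        "replicas": 5,
        "selector": {"app": "myweb"},
        "template": {
            "metadata": {"labels": {"app": "myweb"}},
            "spec": {"containers": [{"name": "myweb",
                                     "image": "192.168.81.240/k8s/nginx:1.15",
                                     "ports": [{"containerPort": 80}]}]},
        },
    },
}

print(validate_rc(nginxrc))  # []
```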

2)Create the rc

[root@k8s-master rc]# kubectl create -f k8s_rc.yml

replicationcontroller "nginxrc" created

3)View the rc

[root@k8s-master rc]# kubectl get rc

NAME DESIRED CURRENT READY AGE

nginxrc 5 5 5 16m

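DESIRED: the number of pods the rc should run (replicas)

CURRENT: the number of pods that currently exist

READY: the number of those pods that are ready

4)View the pods that were started; there are 5 in total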

[root@k8s-master rc]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginxrc-5nd7q 1/1 Running 0 23s

nginxrc-f0np6 1/1 Running 0 24s

nginxrc-n7pg4 1/1 Running 0 23s

nginxrc-q0zln 1/1 Running 0 24s

nginxrc-tvzvj 1/1 Running 0 24s
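The DESIRED / CURRENT / READY columns from step 3 can be recomputed from this pod list. The sketch makes two assumptions. First, the plain-text output shows no labels, so this hypothetical helper identifies the rc's pods by the `nginxrc-` name prefix instead of by selector. Second, it treats a pod as ready when it is Running and all of its containers are ready (READY column `n/n`).

```python
def rc_columns(desired: int, listing: str, prefix: str) -> tuple[int, int, int]:
    """Recompute DESIRED / CURRENT / READY from `kubectl get pod` text output."""
    current = ready = 0
    for line in listing.strip().splitlines()[1:]:       # skip the header row
        name, ready_col, status, *_ = line.split()
        if not name.startswith(prefix):
            continue
        current += 1
        up, total = (int(n) for n in ready_col.split("/"))
        if status == "Running" and up == total:
            ready += 1
    return desired, current, ready


LISTING = """
NAME READY STATUS RESTARTS AGE
nginxrc-5nd7q 1/1 Running 0 23s
nginxrc-f0np6 1/1 Running 0 24s
nginxrc-n7pg4 1/1 Running 0 23s
nginxrc-q0zln 1/1 Running 0 24s
nginxrc-tvzvj 1/1 Running 0 24s
"""

print(rc_columns(5, LISTING, "nginxrc-"))  # (5, 5, 5)
```

The prefix includes the dash on purpose: without it, pods of a second rc such as nginxrc2 would also be counted.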

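5)Check whether the pods are spread evenly across the nodes (nginx2 and test are other pods that this rc does not manage)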

[root@k8s-master rc]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx2 1/1 Running 0 2d 172.16.77.2 192.168.81.230

nginxrc-5nd7q 1/1 Running 0 7m 172.16.46.3 192.168.81.220

nginxrc-f0np6 1/1 Running 0 7m 172.16.46.4 192.168.81.220

nginxrc-ldtnz 1/1 Running 0 35s 172.16.77.5 192.168.81.230

nginxrc-n7pg4 1/1 Running 0 7m 172.16.77.3 192.168.81.230

nginxrc-q0zln 1/1 Running 0 7m 172.16.77.4 192.168.81.230

test 2/2 Running 3 51m 172.16.46.2 192.168.81.220
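To count the rc's pods per node instead of eyeballing the NODE column, a small sketch can parse the `-o wide` output above. It again picks the rc's pods by the hypothetical `nginxrc-` name prefix and takes NODE as the last column. "Evenly" here means as evenly as 5 pods on 2 nodes allows. The scheduler's spreading is a scoring preference, not a guarantee.

```python
from collections import Counter

WIDE = """
NAME READY STATUS RESTARTS AGE IP NODE
nginx2 1/1 Running 0 2d 172.16.77.2 192.168.81.230
nginxrc-5nd7q 1/1 Running 0 7m 172.16.46.3 192.168.81.220
nginxrc-f0np6 1/1 Running 0 7m 172.16.46.4 192.168.81.220
nginxrc-ldtnz 1/1 Running 0 35s 172.16.77.5 192.168.81.230
nginxrc-n7pg4 1/1 Running 0 7m 172.16.77.3 192.168.81.230
nginxrc-q0zln 1/1 Running 0 7m 172.16.77.4 192.168.81.230
test 2/2 Running 3 51m 172.16.46.2 192.168.81.220
"""


def pods_per_node(wide_listing: str, prefix: str) -> Counter:
    """Count pods whose name starts with `prefix`, grouped by the NODE column."""
    counts = Counter()
    for line in wide_listing.strip().splitlines()[1:]:   # skip the header row
        columns = line.split()
        if columns[0].startswith(prefix):
            counts[columns[-1]] += 1                      # NODE is the last column
    return counts


print(dict(pods_per_node(WIDE, "nginxrc-")))  # {'192.168.81.220': 2, '192.168.81.230': 3}
```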

2.2.Delete a pod run by the rc

1)Delete it

[root@k8s-master rc]# kubectl delete pod nginxrc-tvzvj

pod "nginxrc-tvzvj" deleted

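2)Check again after the deletion: there are still 5 pods. The rc always keeps 5 running, and nginxrc-ldtnz (age 9s) is the replacement it just created.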

[root@k8s-master rc]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginxrc-5nd7q 1/1 Running 0 6m

nginxrc-f0np6 1/1 Running 0 6m

nginxrc-ldtnz 1/1 Running 0 9s

nginxrc-n7pg4 1/1 Running 0 6m

nginxrc-q0zln 1/1 Running 0 6m

2.3.Automatic recovery when a node fails

1)Simulate a node failure by deleting the node

[root@k8s-master rc]# kubectl delete node 192.168.81.230

node "192.168.81.230" deleted

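2)Check whether the pods were moved

The pods that were on 192.168.81.230 are not literally migrated. They are gone, and the rc creates replacement pods with new names and IPs (nginxrc-27rlw, nginxrc-2scm1, nginxrc-n3q8j) on the remaining node, 192.168.81.220.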

[root@k8s-master rc]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginxrc-27rlw 1/1 Running 0 1m 172.16.46.5 192.168.81.220

nginxrc-2scm1 1/1 Running 0 1m 172.16.46.7 192.168.81.220

nginxrc-5nd7q 1/1 Running 0 8m 172.16.46.3 192.168.81.220

nginxrc-f0np6 1/1 Running 0 8m 172.16.46.4 192.168.81.220

nginxrc-n3q8j 1/1 Running 0 1m 172.16.46.6 192.168.81.220

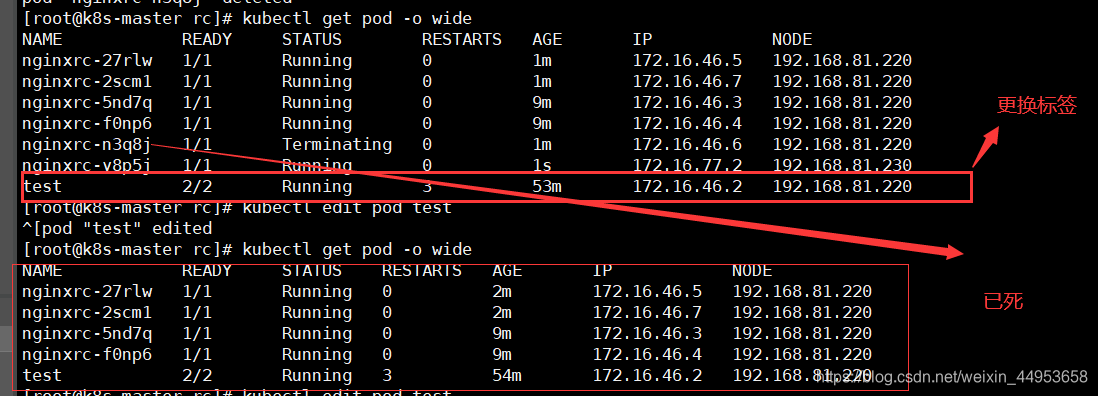

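2.4.Add an extra pod and see which pod gets deleted

1)Change the label of the test pod

The rc only manages pods whose labels match its selector, and it keeps exactly the specified number of them.

Change this pod's label to the same label the nginxrc pods carry (app: myweb).

Modify line 10 in the editor: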

[root@k8s-master rc]# kubectl edit pod test

app: myweb

pod "test" edited

2)Observe

Pods before the label change

[root@k8s-master rc]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginxrc-27rlw 1/1 Running 0 1m 172.16.46.5 192.168.81.220

nginxrc-2scm1 1/1 Running 0 1m 172.16.46.7 192.168.81.220

nginxrc-5nd7q 1/1 Running 0 9m 172.16.46.3 192.168.81.220

nginxrc-f0np6 1/1 Running 0 9m 172.16.46.4 192.168.81.220

nginxrc-v8p5j 1/1 Running 0 1s 172.16.77.2 192.168.81.230

test 2/2 Running 3 53m 172.16.46.2 192.168.81.220

Pods after the label change

[root@k8s-master rc]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginxrc-27rlw 1/1 Running 0 2m 172.16.46.5 192.168.81.220

nginxrc-2scm1 1/1 Running 0 2m 172.16.46.7 192.168.81.220

nginxrc-5nd7q 1/1 Running 0 9m 172.16.46.3 192.168.81.220

nginxrc-f0np6 1/1 Running 0 9m 172.16.46.4 192.168.81.220

test 2/2 Running 3 54m 172.16.46.2 192.168.81.220

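After the label change, test also matches the rc's selector, so there are 6 matching pods, one more than desired. The rc deletes the surplus pod and picks the one that has been running for the shortest time: nginxrc-v8p5j (age 1s).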

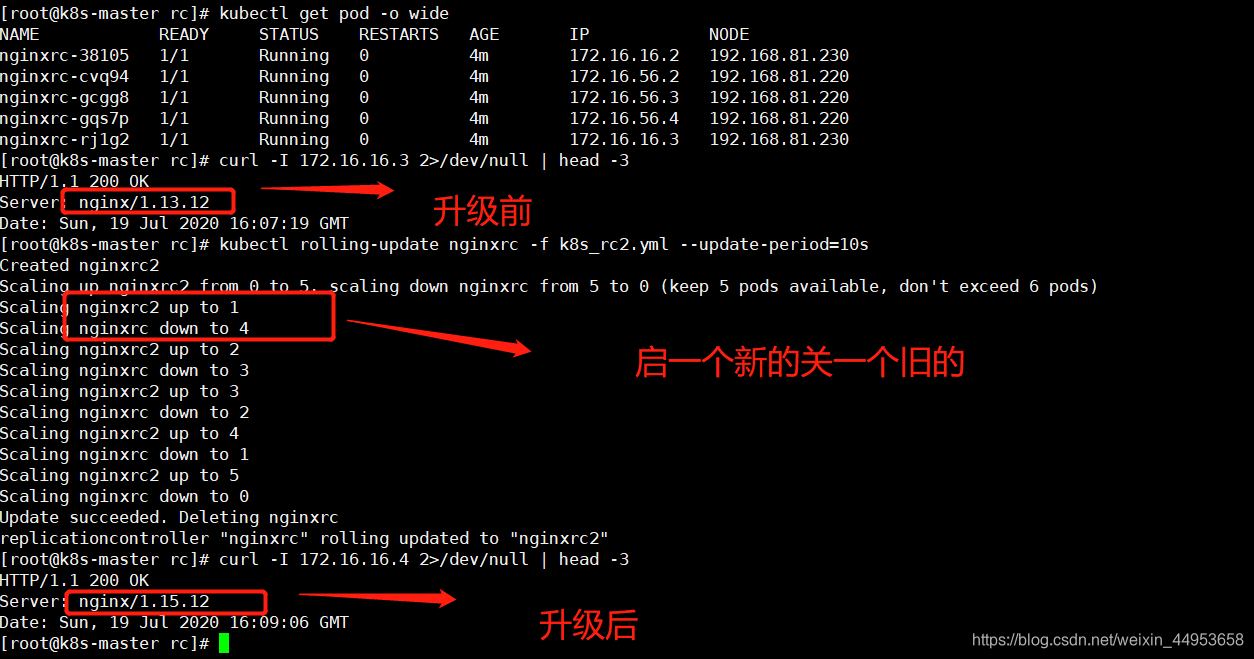
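Below is a sketch of the "youngest pod goes first" choice, using the ages from the listing taken before the label change. As far as I know, the real controller first ranks pods by other criteria (for example, unscheduled, not-running, or not-ready pods are deleted before ready ones). Age only decides when those are equal, and this sketch models only that last step. `age_seconds` and `pick_victims` are hypothetical helpers, and `age_seconds` handles only the single-unit AGE values seen in this post.

```python
import re

_SECONDS = {"s": 1, "m": 60, "h": 3600, "d": 86400}


def age_seconds(age: str) -> int:
    """Convert a single-unit kubectl AGE value such as '1s', '9m' or '2d' to seconds."""
    match = re.fullmatch(r"(\d+)([smhd])", age)
    if match is None:
        raise ValueError(f"unsupported AGE value: {age!r}")
    return int(match.group(1)) * _SECONDS[match.group(2)]


def pick_victims(ages: dict[str, str], surplus: int) -> list[str]:
    """Return the `surplus` youngest pods (shortest AGE first)."""
    return sorted(ages, key=lambda name: age_seconds(ages[name]))[:surplus]


# pods matching app=myweb right after test was relabeled (ages from the "before" listing)
matched = {
    "nginxrc-27rlw": "1m", "nginxrc-2scm1": "1m", "nginxrc-5nd7q": "9m",
    "nginxrc-f0np6": "9m", "nginxrc-v8p5j": "1s", "test": "53m",
}
print(pick_victims(matched, len(matched) - 5))  # ['nginxrc-v8p5j']
```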

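2.5.One-command rolling upgrade and rollback of an rc

A rolling upgrade replaces the pods gradually rather than all at once. The old rc is scaled down one pod at a time while a new rc, built from the new configuration, is scaled up one pod at a time, so the service stays available throughout.

A rollback returns from the new version to the old one. With an rc, it is simply another rolling update in the opposite direction, from the new rc back to the old yaml file.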

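Syntax: kubectl rolling-update nginxrc -f k8s_rc2.yml --update-period=10s

rolling-update: the subcommand used for both upgrading and rolling back

nginxrc: the existing rc to upgrade or roll back

-f: the yaml file that defines the new rc

--update-period=10s: the time to wait between updating pods; the default is 1 minute. Here we use 10s for the upgrade and 1s for the rollback.

Note that kubectl rolling-update works only with ReplicationControllers. It was deprecated in favor of Deployments (kubectl rollout) and has been removed from recent kubectl releases.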

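2.5.1.Upgrade the rc from nginx 1.13 to nginx 1.15

1)Check the pods running the old version

At this point the nginxrc pods run nginx 1.13, as the curl output shows. So for this part of the walkthrough, k8s_rc.yml references a 1.13 image rather than the 1.15 image shown in section 2.1; the rollback in 2.5.2 uses the same file and returns to 1.13. nginxrc2-cdvbf belongs to the new rc nginxrc2, which already exists with one pod. That is why the update below reports "Continuing update with existing controller nginxrc2".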

[root@k8s-master rc]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginxrc-ff9bc 1/1 Running 0 13m 172.16.16.2 192.168.81.230

nginxrc-h36p6 1/1 Running 0 13m 172.16.56.3 192.168.81.220

nginxrc-rqq14 1/1 Running 0 13m 172.16.16.3 192.168.81.230

nginxrc-ttqww 1/1 Running 0 13m 172.16.56.2 192.168.81.220

nginxrc-vqv6t 1/1 Running 0 13m 172.16.56.4 192.168.81.220

nginxrc2-cdvbf 1/1 Running 0 6s 172.16.16.4 192.168.81.230

[root@k8s-master rc]# curl -I 172.16.56.4

HTTP/1.1 200 OK

Server: nginx/1.13.12

2)Upgrade nginx 1.13 to 1.15

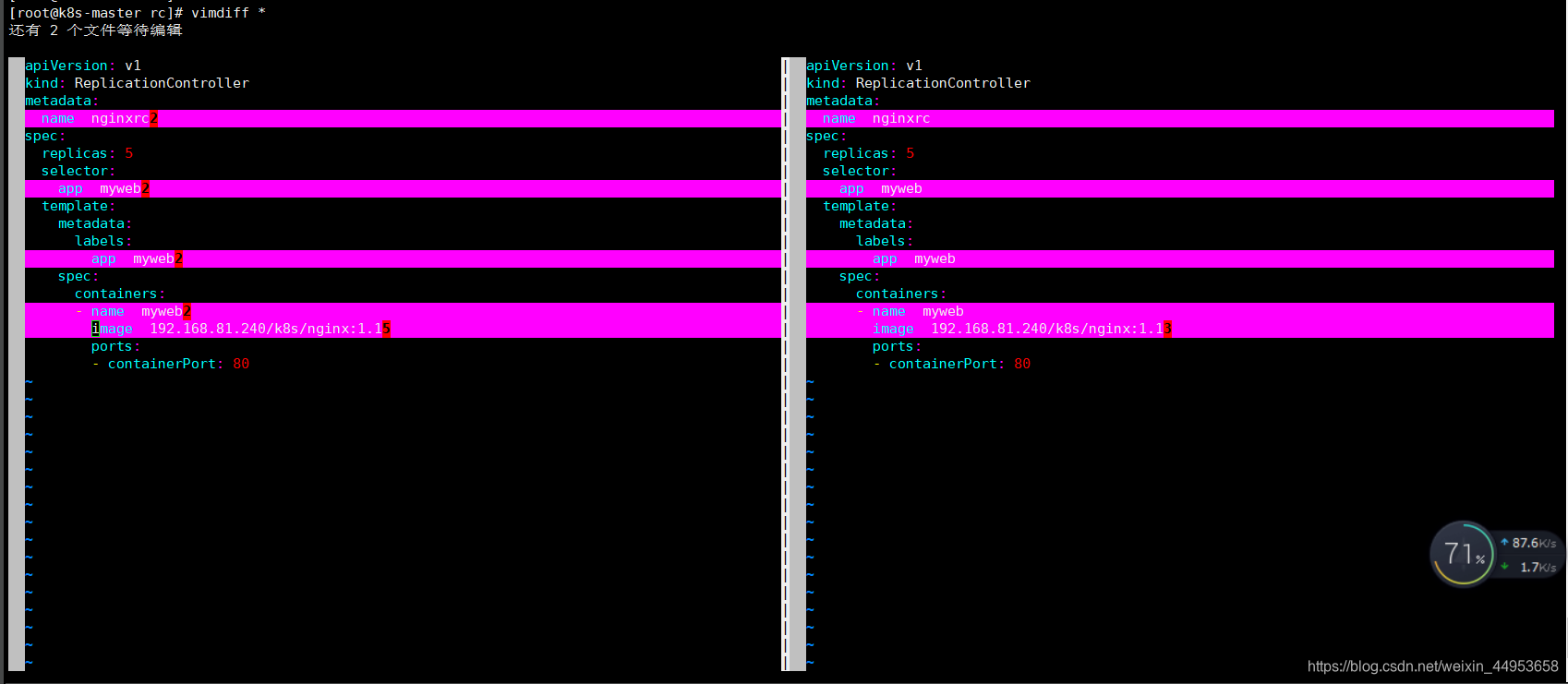

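Write the yaml for version 1.15. Compared with the old rc, the new rc uses a different name (nginxrc2) and a different selector label (app: myweb2).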

[root@k8s-master rc]# vim k8s_rc2.yml

apiVersion: v1
kind: ReplicationController
metadata:
  name: nginxrc2
spec:
  replicas: 5
  selector:
    app: myweb2
  template:
    metadata:
      labels:
        app: myweb2
    spec:
      containers:
      - name: myweb2
        image: 192.168.81.240/k8s/nginx:1.15
        ports:
        - containerPort: 80
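Why does the new file change both the name and the label? As far as I recall, `kubectl rolling-update -f` refuses a new rc that reuses the old rc's name. It also refuses one whose selector lacks a key shared with the old selector but set to a different value, which keeps the two rcs from claiming each other's pods. Below is a hedged sketch of those two checks, with manifests reduced to the relevant fields. `rolling_update_problems` is a hypothetical helper, not part of kubectl.

```python
def rolling_update_problems(old_rc: dict, new_rc: dict) -> list[str]:
    """Check two rc manifests (as dicts) against the two preconditions described above."""
    problems = []
    if new_rc["metadata"]["name"] == old_rc["metadata"]["name"]:
        problems.append("the new rc must not reuse the old rc's name")
    old_sel = old_rc["spec"]["selector"]
    new_sel = new_rc["spec"]["selector"]
    # some selector key must exist in both, with a different value,
    # so that the old and new rcs do not select each other's pods
    if not any(key in old_sel and old_sel[key] != value for key, value in new_sel.items()):
        problems.append("the new selector needs a shared key with a different value")
    return problems


old = {"metadata": {"name": "nginxrc"}, "spec": {"selector": {"app": "myweb"}}}
new = {"metadata": {"name": "nginxrc2"}, "spec": {"selector": {"app": "myweb2"}}}
print(rolling_update_problems(old, new))  # []
```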

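3)Run the rolling upgrade: the old and new rcs are scaled alternately, stopping one old pod and starting one new pod at a time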

[root@k8s-master rc]# kubectl rolling-update nginxrc -f k8s_rc2.yml --update-period=10s

Continuing update with existing controller nginxrc2.

Scaling up nginxrc2 from 1 to 5, scaling down nginxrc from 5 to 0 (keep 5 pods available, don't exceed 6 pods)

Scaling nginxrc down to 4

Scaling nginxrc2 up to 2

Scaling nginxrc down to 3

Scaling nginxrc2 up to 3

Scaling nginxrc down to 2

Scaling nginxrc2 up to 4

Scaling nginxrc down to 1

Scaling nginxrc2 up to 5

Scaling nginxrc down to 0

Update succeeded. Deleting nginxrc

replicationcontroller "nginxrc" rolling updated to "nginxrc2"

4)Check the nginx version
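The scaling sequence in this log can be reproduced with a small model. It assumes every new pod is available as soon as it is created. It uses the two limits printed in the log, "keep 5 pods available" and "don't exceed 6 pods", which amount to a surge of 1 and no unavailable pods. This is a simplified reconstruction, not the code kubectl runs, and `rolling_update_steps` is a hypothetical name.

```python
def rolling_update_steps(old_name: str, new_name: str, old: int, new: int,
                         desired: int, max_surge: int = 1,
                         max_unavailable: int = 0) -> list[str]:
    """Simulate the scale-up/scale-down sequence of an rc rolling update.

    Assumes every new pod is available as soon as it is created.
    """
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    max_total = desired + max_surge            # "don't exceed 6 pods"
    min_available = desired - max_unavailable  # "keep 5 pods available"
    steps = []
    while old > 0 or new < desired:
        # grow the new rc as far as the total-pod ceiling allows
        target_new = min(desired, max_total - old)
        if target_new > new:
            new = target_new
            steps.append(f"Scaling {new_name} up to {new}")
        # shrink the old rc as far as the availability floor allows
        target_old = max(0, min_available - new)
        if target_old < old:
            old = target_old
            steps.append(f"Scaling {old_name} down to {old}")
    return steps


# nginxrc2 already had 1 pod when the update started ("from 1 to 5")
for line in rolling_update_steps("nginxrc", "nginxrc2", old=5, new=1, desired=5):
    print(line)
```

Because the old and new pod counts start at 5 + 1 = 6, the ceiling is already reached. That is why the first step scales the old rc down before the new one can grow. Swapping the two names reproduces the rollback log in 2.5.2.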

[root@k8s-master rc]# curl -I 172.16.56.3

HTTP/1.1 200 OK

Server: nginx/1.15.12

Screenshots of the steps

Comparison of the yaml files before and after the upgrade

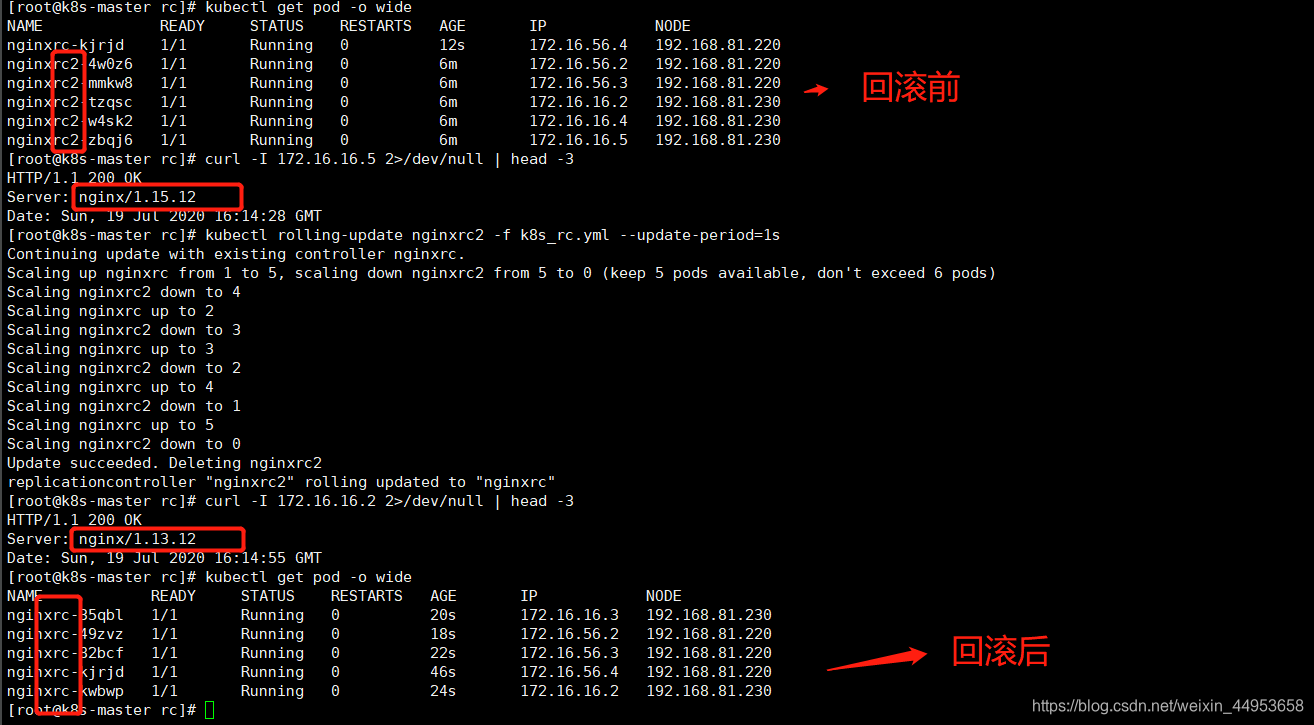

2.5.2.Roll back from nginx 1.15 to nginx 1.13

[root@k8s-master rc]# kubectl rolling-update nginxrc2 -f k8s_rc.yml --update-period=1s

Continuing update with existing controller nginxrc.

Scaling up nginxrc from 1 to 5, scaling down nginxrc2 from 5 to 0 (keep 5 pods available, don't exceed 6 pods)

Scaling nginxrc2 down to 4

Scaling nginxrc up to 2

Scaling nginxrc2 down to 3

Scaling nginxrc up to 3

Scaling nginxrc2 down to 2

Scaling nginxrc up to 4

Scaling nginxrc2 down to 1

Scaling nginxrc up to 5

Scaling nginxrc2 down to 0

Update succeeded. Deleting nginxrc2

replicationcontroller "nginxrc2" rolling updated to "nginxrc"

[root@k8s-master rc]# curl -I 172.16.16.2 2>/dev/null | head -3

HTTP/1.1 200 OK

Server: nginx/1.13.12

Date: Sun, 19 Jul 2020 16:14:55 GMT
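Checking the version by eye works for one or two pods. The small, hypothetical helper below pulls the version out of the `Server:` header, as printed by `curl -I`, and compares it numerically. It assumes the version has not been hidden with nginx's `server_tokens off`.

```python
import re


def nginx_version(headers: str) -> tuple[int, ...]:
    """Extract the nginx version from raw HTTP response headers, e.g. 'Server: nginx/1.15.12'."""
    match = re.search(r"^Server:\s*nginx/(\d+(?:\.\d+)*)", headers,
                      re.MULTILINE | re.IGNORECASE)
    if match is None:
        raise ValueError("no versioned nginx Server header found")
    return tuple(int(part) for part in match.group(1).split("."))


after_upgrade = "HTTP/1.1 200 OK\r\nServer: nginx/1.15.12\r\n"
after_rollback = "HTTP/1.1 200 OK\r\nServer: nginx/1.13.12\r\n"
print(nginx_version(after_upgrade) >= (1, 15))   # True
print(nginx_version(after_rollback) < (1, 15))   # True
```

Comparing tuples of integers avoids the string-comparison trap in which "1.9" would sort after "1.15".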
