Kubernetes Architecture Explained and Deployment Configuration (Part 1)

k8s Basic Overview

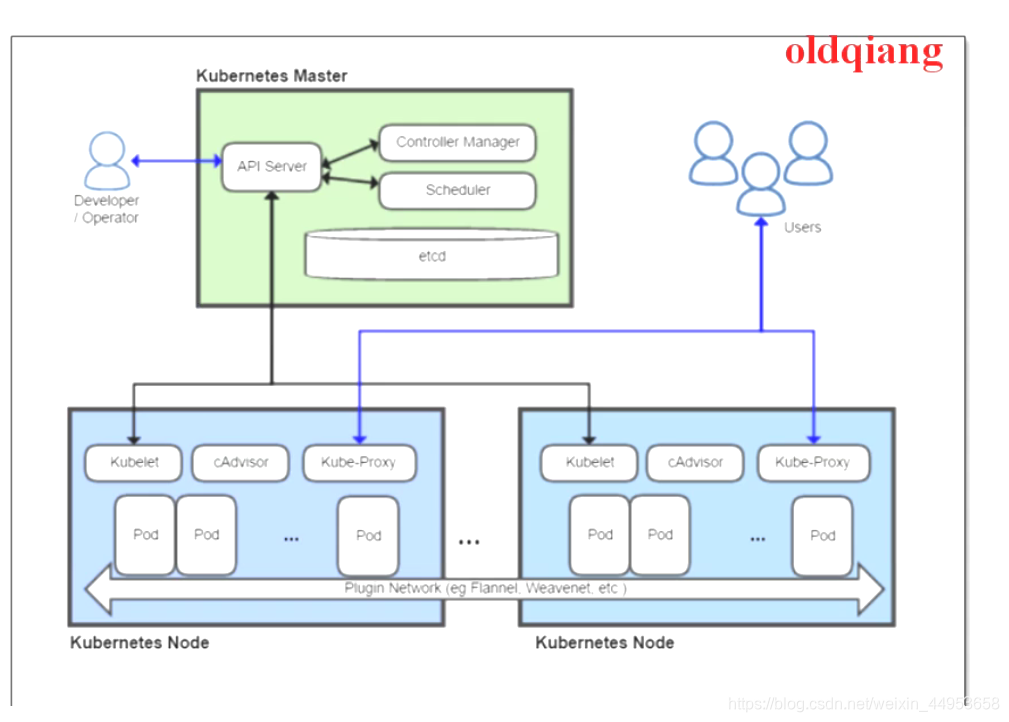

1. k8s architecture and components

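- 1) k8s has two roles: the k8s master and the k8s node. The master is the control node; the nodes are where the actual workloads run.

- 2) k8s stores its data in etcd, a distributed key-value database.

- 3) The services installed on k8s-master are the apiserver, the controller manager, the scheduler and etcd. The core service is the apiserver, which manages the whole k8s cluster; the controller manager keeps the cluster in its desired state, for example by recreating containers when they fail; the scheduler chooses the node on which a new container is created; and etcd stores the cluster data.

- 4) Each k8s node runs kubelet and kube-proxy: kubelet calls docker to run the containers, and kube-proxy handles port mapping (forwarding traffic to the containers).

- 5) When a container needs to be created, k8s works like this: the request goes to the apiserver, and the scheduler picks the node on which the container will be created. Once the container has been assigned, you do not need to start it yourself: the kubelet on that node picks up the assignment from the apiserver and calls docker to start the container.

- 6) When external users access a service running in a container, they do not go through the master. They connect directly to a node, reach the kube-proxy on that node, and are forwarded from there to the container.

- 7) The main job of kube-proxy on a node is port mapping: it maps the port that external clients access to the port inside the container.

- 8) The controller manager continuously checks the state of the containers. When a container goes down, the controller manager has it recreated on another node, so the service stays highly available.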
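The role split described above can be summarised as a small lookup table. The sketch below assumes the systemd unit names used later in this article; the function `k8s_services` is a hypothetical helper, not part of any k8s tooling.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the services each role runs in this article's
# setup (names match the systemd units used in the installation steps).
k8s_services() {
  case "$1" in
    master) echo "etcd kube-apiserver kube-controller-manager kube-scheduler" ;;
    node)   echo "docker kubelet kube-proxy" ;;
    *)      echo "unknown role: $1" >&2; return 1 ;;
  esac
}

# Usage on a live host (not run here): show whether each service is active.
#   for svc in $(k8s_services master); do
#     printf '%-25s %s\n' "$svc" "$(systemctl is-active "$svc")"
#   done
```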

2. Installing the k8s cluster

2.1. Host plan

| IP | Role |
|---|---|
| 192.168.81.210 | k8s-master |
| 192.168.81.220 | k8s-node1 |
| 192.168.81.230 | k8s-node2 |

2.2. Preliminary configuration

Set the hostname on each host

[root@localhost ~]# hostnamectl set-hostname k8s-master

[root@localhost ~]# hostnamectl set-hostname k8s-node1

[root@localhost ~]# hostnamectl set-hostname k8s-node2

Disable firewalld and SELinux

[root@k8s-master ~]# systemctl stop firewalld

[root@k8s-master ~]# systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

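[root@k8s-master ~]# sed -ri 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

On CentOS 7, /etc/sysconfig/selinux is a symlink to /etc/selinux/config, so editing the real file once is enough; the pattern matches only the SELINUX= line and leaves SELINUXTYPE= untouched.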

[root@k8s-master ~]# setenforce 0
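A pattern such as `/^SELINUX/c ...` also matches the `SELINUXTYPE=` line, so it can overwrite that setting too. Below is a minimal sketch of a safer substitution anchored on `SELINUX=`; the function name `disable_selinux_in` is hypothetical, and it takes the file path as an argument so it can be tried on a scratch copy first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set SELINUX=disabled in the given config file.
# The anchored "^SELINUX=" pattern leaves the SELINUXTYPE= line untouched.
disable_selinux_in() {
  local file="$1"
  sed -ri 's/^SELINUX=.*/SELINUX=disabled/' "$file"
}

# Usage: try it on a scratch copy first, then on /etc/selinux/config.
#   cp /etc/selinux/config /tmp/selinux.test
#   disable_selinux_in /tmp/selinux.test && cat /tmp/selinux.test
```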

Add the hosts entries (on all three hosts)

[root@k8s-master ~]# vim /etc/hosts

192.168.81.210 k8s-master

192.168.81.220 k8s-node1

192.168.81.230 k8s-node2
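Because the hosts entries are added on all three machines, an idempotent helper avoids duplicate lines when the step is repeated. This is a sketch under the assumption that each entry is a single "IP NAME" line; `add_host_entry` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" to a hosts file only if NAME is not
# already present, so the step can be re-run safely on every machine.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  # Match NAME as a whole word: preceded by whitespace, followed by
  # whitespace or end of line (so k8s-node1 does not match k8s-node10).
  if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Usage on each host (as root):
#   add_host_entry /etc/hosts 192.168.81.210 k8s-master
#   add_host_entry /etc/hosts 192.168.81.220 k8s-node1
#   add_host_entry /etc/hosts 192.168.81.230 k8s-node2
```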

Configure the yum repositories (on all three hosts)

[root@k8s-master ~]# rm -rf /etc/yum.repos.d/*

[root@k8s-master ~]# curl -o /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

[root@k8s-master ~]# curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

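2.3. Install docker 1.12.6

Install on all three hosts

With this k8s version, docker 1.13 (the latest release at the time) was not stable enough, so docker 1.12 is used instead

The docker 1.12 packages can be found at http://vault.centos.org/7.4.1708/extras/x86_64/Packages/

1) Download the packages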

wget http://vault.centos.org/7.4.1708/extras/x86_64/Packages/docker-client-1.12.6-71.git3e8e77d.el7.centos.x86_64.rpm

wget http://vault.centos.org/7.4.1708/extras/x86_64/Packages/docker-common-1.12.6-71.git3e8e77d.el7.centos.x86_64.rpm

wget http://vault.centos.org/7.4.1708/extras/x86_64/Packages/docker-1.12.6-71.git3e8e77d.el7.centos.x86_64.rpm
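The three wget commands differ only in the package name, so they can be generated from one version string. A minimal sketch; `docker_rpm_urls`, `DOCKER_BASE_URL` and `DOCKER_RPM_VERSION` are hypothetical names, with values copied from the commands above. Passing all three RPMs to a single `yum localinstall` also lets yum work out the install order itself.

```shell
#!/usr/bin/env bash
# Base URL and version string copied from the wget commands above.
DOCKER_BASE_URL="http://vault.centos.org/7.4.1708/extras/x86_64/Packages"
DOCKER_RPM_VERSION="1.12.6-71.git3e8e77d.el7.centos.x86_64"

# Hypothetical helper: print the three package URLs, one per line.
docker_rpm_urls() {
  local pkg
  for pkg in docker-common docker-client docker; do
    echo "${DOCKER_BASE_URL}/${pkg}-${DOCKER_RPM_VERSION}.rpm"
  done
}

# Usage on each host (needs network access):
#   docker_rpm_urls | xargs -n 1 wget
#   yum -y localinstall ./docker-*.rpm
```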

2) Install the packages

k8s-master

[root@k8s-master soft]# yum -y localinstall docker-common-1.12.6-71.git3e8e77d.el7.centos.x86_64.rpm

[root@k8s-master soft]# yum -y localinstall docker-client-1.12.6-71.git3e8e77d.el7.centos.x86_64.rpm

[root@k8s-master soft]# yum -y localinstall docker-1.12.6-71.git3e8e77d.el7.centos.x86_64.rpm

k8s-node1

[root@k8s-node1 soft]# yum -y localinstall docker-common-1.12.6-71.git3e8e77d.el7.centos.x86_64.rpm

[root@k8s-node1 soft]# yum -y localinstall docker-client-1.12.6-71.git3e8e77d.el7.centos.x86_64.rpm

[root@k8s-node1 soft]# yum -y localinstall docker-1.12.6-71.git3e8e77d.el7.centos.x86_64.rpm

k8s-node2

[root@k8s-node2 soft]# yum -y localinstall docker-common-1.12.6-71.git3e8e77d.el7.centos.x86_64.rpm

[root@k8s-node2 soft]# yum -y localinstall docker-client-1.12.6-71.git3e8e77d.el7.centos.x86_64.rpm

[root@k8s-node2 soft]# yum -y localinstall docker-1.12.6-71.git3e8e77d.el7.centos.x86_64.rpm

2.4. Install etcd

Install on k8s-master only

1. Install the etcd database

[root@k8s-master ~]# yum -y install etcd

2. Configure etcd

[root@k8s-master ~]# vim /etc/etcd/etcd.conf

ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.81.210:2379"
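The two etcd settings can also be applied without an editor, which helps when the change is scripted. A sketch assuming etcd.conf uses shell-style `KEY="VALUE"` lines, possibly commented out with a leading `#`; `set_conf_value` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set KEY="VALUE" in a shell-style config file by
# replacing (and uncommenting) an existing KEY= line, or appending one.
# Assumes VALUE contains no '|' or '&' (both are special in this sed command).
set_conf_value() {
  local file="$1" key="$2" value="$3"
  if grep -q "^#\?${key}=" "$file"; then
    sed -i "s|^#\?${key}=.*|${key}=\"${value}\"|" "$file"
  else
    printf '%s="%s"\n' "$key" "$value" >> "$file"
  fi
}

# Usage on k8s-master (as root):
#   set_conf_value /etc/etcd/etcd.conf ETCD_LISTEN_CLIENT_URLS "http://0.0.0.0:2379"
#   set_conf_value /etc/etcd/etcd.conf ETCD_ADVERTISE_CLIENT_URLS "http://192.168.81.210:2379"
```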

3. Start etcd

[root@k8s-master ~]# systemctl restart etcd

[root@k8s-master ~]# systemctl enable etcd

Created symlink from /etc/systemd/system/multi-user.target.wants/etcd.service to /usr/lib/systemd/system/etcd.service.

4. Set a test key

[root@k8s-master ~]# etcdctl set testdir/testkey01 jiangxl

jiangxl

[root@k8s-master ~]# etcdctl get testdir/testkey01

jiangxl

[root@k8s-master ~]# etcdctl ls

/testdir

5. Check etcd health

[root@k8s-master ~]# etcdctl -C http://192.168.81.210:2379 cluster-health

member 8e9e05c52164694d is healthy: got healthy result from http://192.168.81.210:2379

cluster is healthy

2.5. Install the master

The main services on the master node are kube-apiserver, kube-controller-manager and kube-scheduler

1. Install the kubernetes-master package

[root@k8s-master ~]# yum install kubernetes-master -y

2. Configure the apiserver file

[root@k8s-master ~]# vim /etc/kubernetes/apiserver

# Address the apiserver listens on

KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"

# Port the apiserver listens on

KUBE_API_PORT="--port=8080"

# Port used to talk to the kubelet on each node

KUBELET_PORT="--kubelet-port=10250"

# Address of the etcd service

KUBE_ETCD_SERVERS="--etcd-servers=http://192.168.81.210:2379"

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"
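Since the master IP appears in the apiserver settings, one option is to render them from a single variable and merge the result into the file by hand (the packaged file contains other settings that should be kept). A sketch only; `render_apiserver_conf` is a hypothetical name and the values are the ones listed above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the apiserver settings used in this article
# for a given master IP, so the IP is typed only once.
render_apiserver_conf() {
  local master_ip="$1"
  cat <<EOF
KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"
KUBE_API_PORT="--port=8080"
KUBELET_PORT="--kubelet-port=10250"
KUBE_ETCD_SERVERS="--etcd-servers=http://${master_ip}:2379"
KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"
EOF
}

# Usage: review the output, then merge it into /etc/kubernetes/apiserver.
#   render_apiserver_conf 192.168.81.210
```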

3. Configure the config file

This file must be edited on the master and on both nodes

[root@k8s-master ~]# vim /etc/kubernetes/config

# IP and port of the server where the master runs

KUBE_MASTER="--master=http://192.168.81.210:8080"

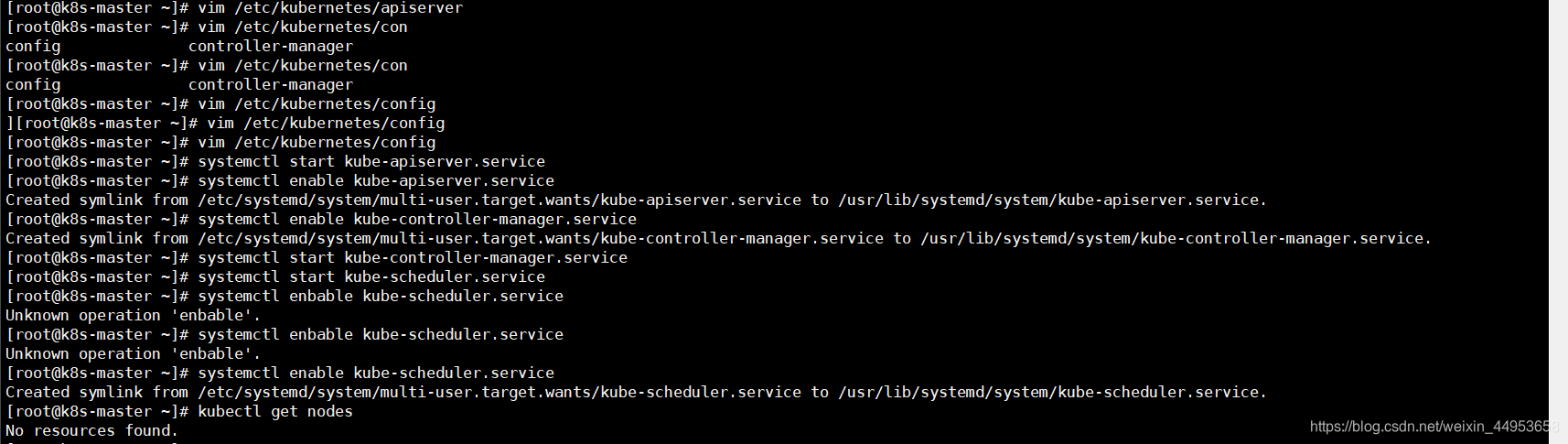

4. Start kube-apiserver, kube-controller-manager and kube-scheduler

[root@k8s-master ~]# systemctl start kube-apiserver.service

[root@k8s-master ~]# systemctl enable kube-apiserver.service

[root@k8s-master ~]# systemctl start kube-controller-manager.service

[root@k8s-master ~]# systemctl enable kube-controller-manager.service

[root@k8s-master ~]# systemctl start kube-scheduler.service

[root@k8s-master ~]# systemctl enable kube-scheduler.service
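Each service needs a start and an enable call, which can be wrapped in a loop. A minimal sketch; `start_and_enable` and the `SYSTEMCTL` override (useful for a dry run with `SYSTEMCTL=echo`) are hypothetical, not standard tooling.

```shell
#!/usr/bin/env bash
# Hypothetical helper: start and enable each named unit, stopping at the
# first failure. SYSTEMCTL can be overridden (e.g. SYSTEMCTL=echo) for a
# dry run that only prints the commands.
start_and_enable() {
  local svc
  for svc in "$@"; do
    ${SYSTEMCTL:-systemctl} start "${svc}.service" || return 1
    ${SYSTEMCTL:-systemctl} enable "${svc}.service" || return 1
  done
}

# Usage on k8s-master:
#   start_and_enable kube-apiserver kube-controller-manager kube-scheduler
# and on each node:
#   start_and_enable kubelet kube-proxy
```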

5. Verify the k8s master

[root@k8s-master ~]# kubectl get nodes

No resources found.

If this output appears, the k8s master installation is complete

2.6. Install the nodes

The main services on a node are kubelet and kube-proxy

2.6.1. node1 configuration

1. Install kubernetes-node

[root@k8s-node1 ~]# yum -y install kubernetes-node

2. Configure the config file

[root@k8s-node1 ~]# vim /etc/kubernetes/config

# Address of the master's apiserver

KUBE_MASTER="--master=http://192.168.81.210:8080"

3. Configure the kubelet file

[root@k8s-node1 ~]# vim /etc/kubernetes/kubelet

# Address kubelet listens on

KUBELET_ADDRESS="--address=0.0.0.0"

# Port kubelet listens on

KUBELET_PORT="--port=10250"

# Name of this node in the cluster

KUBELET_HOSTNAME="--hostname-override=192.168.81.220"

# Address of the master's apiserver

KUBELET_API_SERVER="--api-servers=http://192.168.81.210:8080"
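The kubelet file differs between nodes only in `--hostname-override`, so the same settings can be rendered per node. A sketch assuming the values shown above; `render_kubelet_conf` is a hypothetical helper, and its output is meant to be merged into /etc/kubernetes/kubelet rather than replace the whole file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the kubelet settings for one node.
# Only --hostname-override changes between node1 and node2.
render_kubelet_conf() {
  local node_ip="$1" master_ip="$2"
  cat <<EOF
KUBELET_ADDRESS="--address=0.0.0.0"
KUBELET_PORT="--port=10250"
KUBELET_HOSTNAME="--hostname-override=${node_ip}"
KUBELET_API_SERVER="--api-servers=http://${master_ip}:8080"
EOF
}

# Usage: review, then merge into /etc/kubernetes/kubelet on each node.
#   render_kubelet_conf 192.168.81.220 192.168.81.210   # on k8s-node1
#   render_kubelet_conf 192.168.81.230 192.168.81.210   # on k8s-node2
```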

4. Start the kubelet and kube-proxy services

[root@k8s-node1 ~]# systemctl start kubelet.service

[root@k8s-node1 ~]# systemctl enable kubelet.service

[root@k8s-node1 ~]# systemctl start kube-proxy.service

[root@k8s-node1 ~]# systemctl enable kube-proxy.service

5. Check node1 from the master

[root@k8s-master ~]# kubectl get node

NAME STATUS AGE

192.168.81.220 Ready 11s

2.6.2. node2 configuration

1. Install kubernetes-node

[root@k8s-node2 ~]# yum -y install kubernetes-node

2. Configure the config file

[root@k8s-node2 ~]# vim /etc/kubernetes/config

# Address of the master's apiserver

KUBE_MASTER="--master=http://192.168.81.210:8080"

3. Configure the kubelet file

[root@k8s-node2 ~]# vim /etc/kubernetes/kubelet

# Address kubelet listens on

KUBELET_ADDRESS="--address=0.0.0.0"

# Port kubelet listens on

KUBELET_PORT="--port=10250"

# Name of this node in the cluster

KUBELET_HOSTNAME="--hostname-override=192.168.81.230"

# Address of the master's apiserver

KUBELET_API_SERVER="--api-servers=http://192.168.81.210:8080"

4. Start the kubelet and kube-proxy services

[root@k8s-node2 ~]# systemctl start kubelet.service

[root@k8s-node2 ~]# systemctl enable kubelet.service

[root@k8s-node2 ~]# systemctl start kube-proxy.service

[root@k8s-node2 ~]# systemctl enable kube-proxy.service

5. Check both nodes from the master

[root@k8s-master ~]# kubectl get node

NAME STATUS AGE

192.168.81.220 Ready 29m

192.168.81.230 Ready 3m
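To check the result in a script instead of by eye, the `kubectl get node` output can be filtered on the STATUS column. A sketch that assumes the three-column layout shown above (NAME, STATUS, AGE); `count_ready_nodes` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "kubectl get node" output on stdin and print
# how many nodes have STATUS exactly "Ready" (the header line is skipped).
count_ready_nodes() {
  awk 'NR > 1 && $2 == "Ready" { n++ } END { print n + 0 }'
}

# Usage on k8s-master:
#   kubectl get node | count_ready_nodes    # 2 expected for this cluster
```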

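2.7. Configure the flannel network

Every node needs the flannel network, because containers have to communicate across hosts. Earlier we covered Docker's macvlan and overlay networks; this time we look at flannel.

2.7.1. Install and configure flannel on all nodes

[root@k8s-master ~]# yum -y install flannel

[root@k8s-master ~]# vim /etc/sysconfig/flanneld

# Address of the etcd server

FLANNEL_ETCD_ENDPOINTS="http://192.168.81.210:2379"

# etcd key prefix (directory) under which flannel reads its network configuration

FLANNEL_ETCD_PREFIX="/atomic.io/network"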

2.7.2. Master node configuration

1. Create the etcd key (its path is FLANNEL_ETCD_PREFIX followed by /config)

[root@k8s-master ~]# etcdctl mk /atomic.io/network/config '{ "Network": "172.16.0.0/16" }'

{ "Network": "172.16.0.0/16" }

2. Start flanneld and restart the k8s services

[root@k8s-master ~]# systemctl start flanneld

[root@k8s-master ~]# systemctl enable flanneld

[root@k8s-master ~]# systemctl restart kube-apiserver

[root@k8s-master ~]# systemctl restart kube-controller-manager

[root@k8s-master ~]# systemctl restart kube-scheduler

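2.7.3. Node configuration

The configuration is the same on every node

Start flanneld and restart the services (docker is restarted so that its containers get addresses from the flannel subnet)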

[root@k8s-node1 ~]# systemctl start flanneld

[root@k8s-node1 ~]# systemctl enable flanneld

[root@k8s-node1 ~]# systemctl restart docker

[root@k8s-node1 ~]# systemctl restart kubelet

[root@k8s-node1 ~]# systemctl restart kube-proxy
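After flanneld starts, it records the subnet leased to the host in `/run/flannel/subnet.env` (variables such as `FLANNEL_NETWORK`, `FLANNEL_SUBNET` and `FLANNEL_MTU`). Reading that file on each node is a quick way to confirm that every host got a different subnet. A sketch; the helper name `flannel_subnet` is hypothetical, and the file path is a parameter so it can be tested on a copy.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print FLANNEL_SUBNET from a flannel subnet.env file.
# The file is sourced in a subshell so its variables do not leak out.
flannel_subnet() {
  local file="${1:-/run/flannel/subnet.env}"
  ( . "$file" && echo "$FLANNEL_SUBNET" )
}

# Usage on each node after flanneld has started:
#   flannel_subnet    # a 172.16.x.1/24 style value, x differing per host
```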

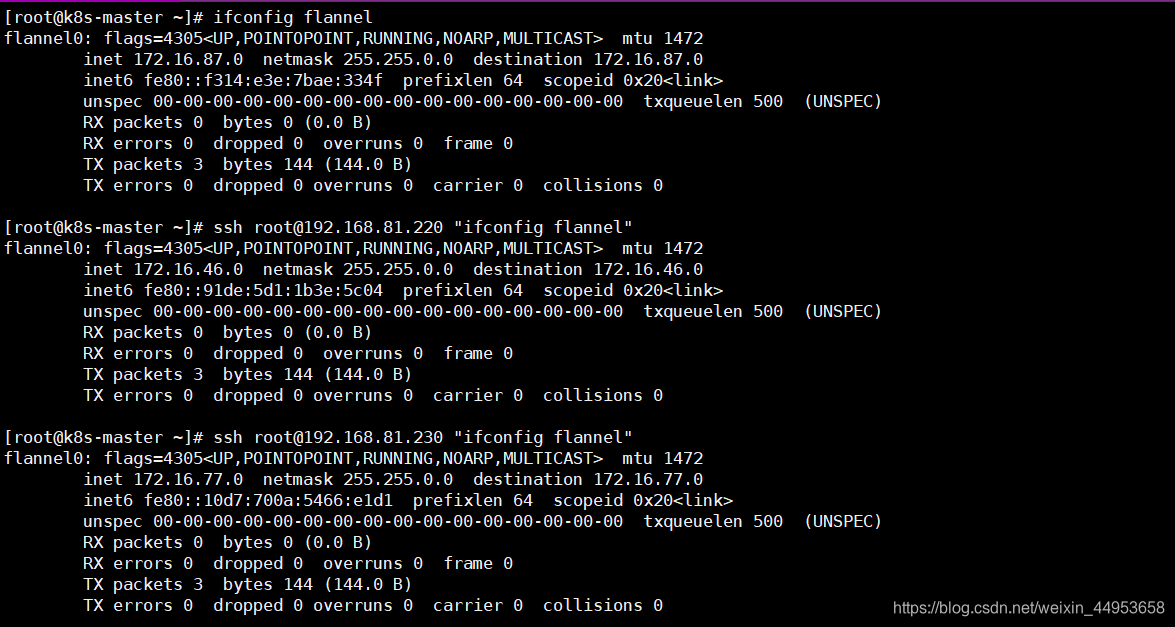

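2.7.4. The flannel network

After flannel is installed, every machine has an additional network interface named flannel0

The third octet of the flannel0 address differs from machine to machine: flannel hands each host its own /24 subnet (its default subnet length) carved out of the 172.16.0.0/16 network stored in etcd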
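The arithmetic behind this: with a /16 network and /24 per-host subnets, 24 - 16 = 8 bits are left for the subnet number, giving 2^8 = 256 possible subnets, and those 8 bits are exactly the third octet. A small sketch, assuming flannel's default SubnetLen of 24; `flannel_subnet_count` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: number of per-host subnets that fit in the flannel
# network. Assumes a subnet length of 24 unless a second argument is given.
flannel_subnet_count() {
  local network_prefix="$1" subnet_len="${2:-24}"
  echo $(( 1 << (subnet_len - network_prefix) ))
}

# 172.16.0.0/16 split into /24s: 2^(24-16) = 256 possible host subnets,
# which differ only in the third octet.
#   flannel_subnet_count 16     # prints 256
```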

2.7.5. Test cross-host communication over flannel

1) Pull the busybox image on all nodes

[root@k8s-master ~]# docker pull busybox

Using default tag: latest

Trying to pull repository docker.io/library/busybox ...

latest: Pulling from docker.io/library/busybox

91f30d776fb2: Pull complete

Digest: sha256:9ddee63a712cea977267342e8750ecbc60d3aab25f04ceacfa795e6fce341793

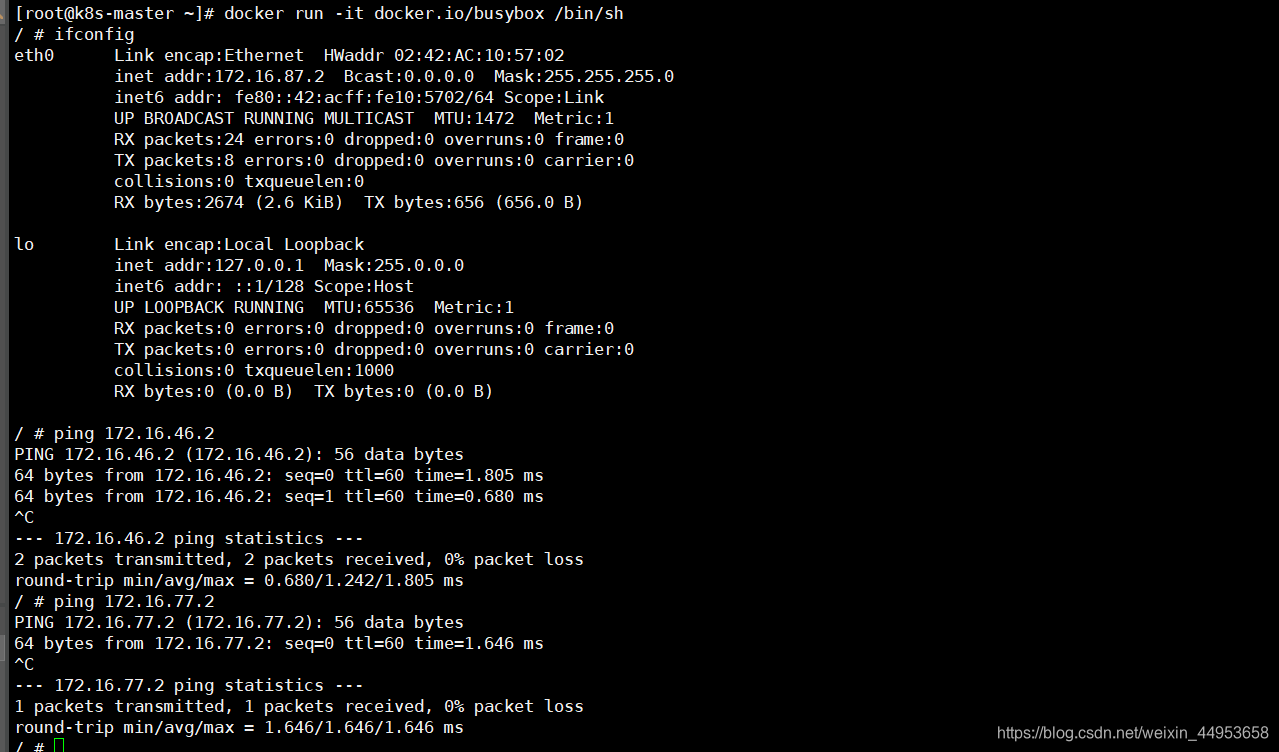

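2) Start a container on each node and test the network: check each container's IP with ip addr, then ping the containers on the other hosts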

[root@k8s-master ~]# docker run -it docker.io/busybox /bin/sh

[root@k8s-node1 ~]# docker run -it docker.io/busybox /bin/sh

[root@k8s-node2 ~]# docker run -it docker.io/busybox /bin/sh

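2.8. Configure the master as a registry (image repository)

Without an image repository, every time we need a container we have to pull its image from the official registry, which is tedious. So we set up a repository and keep all our images in it.

2.8.1. Master configuration

1) Edit the docker configuration file to add a registry mirror and the address of the private registry

[root@k8s-master ~]# vim /etc/sysconfig/docker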

OPTIONS='--selinux-enabled --log-driver=journald --signature-verification=false --registry-mirror=https://registry.docker-cn.com --insecure-registry=192.168.81.210:5000'

2) Restart docker

[root@k8s-master ~]# systemctl restart docker

3) Run the registry container

[root@k8s-master ~]# mkdir /data/myregistry -p

[root@k8s-master ~]# docker run -d -p 5000:5000 --restart=always --name registry -v /data/myregistry/:/var/lib/registry registry

2.8.2. Node configuration

1) Pull the nginx 1.13 and 1.15 images

[root@k8s-master ~]# docker pull nginx:1.13

[root@k8s-master ~]# docker pull nginx:1.15

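2) On each node, add the same --insecure-registry=192.168.81.210:5000 option to /etc/sysconfig/docker, then restart docker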

[root@k8s-node1 ~]# systemctl restart docker

[root@k8s-node2 ~]# systemctl restart docker

3) Tag the image

[root@k8s-node1 ~]# docker tag docker.io/nginx:1.15 192.168.81.210:5000/nginx:1.15

4) Push the image

[root@k8s-node1 ~]# docker push 192.168.81.210:5000/nginx:1.15
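Image references for a private registry follow the pattern `registry/[project/]name:tag`; harbor additionally expects a project such as `k8s`. A minimal sketch that builds the reference; `registry_ref` is a hypothetical helper. The usage lines assume docker on that host already trusts the registry via `--insecure-registry`; the registry's `/v2/_catalog` endpoint lists the repositories that have been pushed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build "registry/[project/]image:tag" for docker
# tag/push. PROJECT is optional (plain registry) and needed for harbor.
registry_ref() {
  local registry="$1" image="$2" tag="$3" project="${4:-}"
  if [ -n "$project" ]; then
    echo "${registry}/${project}/${image}:${tag}"
  else
    echo "${registry}/${image}:${tag}"
  fi
}

# Usage on a host whose docker trusts the registry:
#   ref=$(registry_ref 192.168.81.210:5000 nginx 1.15)
#   docker tag docker.io/nginx:1.15 "$ref" && docker push "$ref"
#   curl http://192.168.81.210:5000/v2/_catalog
```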

2.9. Replacing registry with harbor

Install harbor

docker and docker-compose must be installed before harbor

[root@docker03 ~]# tar xf harbor-offline-installer-v1.5.1.tgz

[root@docker03 ~]# cd harbor/

Edit the harbor configuration file; only the IP and the admin password need to be changed

[root@docker03 harbor]# vim harbor.cfg

hostname = 192.168.81.240

harbor_admin_password = admin

[root@docker03 harbor]# ./install.sh

Open http://192.168.81.240/harbor/projects in a browser

Edit the docker configuration file so that docker trusts the harbor host

[root@k8s-master ~]# vim /etc/sysconfig/docker

OPTIONS='--selinux-enabled --log-driver=journald --signature-verification=false --registry-mirror=https://registry.docker-cn.com --insecure-registry=192.168.81.240'

[root@k8s-master ~]# systemctl restart docker

Push the image

[root@k8s-master ~]# docker tag docker.io/nginx:1.15 192.168.81.240/nginx:1.15

[root@k8s-master ~]# docker login 192.168.81.240

Username: admin

Password:

Login Succeeded

[root@k8s-master ~]# docker tag docker.io/nginx:1.15 192.168.81.240/k8s/nginx:1.15

[root@k8s-master ~]# docker push 192.168.81.240/k8s/nginx:1.15

The push refers to a repository [192.168.81.240/k8s/nginx]

332fa54c5886: Pushed

6ba094226eea: Pushed

6270adb5794c: Pushed

1.15: digest: sha256:e770165fef9e36b990882a4083d8ccf5e29e469a8609bb6b2e3b47d9510e2c8d size: 948
