Building a highly available Kubernetes 1.19.6 cluster with sealos and installing a highly available Rancher with Helm

Deploying highly available k8s 1.19 with sealos

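Contents

- Deploying highly available k8s 1.19 with sealos
  - 1. Environment preparation
  - 2. Deploying an HA k8s 1.19 cluster with sealos
    - 2.1. Environment preparation
    - 2.2. Preparing the k8s 1.19 offline package
    - 2.3. Deploying the HA k8s cluster
    - 2.4. Viewing cluster node information
  - 3. Basic sealos operations
    - 3.1. Creating an HA cluster
    - 3.2. Adding master nodes to an existing cluster
    - 3.3. Adding worker nodes to an existing cluster
    - 3.4. Removing specific master nodes
    - 3.5. Removing specific worker nodes
    - 3.6. Cleaning up the cluster
    - 3.7. Backing up the cluster
  - 4. Deploying the Harbor image registry
  - 5. Deploying ingress-nginx
  - 6. Deploying Rancher with a self-signed certificate on k8s using Helm
    - 6.1. Preparing the tools
    - 6.2. Generating the self-signed certificate
    - 6.3. Creating the namespace for rancher
    - 6.4. Adding the helm repository
    - 6.5. Creating the certificate secrets required by rancher and ingress
    - 6.6. Creating HA rancher with helm
    - 6.7. Viewing the rancher pods running in k8s
    - 6.9. Viewing the status of all rancher resources
    - 6.10. Accessing rancher
    - 6.11. Fixing the rancher dashboard showing red
  - 5. Errors encountered when deploying an HA k8s cluster with sealos
    - 5.1. Fixing the kubelet startup error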

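sealos aims to be a simple, clean, lightweight and stable Kubernetes installer with solid support for highly available setups.

A single sealos command is enough to bring up an HA k8s cluster.

sealos features and advantages:

- Supports offline installation; the tool and the resource package (binaries, config files, images, yaml files, etc.) are separate, so switching versions only means swapping the offline package

- Certificates valid for a hundred years

- Simple to use

- Supports custom configuration

- Kernel-level load balancing, very stable; because it is simple, troubleshooting is simple too

- No dependency on ansible, haproxy or keepalived; a single binary with zero dependencies

- Resource packages are hosted on Alibaba Cloud OSS, so download speed is no longer a worry

- Apps such as dashboard, ingress and prometheus are also packaged offline and install with one command

- One-command etcd backup (via the native etcd API), with optional upload to OSS for off-site backup; users need not care about the details

When `sealos clean --all` tears down a cluster, only the components installed by sealos are removed automatically; anything else stays on the system.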

1. Environment preparation

| IP | Hostname |
|---|---|
| 192.168.16.106 | k8s-master1 |
| 192.168.16.105 | k8s-master2 |
| 192.168.16.107 | k8s-node1 |
| 192.168.16.104 | k8s-node2 |

2. Deploying an HA k8s 1.19 cluster with sealos

2.1. Environment preparation

1. Set the hostname (run the matching command on each host)

hostnamectl set-hostname k8s-master1

hostnamectl set-hostname k8s-master2

hostnamectl set-hostname k8s-node1

hostnamectl set-hostname k8s-node2

2. Disable SELinux and the firewall

setenforce 0

sed -ri '/^SELINUX=/c SELINUX=disabled' /etc/sysconfig/selinux

sed -ri '/^SELINUX=/c SELINUX=disabled' /etc/selinux/config

systemctl stop firewalld

systemctl disable firewalld
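The sed commands above rewrite the `SELINUX=` line in place. The following is a minimal sketch of the same edit wrapped in a function, so it can be tried on a copy of the file before touching the real one. The function name `disable_selinux_in` is hypothetical and GNU sed is assumed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set SELINUX=disabled in the given config file.
# Assumes GNU sed (the -r and -i options and the one-line "c" command).
disable_selinux_in() {
    local cfg="$1"
    [ -f "$cfg" ] || { echo "no such file: $cfg" >&2; return 1; }
    # Replace the whole line that starts with SELINUX= ;
    # SELINUXTYPE= is left alone because the pattern requires "=" right after SELINUX.
    sed -ri '/^SELINUX=/c SELINUX=disabled' "$cfg"
}

# Example (as root, on every host):
#   disable_selinux_in /etc/selinux/config
```

The change in the file only takes effect after a reboot, which is why the article also runs `setenforce 0` for the current session.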

2.2. Preparing the k8s 1.19 offline package

1. Get the packages from the sealos website

[root@k8s-master1 ~/soft]# ll

总用量 507472

-rw-r--r-- 1 root root 475325715 4月 27 15:21 kube1.19.6.tar.gz

-rw-r--r-- 1 root root 44322816 4月 29 13:47 sealos

2. Copy kube1.19.6.tar.gz to all k8s nodes

[root@k8s-master1 ~/soft]# for i in {4,5,7}

do

scp -r /root/soft root@192.168.16.10${i}:/root

done

3. Install sealos

[root@k8s-master1 ~/soft]# chmod +x sealos && mv sealos /usr/bin

[root@k8s-master1 ~/soft]# sealos version

Version: 3.3.9-rc.3

Last Commit: 4db4953

Build Date: 2021-04-10T11:25:04Z

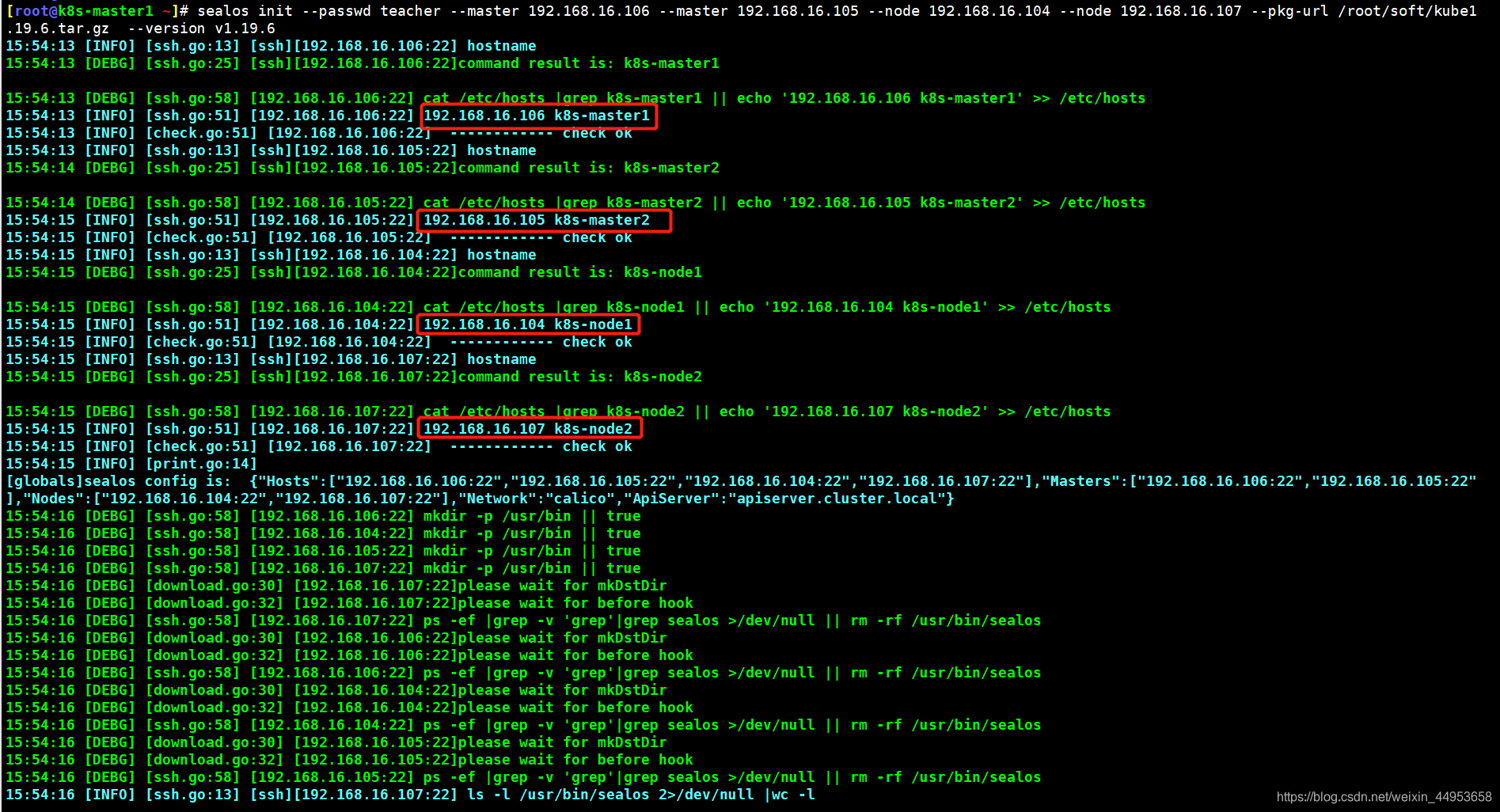

2.3. Deploying the HA k8s cluster

[root@k8s-master1 ~]# sealos init --passwd '123456' --master 192.168.16.106 --master 192.168.16.105 --node 192.168.16.104 --node 192.168.16.107 --pkg-url /root/soft/kube1.19.6.tar.gz --version v1.19.6

The deployment is complete.
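The `sealos init` line above repeats `--master` and `--node` once per host. As a rough sketch, the command can be assembled from host lists and printed for review before it is run. The helper name `build_sealos_init` is hypothetical, and only the flags already shown in this article are used.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print (not run) a sealos init command.
# Usage: build_sealos_init PASSWD PKG VERSION "MASTER_IPS" "NODE_IPS"
build_sealos_init() {
    local passwd="$1" pkg="$2" version="$3"
    local masters="$4" nodes="$5"
    local cmd="sealos init --passwd '$passwd'"
    local ip
    # One --master / --node flag per IP, in the order given.
    for ip in $masters; do cmd+=" --master $ip"; done
    for ip in $nodes; do cmd+=" --node $ip"; done
    cmd+=" --pkg-url $pkg --version $version"
    printf '%s\n' "$cmd"
}

# Reproduces the command used in this section.
build_sealos_init 123456 /root/soft/kube1.19.6.tar.gz v1.19.6 \
    "192.168.16.106 192.168.16.105" "192.168.16.104 192.168.16.107"
```

Printing the command first makes it easy to check the host list against the table in section 1 before anything is installed.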

2.4. Viewing cluster node information

[root@k8s-master1 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master1 Ready master 3m50s v1.19.6

k8s-master2 Ready master 3m11s v1.19.6

k8s-node1 Ready <none> 2m17s v1.19.6

k8s-node2 Ready <none> 2m19s v1.19.6

# detailed information

[root@k8s-master1 ~]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-master1 Ready master 2m55s v1.19.6 192.168.16.106 <none> CentOS Linux 7 (Core) 3.10.0-862.el7.x86_64 docker://19.3.12

k8s-master2 Ready master 2m18s v1.19.6 192.168.16.105 <none> CentOS Linux 7 (Core) 3.10.0-862.el7.x86_64 docker://19.3.12

k8s-node1 Ready <none> 83s v1.19.6 192.168.16.104 <none> CentOS Linux 7 (Core) 3.10.0-862.el7.x86_64 docker://19.3.12

k8s-node2 Ready <none> 85s v1.19.6 192.168.16.107 <none> CentOS Linux 7 (Core) 3.10.0-862.el7.x86_64 docker://19.3.12

3. Basic sealos operations

3.1. Creating an HA cluster

sealos init --passwd '123456' \

--master 192.168.0.2 --master 192.168.0.3 --master 192.168.0.4 \

--node 192.168.0.5 \

--pkg-url /root/kube1.20.0.tar.gz \

--version v1.20.0

3.2. Adding master nodes to an existing cluster

# add one master node

sealos join --master 192.168.0.6

# add several master nodes

sealos join --master 192.168.0.6 --master 192.168.0.7

# add several master nodes with consecutive IPs

sealos join --master 192.168.0.6-192.168.0.9 # or a range of consecutive IPs
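The `first-last` notation above names a run of consecutive addresses. The sketch below shows which hosts such a range would cover, assuming the range is inclusive on both ends and stays within one /24. It is an illustration only, not sealos's own parsing code, and `expand_ip_range` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: expand "A.B.C.X-A.B.C.Y" into one IP per line.
# Assumption of this sketch: both ends share the first three octets.
expand_ip_range() {
    local first="${1%-*}" last="${1#*-}"
    local prefix="${first%.*}"
    if [ "$prefix" != "${last%.*}" ]; then
        echo "range must stay within one /24: $1" >&2
        return 1
    fi
    local i
    # Walk the last octet from the first address to the last, inclusive.
    for ((i = ${first##*.}; i <= ${last##*.}; i++)); do
        printf '%s.%d\n' "$prefix" "$i"
    done
}

expand_ip_range 192.168.0.6-192.168.0.9
```

Listing the addresses this way is a quick check that a range does not accidentally include a host that should stay out of the cluster.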

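3.3. Adding worker nodes to an existing cluster

# add one worker node

sealos join --node 192.168.0.6

# add several worker nodes

sealos join --node 192.168.0.6 --node 192.168.0.7

# add several worker nodes with consecutive IPs

sealos join --node 192.168.0.6-192.168.0.9 # or a range of consecutive IPs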

3.4. Removing specific master nodes

# remove one master node

sealos clean --master 192.168.0.6

# remove several master nodes

sealos clean --master 192.168.0.6 --master 192.168.0.7

# remove several master nodes with consecutive IPs

sealos clean --master 192.168.0.6-192.168.0.9 # or a range of consecutive IPs

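3.5. Removing specific worker nodes

# remove one worker node

sealos clean --node 192.168.0.6

# remove several worker nodes

sealos clean --node 192.168.0.6 --node 192.168.0.7

# remove several worker nodes with consecutive IPs

sealos clean --node 192.168.0.6-192.168.0.9 # or a range of consecutive IPs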

3.6. Cleaning up the cluster

sealos clean --all

3.7. Backing up the cluster

sealos etcd save

4. Deploying the Harbor image registry

1. Download Harbor

https://github.com/goharbor/harbor/releases/download

2. Unpack Harbor

[root@k8s-master1 ~]# tar xf harbor-offline-installer-v1.6.1.tgz

[root@k8s-master1 ~]# cd harbor/

3. Configure Harbor

[root@k8s-master1 ~/harbor]# vim harbor.cfg

hostname = harbor.jiangxl.com

harbor_admin_password = admin

4. Deploy Harbor

[root@k8s-master1 ~/harbor]# ./install.sh

Visit http://harbor.jiangxl.com/harbor/projects

The username and password are both admin.

5. Deploying ingress-nginx

1. Get the ingress-nginx yaml files

[root@k8s-master1 ~/k8s1.19/ingress]# wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/nginx-0.30.0/deploy/static/mandatory.yaml

[root@k8s-master1 ~/k8s1.19/ingress]# wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/nginx-0.30.0/deploy/static/provider/baremetal/service-nodeport.yaml

2. Change the image address in mandatory.yaml to a mirror hosted in China

[root@k8s-master1 ~/k8s1.19/ingress]# vim mandatory.yaml

image: registry.cn-beijing.aliyuncs.com/google_registry/nginx-ingress-controller:0.30.0

# around line 220

3. Change the ports exposed by the ingress svc so that they map to host ports 443 and 80

[root@k8s-master1 ~/ingress]# vim service-nodeport.yaml

apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  type: NodePort
  ports:
    - name: http
      port: 80
      targetPort: 80
      protocol: TCP
      nodePort: 80
    - name: https
      port: 443
      targetPort: 443
      protocol: TCP
      nodePort: 443
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
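Kubernetes allocates NodePort values from 30000-32767 by default, so `nodePort: 80` and `nodePort: 443` are only accepted when kube-apiserver's `--service-node-port-range` has been widened to include them. The sketch below checks a port against a range; `port_in_range` is a hypothetical helper, and the range a given cluster actually uses has to be read from its own kube-apiserver manifest.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if PORT lies within RANGE ("low-high", inclusive).
port_in_range() {
    local port="$1" range="$2"
    local lo="${range%-*}" hi="${range#*-}"
    [ "$port" -ge "$lo" ] && [ "$port" -le "$hi" ]
}

# Check the two ports from service-nodeport.yaml against the default range.
for p in 80 443; do
    if port_in_range "$p" 30000-32767; then
        echo "nodePort $p fits the default range"
    else
        echo "nodePort $p needs a wider --service-node-port-range"
    fi
done
```

If the apiserver range has not been extended, `kubectl apply` rejects the Service with a port-range validation error instead of creating it.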

4. Create the resources

[root@k8s-master ~/k8s1.19/ingress]# kubectl apply -f ./

namespace/ingress-nginx created

configmap/nginx-configuration created

configmap/tcp-services created

configmap/udp-services created

serviceaccount/nginx-ingress-serviceaccount created

clusterrole.rbac.authorization.k8s.io/nginx-ingress-clusterrole created

role.rbac.authorization.k8s.io/nginx-ingress-role created

rolebinding.rbac.authorization.k8s.io/nginx-ingress-role-nisa-binding created

clusterrolebinding.rbac.authorization.k8s.io/nginx-ingress-clusterrole-nisa-binding created

deployment.apps/nginx-ingress-controller created

limitrange/ingress-nginx created

service/ingress-nginx created

5. Check the resource status

[root@k8s-master1 ~/ingress]# kubectl get all -n ingress-nginx

NAME READY STATUS RESTARTS AGE

pod/nginx-ingress-controller-766867958b-vp7fc 1/1 Running 0 36s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/ingress-nginx NodePort 10.111.10.249 <none> 80:80/TCP,443:443/TCP 36s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/nginx-ingress-controller 1/1 1 1 36s

NAME DESIRED CURRENT READY AGE

replicaset.apps/nginx-ingress-controller-766867958b 1 1 1 36s

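6. Deploying Rancher with a self-signed certificate on k8s using Helm

This section deploys a highly available rancher with helm and exposes it through ingress.

The rancher chart creates its own ingress, so no separate ingress resource is needed; only the certificate files for the ingress have to be prepared.

6.1. Preparing the tools

1. Download helm

https://sourceforge.net/projects/helm.mirror/files/latest/download

2. Unpack helm and put it on the PATH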

[root@k8s-master ~/soft]# tar xf helm.tar.gz

[root@k8s-master ~/soft]# cp helm /usr/bin/

[root@k8s-master ~/soft]# helm

3. Upload the TLS certificate generation tools

[root@k8s-master ~/soft]# tar xf TLS.tar.gz -C /root/

[root@k8s-master ~]# cd TLS/

[root@k8s-master1 ~/TLS]# ll

总用量 18852

-rw-r--r-- 1 root root 294 3月 10 15:11 ca-config.json

-rw-r--r-- 1 root root 960 3月 10 15:11 ca.csr

-rw-r--r-- 1 root root 212 3月 10 15:11 ca-csr.json

-rw------- 1 root root 1675 3月 10 15:11 ca-key.pem

-rw-r--r-- 1 root root 1273 3月 10 15:11 ca.pem

-rwxr-xr-x 1 root root 999 3月 10 15:11 certs.sh

-rwxr-xr-x 1 root root 10376657 10月 2 2019 cfssl

-rwxr-xr-x 1 root root 6595195 10月 2 2019 cfssl-certinfo

-rwxr-xr-x 1 root root 2277873 10月 2 2019 cfssljson

-rwxr-xr-x 1 root root 344 10月 3 2019 cfssl.sh

-rw-r--r-- 1 root root 195 3月 10 15:11 rancher-csr.json

-rw-r--r-- 1 root root 976 3月 10 15:11 rancher.teacher.com.cn.csr

-rw------- 1 root root 1675 3月 10 15:11 rancher.teacher.com.cn-key.pem

-rw-r--r-- 1 root root 1326 3月 10 15:11 rancher.teacher.com.cn.pem

4. Delete the certificate files that are not needed

# the deleted files were all generated during a previous run

[root@k8s-master ~/soft/TLS]# rm -rf ca*

[root@k8s-master ~/soft/TLS]# rm -rf rancher*

[root@k8s-master ~/soft/TLS]# ll

总用量 18816

-rwxr-xr-x 1 root root 999 3月 10 15:11 certs.sh

-rwxr-xr-x 1 root root 10376657 10月 2 2019 cfssl

-rwxr-xr-x 1 root root 6595195 10月 2 2019 cfssl-certinfo

-rwxr-xr-x 1 root root 2277873 10月 2 2019 cfssljson

-rwxr-xr-x 1 root root 344 10月 3 2019 cfssl.sh

6.2. Generating the self-signed certificate

1. Install the commands needed to generate certificates

[root@k8s-master ~/TLS]# cat cfssl.sh

#curl -L https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -o /usr/local/bin/cfssl

#curl -L https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -o /usr/local/bin/cfssljson

#curl -L https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -o /usr/local/bin/cfssl-certinfo

cp -rf cfssl cfssl-certinfo cfssljson /usr/local/bin

chmod +x /usr/local/bin/cfssl*

[root@k8s-master ~/soft/TLS]# ./cfssl.sh

2. Edit the domain name used by the certificate

[root@k8s-master ~/soft/TLS]# vim certs.sh

cat > rancher-csr.json <<EOF

{

"CN": "rancher.jiangxl.com", # change to your own domain (annotation only; JSON has no comments, so do not keep it in the file)

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing"

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes rancher-csr.json | cfssljson -bare rancher.jiangxl.com # change to your own domain

[root@k8s-master ~/soft/TLS]# ./certs.sh

2021/05/07 15:08:05 [INFO] generating a new CA key and certificate from CSR

2021/05/07 15:08:05 [INFO] generate received request

2021/05/07 15:08:05 [INFO] received CSR

2021/05/07 15:08:05 [INFO] generating key: rsa-2048

2021/05/07 15:08:05 [INFO] encoded CSR

2021/05/07 15:08:05 [INFO] signed certificate with serial number 101654790097278429805640175100193242973661583912

2021/05/07 15:08:05 [INFO] generate received request

2021/05/07 15:08:05 [INFO] received CSR

2021/05/07 15:08:05 [INFO] generating key: rsa-2048

2021/05/07 15:08:06 [INFO] encoded CSR

2021/05/07 15:08:06 [INFO] signed certificate with serial number 256114560036375503495441829622686649009249955560

2021/05/07 15:08:06 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

6.3. Creating the namespace for rancher

[root@k8s-master ~]# kubectl create ns cattle-system

namespace/cattle-system created

6.4. Adding the helm repository

[root@k8s-master ~]# helm repo add rancher-latest https://releases.rancher.com/server-charts/latest

"rancher-latest" has been added to your repositories

[root@k8s-master ~]# helm repo ls

NAME URL

rancher-latest https://releases.rancher.com/server-charts/latest

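6.5. Creating the certificate secrets required by rancher and ingress

If rancher is exposed through ingress, run the commands below to create the certificates.

1. Create the certificate secret used by the ingress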

[root@k8s-master1 ~/TLS]# kubectl -n cattle-system create secret tls tls-rancher-ingress --cert=rancher.jiangxl.com.pem --key=rancher.jiangxl.com-key.pem

secret/tls-rancher-ingress created

2. Create the CA certificate secret required by rancher

[root@k8s-master1 ~/TLS]# cp /etc/kubernetes/pki/ca.crt /etc/kubernetes/pki/cacerts.pem

[root@k8s-master1 ~/TLS]# kubectl -n cattle-system create secret generic tls-ca --from-file=cacerts.pem=/etc/kubernetes/pki/cacerts.pem

secret/tls-ca created

6.6. Creating HA rancher with helm

1. Create rancher

# tls-rancher-ingress below is the ingress secret created in 6.5

[root@k8s-master1 ~/TLS]# helm install rancher rancher-latest/rancher \

--namespace cattle-system \

--set hostname=rancher.jiangxl.com \

--set ingress.tls.source=tls-rancher-ingress \

--set privateCA=true

NAME: rancher

LAST DEPLOYED: Sat May 8 14:31:27 2021

NAMESPACE: cattle-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

Rancher Server has been installed.

NOTE: Rancher may take several minutes to fully initialize. Please standby while Certificates are being issued and Ingress comes up.

Check out our docs at https://rancher.com/docs/rancher/v2.x/en/

Browse to https://rancher.jiangxl.com

Happy Containering!

2. Check the rancher release that was created

[root@k8s-master1 ~/TLS]# helm ls -n cattle-system

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

rancher cattle-system 1 2021-05-08 14:31:27.494706017 +0800 CST deployed rancher-2.5.8 v2.5.8

6.7. Viewing the rancher pods running in k8s

[root@k8s-master1 ~/TLS]# kubectl get all -n cattle-system

NAME READY STATUS RESTARTS AGE

pod/rancher-5bd5d98557-k5cd7 1/1 Running 0 38s

pod/rancher-5bd5d98557-pmhzh 1/1 Running 0 38s

pod/rancher-5bd5d98557-vgtwq 1/1 Running 0 38s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/rancher ClusterIP 10.108.97.195 <none> 80/TCP,443/TCP 38s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/rancher 3/3 3 3 38s

NAME DESIRED CURRENT READY AGE

replicaset.apps/rancher-5bd5d98557 3 3 3 38s

6.9. Viewing the status of all rancher resources

[root@k8s-master1 ~/ingress]# kubectl get pod,deploy,svc,ingress,secret -n cattle-system

NAME READY STATUS RESTARTS AGE

pod/rancher-5bd5d98557-5tphq 1/1 Running 0 11m

pod/rancher-5bd5d98557-bjcjh 1/1 Running 0 11m

pod/rancher-5bd5d98557-rhkpp 1/1 Running 0 11m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/rancher 3/3 3 3 11m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/rancher ClusterIP 10.111.131.250 <none> 80/TCP,443/TCP 11m

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress.extensions/rancher <none> rancher.jiangxl.com 10.111.10.249 80, 443 11m

NAME TYPE DATA AGE

secret/default-token-clf8n kubernetes.io/service-account-token 3 12m

secret/rancher-token-lhgn2 kubernetes.io/service-account-token 3 11m

secret/serving-cert kubernetes.io/tls 2 61s

secret/sh.helm.release.v1.rancher.v1 helm.sh/release.v1 1 11m

secret/tls-ca Opaque 1 2m39s

secret/tls-rancher kubernetes.io/tls 2 61s

secret/tls-rancher-ingress kubernetes.io/tls 2 12m

secret/tls-rancher-internal-ca kubernetes.io/tls 2 61s

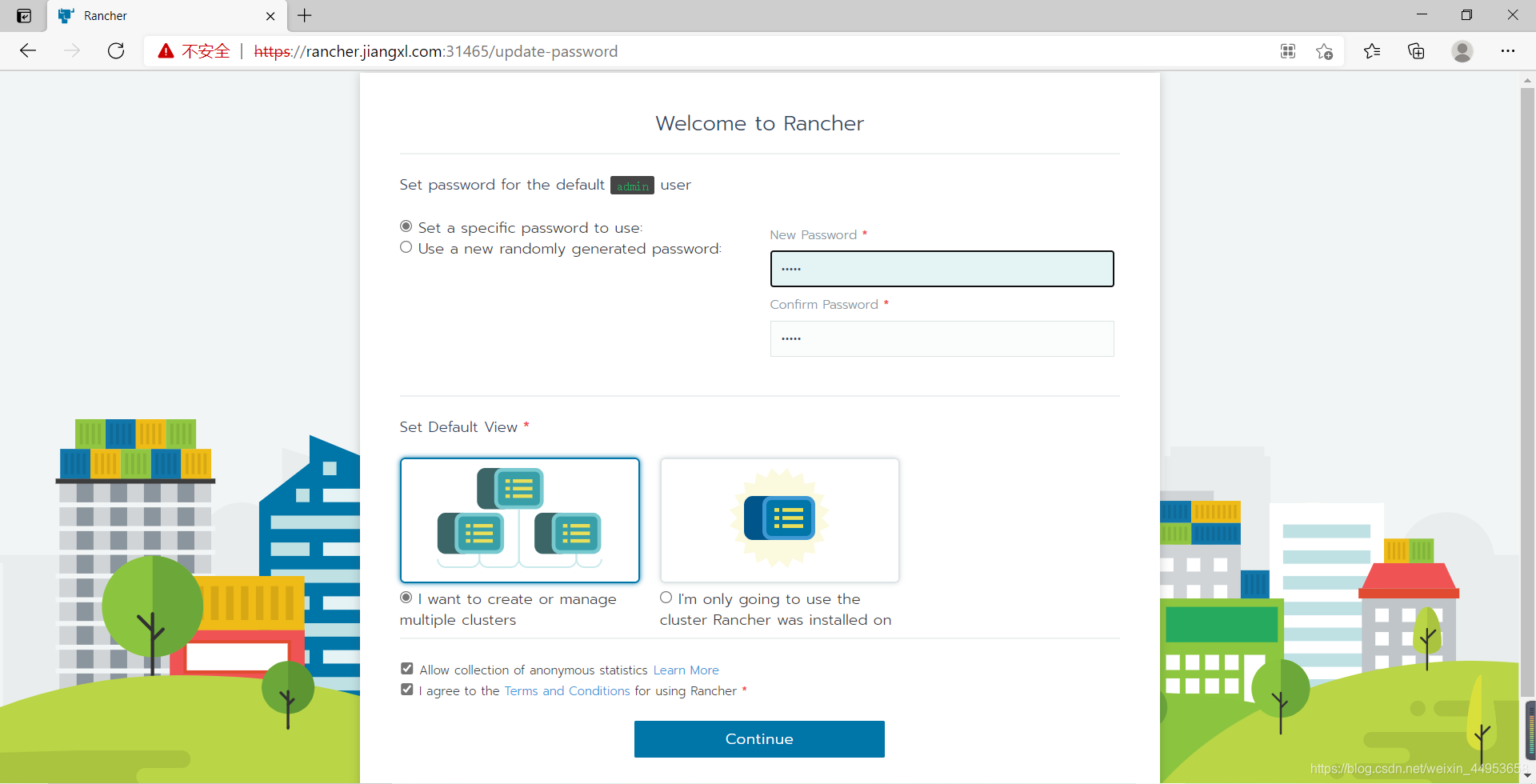

6.10. Accessing rancher

Rancher is exposed through the ingress under its domain name, so it is reached at https://<any cluster node IP>:<ingress https port>.

1) Find the ingress https port

[root@k8s-master1 ~/k8s1.19/ingress]# kubectl get svc -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx NodePort 10.99.98.227 <none> 80:80/TCP,443:443/TCP 166m

2) Visit https://rancher.jiangxl.com and set the password

3) Log in to rancher

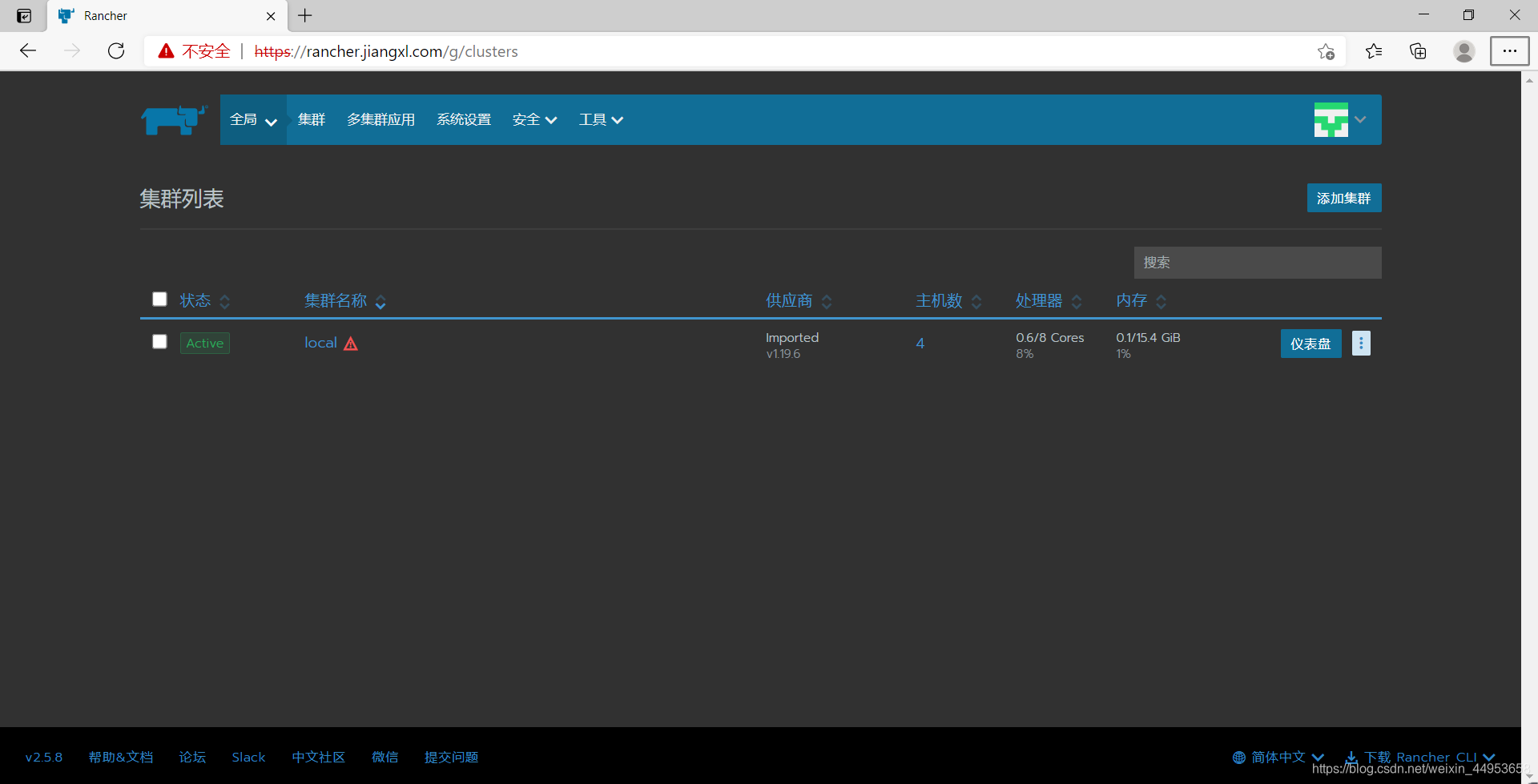

4) View the cluster

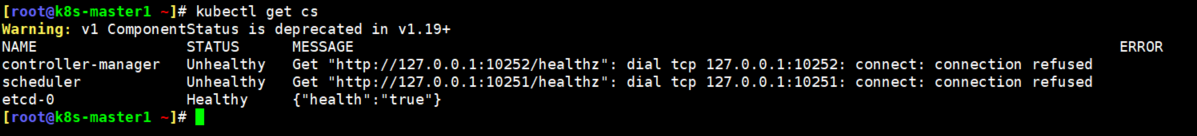

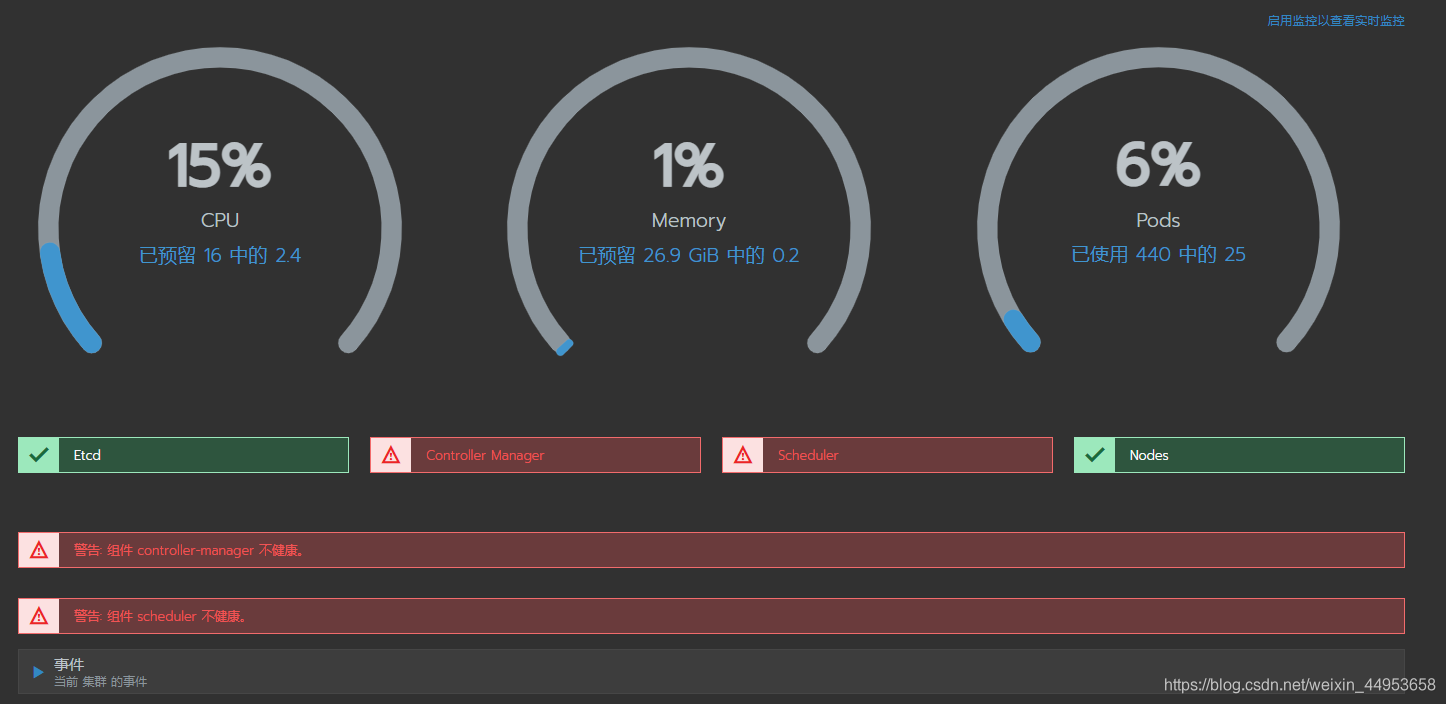

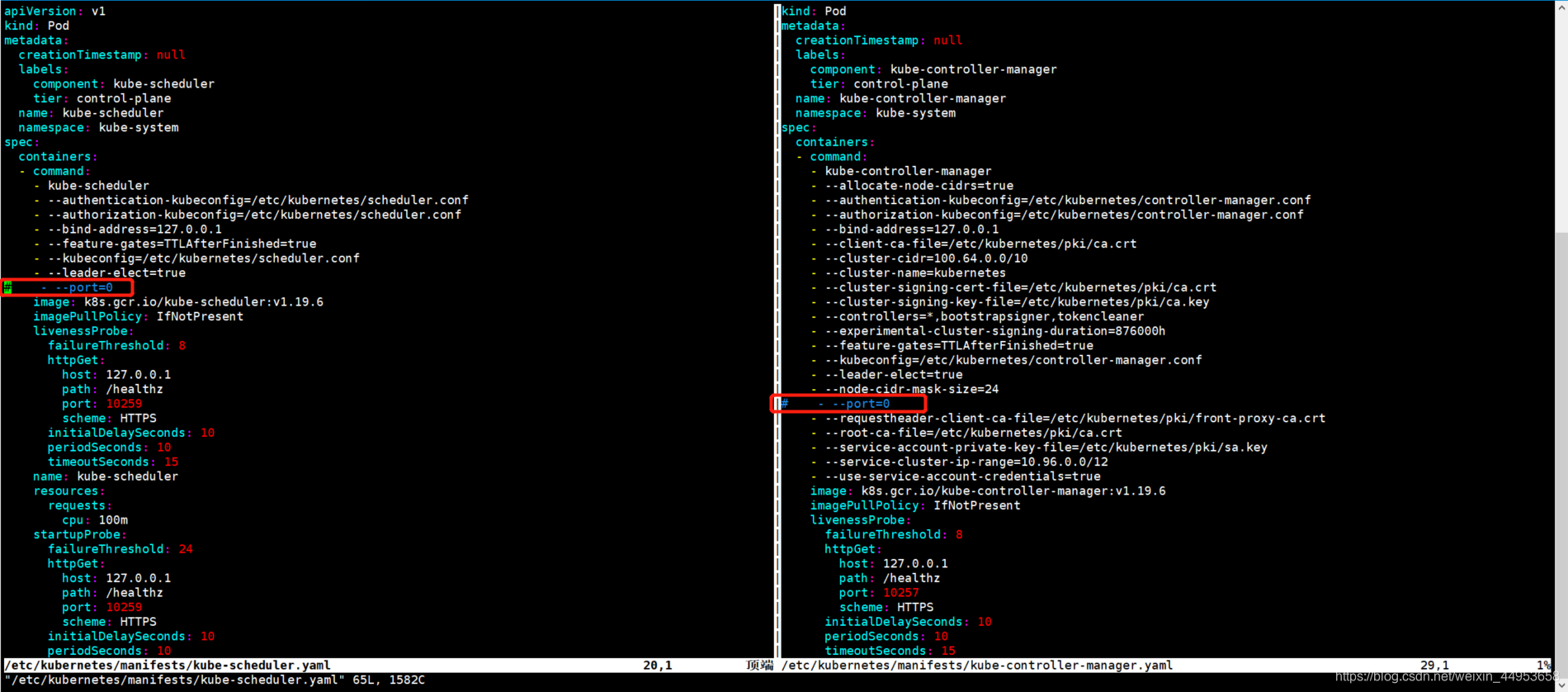

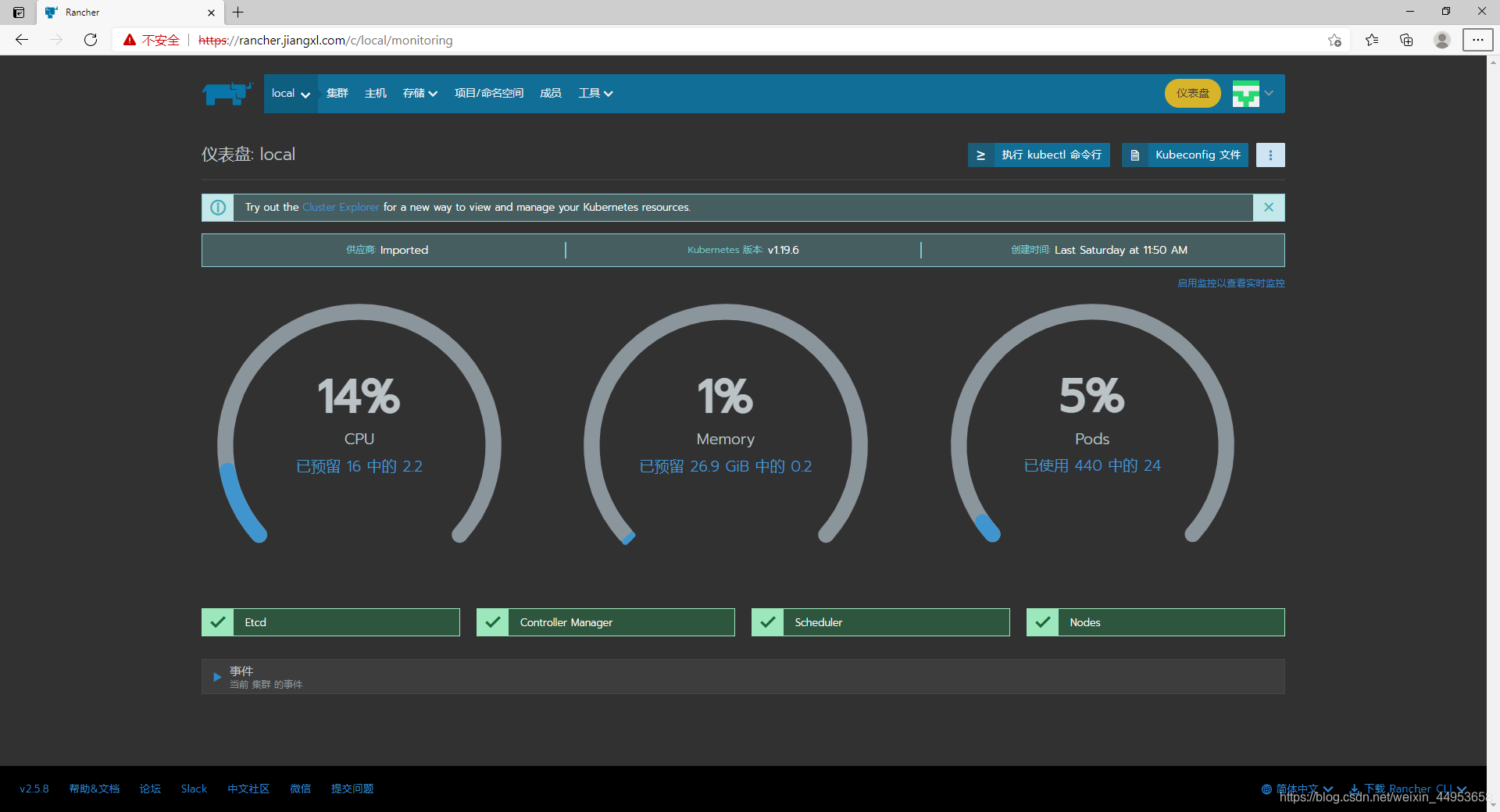
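Opening https://rancher.jiangxl.com in a browser only works if the client can resolve that name to a cluster node. The article does not say how the name was resolved; one common approach, assumed here, is a hosts-file entry on the client. The helper name `ensure_hosts_entry` is hypothetical, and the IP used in the example is simply the first master from section 1.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add "IP NAME" to a hosts file unless NAME is already mapped.
ensure_hosts_entry() {
    local hosts_file="$1" ip="$2" name="$3"
    # Look for NAME as an exact hostname field on any non-comment line.
    if awk -v n="$name" '
            $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$hosts_file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}

# Example (as root, on the machine running the browser):
#   ensure_hosts_entry /etc/hosts 192.168.16.106 rancher.jiangxl.com
```

Because the check runs before the append, calling the function repeatedly leaves a single entry in the file.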

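6.11. Fixing the rancher dashboard showing red

After a recent kubeadm-based K8s installation, the controller-manager and scheduler components reported the status Unhealthy.

[root@k8s-master1 ~]# netstat -lnpt | egrep '10251|10252'

Neither port is listening, and the status also looks bad in rancher.

The fix is as follows.

Perform these steps on every master node.

vim /etc/kubernetes/manifests/kube-scheduler.yaml

Comment out the line containing port=0.

vim /etc/kubernetes/manifests/kube-controller-manager.yaml

Comment out the line containing port=0.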

systemctl restart kubelet

The dashboard is back to normal.
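The manual vim edit above can also be scripted. This is a minimal sketch that comments out the `- --port=0` argument in a static pod manifest, assuming GNU sed and assuming the flag sits on its own list line as in kubeadm-generated manifests. The function name `comment_out_port_zero` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out the "- --port=0" argument in a manifest.
# Assumes GNU sed and that the flag is alone on its line.
comment_out_port_zero() {
    local manifest="$1"
    [ -f "$manifest" ] || { echo "no such file: $manifest" >&2; return 1; }
    # Keep the indentation, insert "# " before the list item.
    # Lines that are already commented no longer match, so reruns are harmless.
    sed -ri 's/^([[:space:]]*)(- --port=0[[:space:]]*)$/\1# \2/' "$manifest"
}

# Example (as root, on every master node):
#   comment_out_port_zero /etc/kubernetes/manifests/kube-scheduler.yaml
#   comment_out_port_zero /etc/kubernetes/manifests/kube-controller-manager.yaml
```

Trying the function on a copy of the manifest first is a cheap way to confirm that only the intended line changes.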

5. Errors encountered when deploying an HA k8s cluster with sealos

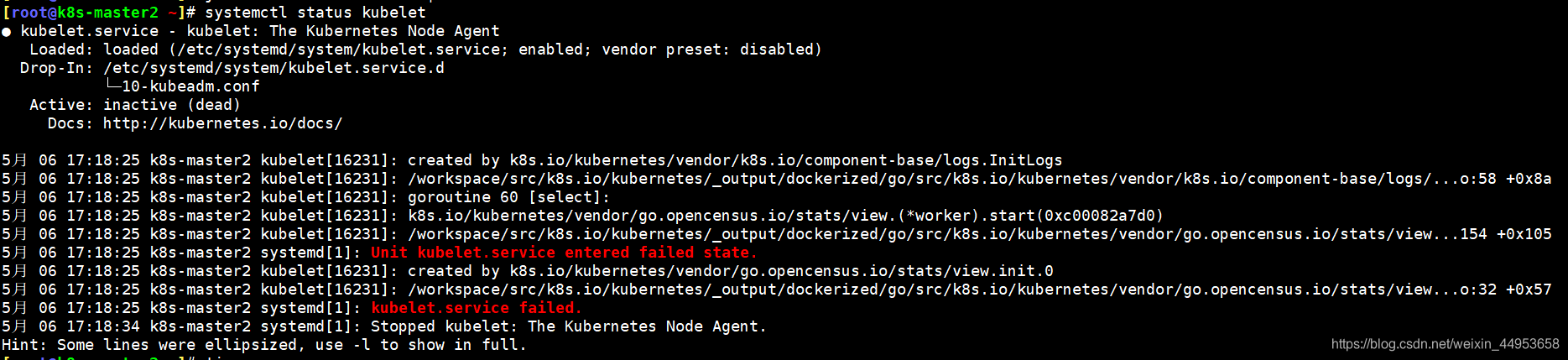

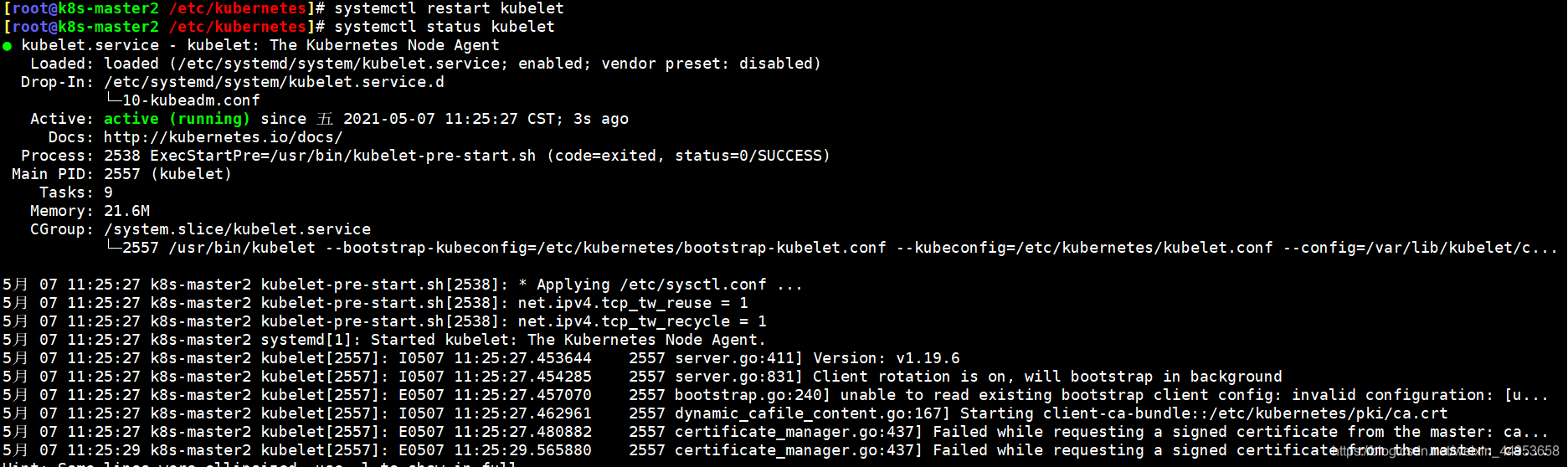

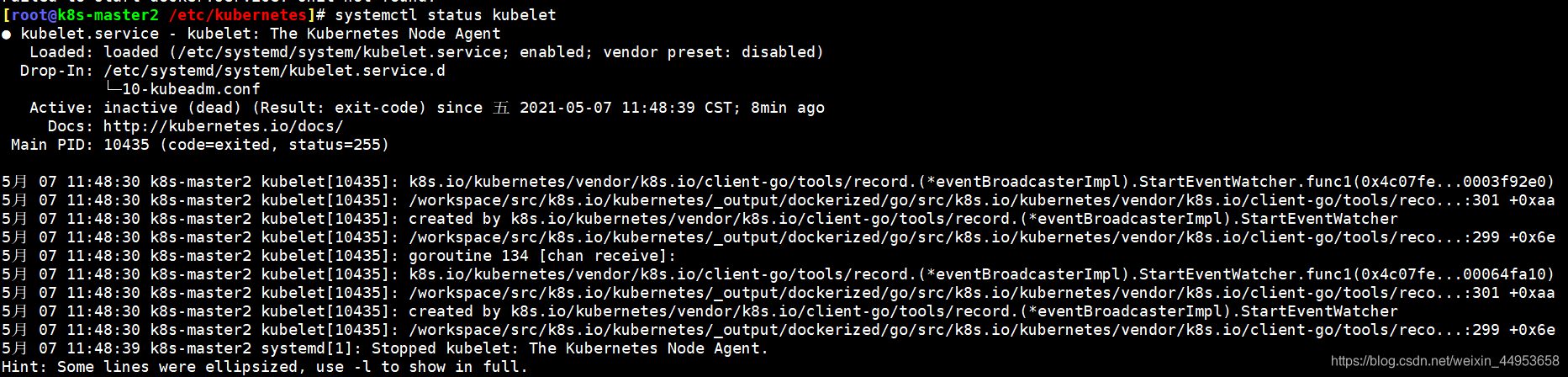

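5.1. Fixing the kubelet startup error

k8s-master2 previously had k8s 1.15 installed. For various reasons, installing a newer k8s on it again with sealos fails with the following error: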

[root@k8s-master2 ~]# systemctl status kubelet

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/etc/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Drop-In: /etc/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: inactive (dead)

Docs: http://kubernetes.io/docs/

5月 06 17:18:25 k8s-master2 kubelet[16231]: created by k8s.io/kubernetes/vendor/k8s.io/component-base/logs.InitLogs

5月 06 17:18:25 k8s-master2 kubelet[16231]: /workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/k8s.io/component-base/logs/...o:58 +0x8a

5月 06 17:18:25 k8s-master2 kubelet[16231]: goroutine 60 [select]:

5月 06 17:18:25 k8s-master2 kubelet[16231]: k8s.io/kubernetes/vendor/go.opencensus.io/stats/view.(*worker).start(0xc00082a7d0)

5月 06 17:18:25 k8s-master2 kubelet[16231]: /workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/go.opencensus.io/stats/view...154 +0x105

5月 06 17:18:25 k8s-master2 systemd[1]: Unit kubelet.service entered failed state.

5月 06 17:18:25 k8s-master2 kubelet[16231]: created by k8s.io/kubernetes/vendor/go.opencensus.io/stats/view.init.0

5月 06 17:18:25 k8s-master2 kubelet[16231]: /workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/go.opencensus.io/stats/view...o:32 +0x57

5月 06 17:18:25 k8s-master2 systemd[1]: kubelet.service failed.

5月 06 17:18:34 k8s-master2 systemd[1]: Stopped kubelet: The Kubernetes Node Agent.

Hint: Some lines were ellipsized, use -l to show in full.

Troubleshooting approach: start with the kubelet log, which contains the most detail.

[root@k8s-master2 ~]# journalctl -xeu kubelet | less

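Careful inspection showed that the file bootstrap-kubelet.conf was missing. A fresh machine does not need bootstrap-kubelet.conf to install a k8s cluster; this error appears only because k8s had been installed on this machine before.

Fix: a copy of /etc/kubernetes/admin.conf works as bootstrap-kubelet.conf, so a single cp is enough.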

[root@k8s-master2 ~]# cd /etc/kubernetes/

[root@k8s-master2 /etc/kubernetes]# cp admin.conf bootstrap-kubelet.conf

[root@k8s-master2 /etc/kubernetes]# systemctl restart kubelet

After the restart the problem is resolved.
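The copy step above can be wrapped so that it never overwrites an existing file. This is a sketch under the article's own assumption that a copy of admin.conf is an acceptable stand-in; the function name `ensure_bootstrap_kubeconfig` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create bootstrap-kubelet.conf from admin.conf if it is missing.
# DIR defaults to /etc/kubernetes; pass another directory to try it on a copy.
ensure_bootstrap_kubeconfig() {
    local dir="${1:-/etc/kubernetes}"
    if [ -f "$dir/bootstrap-kubelet.conf" ]; then
        echo "bootstrap-kubelet.conf already present, nothing to do"
        return 0
    fi
    [ -f "$dir/admin.conf" ] || { echo "missing $dir/admin.conf" >&2; return 1; }
    cp "$dir/admin.conf" "$dir/bootstrap-kubelet.conf"
}

# Example (as root on the affected master), followed by the restart shown above:
#   ensure_bootstrap_kubeconfig && systemctl restart kubelet
```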

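If docker and k8s were completely removed from the machine and deploying k8s with sealos still fails with a kubelet error, docker probably did not install successfully. Check whether the docker packages are complete and whether the docker service is running. If docker failed to install, kubelet is bound to fail, because in a kubeadm-installed k8s the components run as pods.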
