Kubernetes 1.22 binary installation

1. Download the installation packages

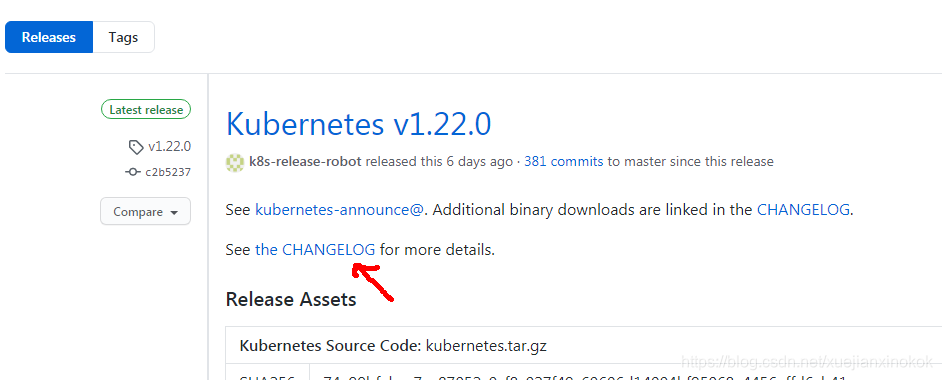

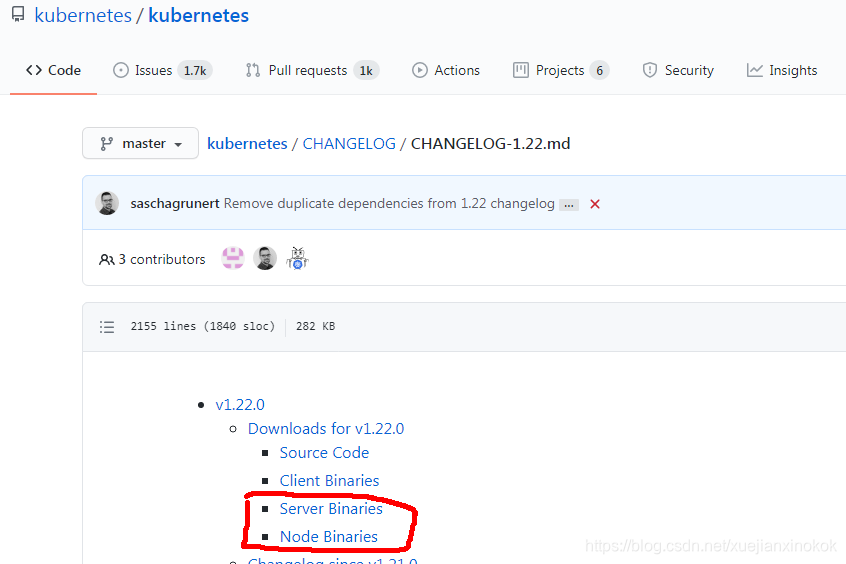

Download Kubernetes

Open the GitHub release page "Release Kubernetes v1.22.0 · kubernetes/kubernetes" and click the CHANGELOG link.

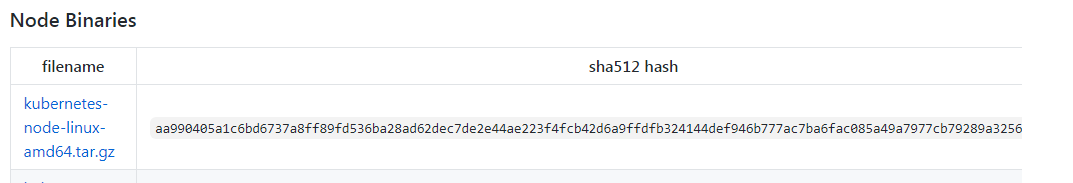

Download the Server and Node binary packages.

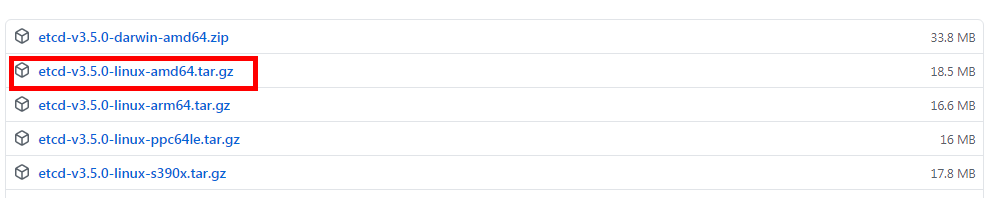

Download etcd 3.5

Release v3.5.0 · etcd-io/etcd · GitHub

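Download the linux-amd64 build; the shell prompt in step 5 shows the unpacked directory etcd-v3.5.0-linux-amd64.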

3. Configure /etc/hosts identically on all three servers:

[root@k8s01 back]# more /etc/hosts

172.16.10.138 k8s01

172.16.10.137 k8s02

172.16.10.139 k8s03
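Because the same three entries go on every server, this step can be scripted. The sketch below appends an entry only when it is missing, so it can be run repeatedly. The function names `add_host_entry` and `add_all_hosts` and the optional file argument are illustrative and not part of the original procedure.

```shell
#!/bin/sh
# add_host_entry IP NAME [FILE]: append "IP NAME" to FILE (default /etc/hosts)
# unless a line with that IP and name is already present.
add_host_entry() {
    ip=$1
    name=$2
    file=${3:-/etc/hosts}
    if ! grep -qE "^${ip}[[:space:]]+${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# add_all_hosts [FILE]: add the three cluster nodes used in this article.
add_all_hosts() {
    add_host_entry 172.16.10.138 k8s01 "$@"
    add_host_entry 172.16.10.137 k8s02 "$@"
    add_host_entry 172.16.10.139 k8s03 "$@"
}
```

Run `add_all_hosts` as root on each server; passing a file name instead writes to that file, which is handy for a dry run.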

4. Uninstall the version previously installed with kubeadm

#!/bin/bash

kubeadm reset -f

modprobe -r ipip

lsmod

rm -rf ~/.kube/

rm -rf /etc/kubernetes/

rm -rf /etc/systemd/system/kubelet.service.d

rm -rf /etc/systemd/system/kubelet.service

rm -rf /usr/bin/kube*

rm -rf /etc/cni

rm -rf /opt/cni

rm -rf /var/lib/etcd

rm -rf /var/etcd

yum clean all

yum remove kube*

5. Install and configure etcd

On all three machines (k8s01, k8s02, k8s03):

Copy the three executables etcd, etcdctl, and etcdutl to /usr/bin:

cp etcd* /usr/bin

Create the etcd systemd unit file at /usr/lib/systemd/system/etcd.service:

[root@k8s01 etcd-v3.5.0-linux-amd64]# cat /usr/lib/systemd/system/etcd.service

# /usr/lib/systemd/system/etcd.service

[Unit]

Description=etcd key-value store

Documentation=https://github.com/etcd-io/etcd

After=network.target

[Service]

EnvironmentFile=/etc/etcd/etcd.conf

ExecStart=/usr/bin/etcd

Restart=always

[Install]

WantedBy=multi-user.target

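Create the CA certificate

The -nodes option is not the English word "nodes"; it stands for "no DES". When it is given, OpenSSL does not encrypt the private key it writes (the explanation was originally written about PKCS#12 files).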

rm -rf /etc/kubernetes/pki/

mkdir -p /etc/kubernetes/pki/

cd /etc/kubernetes/pki/

openssl genrsa -out ca.key 2048

openssl req -x509 -new -nodes -key ca.key -subj "/CN=172.16.10.138" -days 36500 -out ca.crt

Create the certificate configuration file and the certificates

The certificate configuration file contains:

[root@k8s01 back]# cat /etc/etcd/etcd_ssl.cnf

# etcd_ssl.cnf

[ req ]

req_extensions = v3_req

distinguished_name = req_distinguished_name

[ req_distinguished_name ]

[ v3_req ]

basicConstraints = CA:FALSE

keyUsage = nonRepudiation, digitalSignature, keyEncipherment

subjectAltName = @alt_names

[ alt_names ]

IP.1 = 172.16.10.138

IP.2 = 172.16.10.137

IP.3 = 172.16.10.139

DNS.1 = k8s01

DNS.2 = k8s02

DNS.3 = k8s03

# end etcd_ssl.cnf

mkdir -p /etc/etcd/pki/

cd /etc/etcd/pki/

openssl genrsa -out etcd_server.key 2048

openssl req -new -key etcd_server.key -config /etc/etcd/etcd_ssl.cnf -subj "/CN=etcd-server" -out etcd_server.csr

openssl x509 -req -in etcd_server.csr -CA /etc/kubernetes/pki/ca.crt -CAkey /etc/kubernetes/pki/ca.key -CAcreateserial -days 36500 -extensions v3_req -extfile /etc/etcd/etcd_ssl.cnf -out etcd_server.crt

openssl genrsa -out etcd_client.key 2048

openssl req -new -key etcd_client.key -config /etc/etcd/etcd_ssl.cnf -subj "/CN=etcd-client" -out etcd_client.csr

openssl x509 -req -in etcd_client.csr -CA /etc/kubernetes/pki/ca.crt -CAkey /etc/kubernetes/pki/ca.key -CAcreateserial -days 36500 -extensions v3_req -extfile /etc/etcd/etcd_ssl.cnf -out etcd_client.crt
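Before copying the certificates to the other nodes, it may be worth confirming that each one really chains to the CA created earlier. A minimal sketch using `openssl verify`; the helper name `verify_cert` is made up for illustration.

```shell
# verify_cert CA_CERT CERT: succeed only if CERT was signed by CA_CERT.
verify_cert() {
    openssl verify -CAfile "$1" "$2" >/dev/null 2>&1
}
```

For example, `verify_cert /etc/kubernetes/pki/ca.crt /etc/etcd/pki/etcd_server.crt && echo OK`, and the same for etcd_client.crt.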

Create the etcd data directory

mkdir -p /etc/etcd/data

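Create the configuration file on each node. The file for k8s01 is shown first, followed by those for k8s02 and k8s03: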

[root@k8s01 pki]# cat /etc/etcd/etcd.conf

# /etc/etcd/etcd.conf - node 1

ETCD_NAME=etcd1

ETCD_DATA_DIR=/etc/etcd/data

ETCD_CERT_FILE=/etc/etcd/pki/etcd_server.crt

ETCD_KEY_FILE=/etc/etcd/pki/etcd_server.key

ETCD_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt

ETCD_CLIENT_CERT_AUTH=true

ETCD_LISTEN_CLIENT_URLS=https://172.16.10.138:2379

ETCD_ADVERTISE_CLIENT_URLS=https://172.16.10.138:2379

ETCD_PEER_CERT_FILE=/etc/etcd/pki/etcd_server.crt

ETCD_PEER_KEY_FILE=/etc/etcd/pki/etcd_server.key

ETCD_PEER_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt

ETCD_LISTEN_PEER_URLS=https://172.16.10.138:2380

ETCD_INITIAL_ADVERTISE_PEER_URLS=https://172.16.10.138:2380

ETCD_INITIAL_CLUSTER_TOKEN=etcd-cluster

ETCD_INITIAL_CLUSTER="etcd1=https://172.16.10.138:2380,etcd2=https://172.16.10.137:2380,etcd3=https://172.16.10.139:2380"

ETCD_INITIAL_CLUSTER_STATE=new

--------------------------------------------------

# /etc/etcd/etcd.conf - node 2

ETCD_NAME=etcd2

ETCD_DATA_DIR=/etc/etcd/data

ETCD_CERT_FILE=/etc/etcd/pki/etcd_server.crt

ETCD_KEY_FILE=/etc/etcd/pki/etcd_server.key

ETCD_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt

ETCD_CLIENT_CERT_AUTH=true

ETCD_LISTEN_CLIENT_URLS=https://172.16.10.137:2379

ETCD_ADVERTISE_CLIENT_URLS=https://172.16.10.137:2379

ETCD_PEER_CERT_FILE=/etc/etcd/pki/etcd_server.crt

ETCD_PEER_KEY_FILE=/etc/etcd/pki/etcd_server.key

ETCD_PEER_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt

ETCD_LISTEN_PEER_URLS=https://172.16.10.137:2380

ETCD_INITIAL_ADVERTISE_PEER_URLS=https://172.16.10.137:2380

ETCD_INITIAL_CLUSTER_TOKEN=etcd-cluster

ETCD_INITIAL_CLUSTER="etcd1=https://172.16.10.138:2380,etcd2=https://172.16.10.137:2380,etcd3=https://172.16.10.139:2380"

ETCD_INITIAL_CLUSTER_STATE=new

--------------------------------------------------------------

# /etc/etcd/etcd.conf - node 3

ETCD_NAME=etcd3

ETCD_DATA_DIR=/etc/etcd/data

ETCD_CERT_FILE=/etc/etcd/pki/etcd_server.crt

ETCD_KEY_FILE=/etc/etcd/pki/etcd_server.key

ETCD_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt

ETCD_CLIENT_CERT_AUTH=true

ETCD_LISTEN_CLIENT_URLS=https://172.16.10.139:2379

ETCD_ADVERTISE_CLIENT_URLS=https://172.16.10.139:2379

ETCD_PEER_CERT_FILE=/etc/etcd/pki/etcd_server.crt

ETCD_PEER_KEY_FILE=/etc/etcd/pki/etcd_server.key

ETCD_PEER_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt

ETCD_LISTEN_PEER_URLS=https://172.16.10.139:2380

ETCD_INITIAL_ADVERTISE_PEER_URLS=https://172.16.10.139:2380

ETCD_INITIAL_CLUSTER_TOKEN=etcd-cluster

ETCD_INITIAL_CLUSTER="etcd1=https://172.16.10.138:2380,etcd2=https://172.16.10.137:2380,etcd3=https://172.16.10.139:2380"

ETCD_INITIAL_CLUSTER_STATE=new

-----------------------------------------------------------
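The three files differ only in the member name and the node IP, so they can be generated from one template rather than edited by hand. The function below is a sketch under that assumption; `render_etcd_conf` is an illustrative name, and the values are copied from the listings above.

```shell
# render_etcd_conf NAME IP: print an etcd.conf for one cluster member to stdout.
render_etcd_conf() {
    name=$1
    ip=$2
    cluster="etcd1=https://172.16.10.138:2380,etcd2=https://172.16.10.137:2380,etcd3=https://172.16.10.139:2380"
    cat <<EOF
ETCD_NAME=${name}
ETCD_DATA_DIR=/etc/etcd/data
ETCD_CERT_FILE=/etc/etcd/pki/etcd_server.crt
ETCD_KEY_FILE=/etc/etcd/pki/etcd_server.key
ETCD_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt
ETCD_CLIENT_CERT_AUTH=true
ETCD_LISTEN_CLIENT_URLS=https://${ip}:2379
ETCD_ADVERTISE_CLIENT_URLS=https://${ip}:2379
ETCD_PEER_CERT_FILE=/etc/etcd/pki/etcd_server.crt
ETCD_PEER_KEY_FILE=/etc/etcd/pki/etcd_server.key
ETCD_PEER_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt
ETCD_LISTEN_PEER_URLS=https://${ip}:2380
ETCD_INITIAL_ADVERTISE_PEER_URLS=https://${ip}:2380
ETCD_INITIAL_CLUSTER_TOKEN=etcd-cluster
ETCD_INITIAL_CLUSTER="${cluster}"
ETCD_INITIAL_CLUSTER_STATE=new
EOF
}
```

On k8s02, for instance, `render_etcd_conf etcd2 172.16.10.137 > /etc/etcd/etcd.conf` would produce the second file.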

Start the etcd service on each node, then check cluster health and membership:

systemctl restart etcd && systemctl enable etcd

[root@dellR710 pki]# etcdctl --cacert=/etc/kubernetes/pki/ca.crt --cert=/etc/etcd/pki/etcd_client.crt --key=/etc/etcd/pki/etcd_client.key --endpoints=https://172.16.10.138:2379,https://172.16.10.137:2379,https://172.16.10.139:2379 endpoint health

https://172.16.10.138:2379 is healthy: successfully committed proposal: took = 24.030101ms

https://172.16.10.137:2379 is healthy: successfully committed proposal: took = 33.012128ms

https://172.16.10.139:2379 is healthy: successfully committed proposal: took = 47.740017ms

[root@dellR710 pki]# etcdctl --cacert=/etc/kubernetes/pki/ca.crt --cert=/etc/etcd/pki/etcd_client.crt --key=/etc/etcd/pki/etcd_client.key --endpoints=https://172.16.10.138:2379,https://172.16.10.137:2379,https://172.16.10.139:2379 member list

54da171dcec87549, started, etcd2, https://172.16.10.137:2380, https://172.16.10.137:2379, false

bd4e35496584eba1, started, etcd3, https://172.16.10.139:2380, https://172.16.10.139:2379, false

d21f7997f8ebad04, started, etcd1, https://172.16.10.138:2380, https://172.16.10.138:2379, false
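The TLS flags make every etcdctl call very long. etcdctl also reads each flag from an environment variable with the `ETCDCTL_` prefix, so the settings can be exported once per shell session. The values below are the ones used in the commands above.

```shell
# Export the connection settings once; etcdctl picks them up as
# defaults for --cacert, --cert, --key and --endpoints.
export ETCDCTL_CACERT=/etc/kubernetes/pki/ca.crt
export ETCDCTL_CERT=/etc/etcd/pki/etcd_client.crt
export ETCDCTL_KEY=/etc/etcd/pki/etcd_client.key
export ETCDCTL_ENDPOINTS=https://172.16.10.138:2379,https://172.16.10.137:2379,https://172.16.10.139:2379
```

After that, `etcdctl endpoint health` and `etcdctl member list` should work without further flags.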

6. Configure Kubernetes

Configure kube-apiserver

Certificate request configuration file:

[root@k8s01 kubernetes]# cat master_ssl.cnf

# master_ssl.cnf

[req]

req_extensions = v3_req

distinguished_name = req_distinguished_name

[req_distinguished_name]

[ v3_req ]

basicConstraints = CA:FALSE

keyUsage = nonRepudiation, digitalSignature, keyEncipherment

subjectAltName = @alt_names

[alt_names]

DNS.1 = kubernetes

DNS.2 = kubernetes.default

DNS.3 = kubernetes.default.svc

DNS.4 = kubernetes.default.svc.cluster.local

DNS.5 = k8s01

DNS.6 = k8s02

DNS.7 = k8s03

IP.1 = 169.169.0.1

IP.2 = 172.16.10.138

IP.3 = 172.16.10.137

IP.4 = 172.16.10.139

IP.5 = 172.16.10.100

IP.6 = 127.0.0.1

IP.7 = 192.168.0.1

# end master_ssl.cnf

# Create the API server certificate, signed by the CA

cd /etc/kubernetes/pki

rm -rf apiserver.*

rm -rf client.*

openssl genrsa -out apiserver.key 2048

openssl req -new -key apiserver.key -config /etc/kubernetes/master_ssl.cnf -subj "/CN=172.16.10.138" -out apiserver.csr

openssl x509 -req -in apiserver.csr -CA ca.crt -CAkey ca.key -CAcreateserial -days 36500 -extensions v3_req -extfile /etc/kubernetes/master_ssl.cnf -out apiserver.crt

# Create the client certificate, signed by the CA

openssl genrsa -out client.key 2048

openssl req -new -key client.key -subj "/CN=admin" -out client.csr

openssl x509 -req -in client.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out client.crt -days 36500
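A missing subjectAltName entry is a common cause of TLS errors later on, so it can help to check that the API server certificate carries the addresses listed in master_ssl.cnf. The helper below is a sketch; `cert_has_san` is an illustrative name, and it does a plain substring match on the text dump, so `IP Address:169.169.0.1` would also match a longer address that starts the same way.

```shell
# cert_has_san CERT VALUE: succeed if VALUE (for example
# "IP Address:169.169.0.1" or "DNS:kubernetes.default") appears in the
# certificate's Subject Alternative Name list.
cert_has_san() {
    openssl x509 -in "$1" -noout -text \
        | grep -A1 'Subject Alternative Name' \
        | grep -qF "$2"
}
```

For example, `cert_has_san /etc/kubernetes/pki/apiserver.crt "IP Address:172.16.10.138" && echo present`.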

Create the service

-----------------------------------

# Configuration of the Kubernetes services

# vi /usr/lib/systemd/system/kube-apiserver.service

# /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/etc/kubernetes/apiserver

ExecStart=/usr/bin/kube-apiserver $KUBE_API_ARGS

Restart=always

[Install]

WantedBy=multi-user.target

-----------------------------------------

Service configuration file

#vi /etc/kubernetes/apiserver

# /etc/kubernetes/apiserver

# --service-account-signing-key-file and --service-account-issuer are required on 1.20 and later.

KUBE_API_ARGS="--secure-port=6443 \

--tls-cert-file=/etc/kubernetes/pki/apiserver.crt \

--tls-private-key-file=/etc/kubernetes/pki/apiserver.key \

--client-ca-file=/etc/kubernetes/pki/ca.crt \

--service-account-key-file=/etc/kubernetes/pki/ca.key \

--service-account-signing-key-file=/etc/kubernetes/pki/ca.key \

--service-account-issuer=https://kubernetes.default.svc.cluster.local \

--apiserver-count=3 --endpoint-reconciler-type=master-count \

--etcd-servers=https://172.16.10.138:2379,https://172.16.10.137:2379,https://172.16.10.139:2379 \

--etcd-cafile=/etc/kubernetes/pki/ca.crt \

--etcd-certfile=/etc/etcd/pki/etcd_client.crt \

--etcd-keyfile=/etc/etcd/pki/etcd_client.key \

--service-cluster-ip-range=169.169.0.0/16 \

--service-node-port-range=30000-32767 \

--allow-privileged=true \

--logtostderr=false --log-dir=/var/log/kubernetes --v=0"

Start the service

systemctl start kube-apiserver && systemctl enable kube-apiserver

systemctl status kube-apiserver

journalctl -u kube-apiserver

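Test the API server. Any JSON response, even the 401 Unauthorized below, shows that the server is running and answering over TLS: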

[root@k8s01 bin]# curl --insecure https://172.16.10.138:6443/

{

"kind": "Status",

"apiVersion": "v1",

"metadata": {

},

"status": "Failure",

"message": "Unauthorized",

"reason": "Unauthorized",

"code": 401

}

Problems encountered

-------------Problem----------------------

journalctl -u kube-apiserver

Aug 12 11:36:01 k8s01 kube-apiserver[565796]: Flag --insecure-port has been deprecated, This flag has no effect now and will be removed in v>

Aug 12 11:36:01 k8s01 kube-apiserver[565796]: Error: [service-account-issuer is a required flag, --service-account-signing-key-file and --service-account-issuer are required flags]

Aug 12 11:36:01 k8s01 systemd[1]: kube-apiserver.service: Main process exited, code=exited, status=1/FAILURE

Reference

https://blog.51cto.com/u_13053917/2596613 High-availability Kubernetes cluster installation (binary installation, v1.20.2)

Create the kubeconfig

---------------------

#vi /etc/kubernetes/kubeconfig

apiVersion: v1
kind: Config
clusters:
- name: default
  cluster:
    server: https://172.16.10.138:6443
    certificate-authority: /etc/kubernetes/pki/ca.crt
users:
- name: admin
  user:
    client-certificate: /etc/kubernetes/pki/client.crt
    client-key: /etc/kubernetes/pki/client.key
contexts:
- context:
    cluster: default
    user: admin
  name: default
current-context: default

--------------------Test

[root@k8s01 bin]# kubectl --kubeconfig=/etc/kubernetes/kubeconfig cluster-info

Kubernetes control plane is running at https://172.16.10.138:6443

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

[root@k8s01 bin]# kubectl --kubeconfig=/etc/kubernetes/kubeconfig get all

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 169.169.0.1 <none> 443/TCP 26m
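Every kubectl call in this article passes `--kubeconfig` explicitly. kubectl also honors the `KUBECONFIG` environment variable, so exporting it once (for example from the shell profile) shortens the later commands. This is optional and does not change the configuration itself.

```shell
# Point kubectl at the kubeconfig created above for this shell session.
export KUBECONFIG=/etc/kubernetes/kubeconfig
```

With the variable set, `kubectl cluster-info` and `kubectl get all` are equivalent to the long forms shown above.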

Configure kube-controller-manager

#vi /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/etc/kubernetes/controller-manager

ExecStart=/usr/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_ARGS

Restart=always

[Install]

WantedBy=multi-user.target

#vi /etc/kubernetes/controller-manager

KUBE_CONTROLLER_MANAGER_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig \

--leader-elect=true \

--service-cluster-ip-range=169.169.0.0/16 \

--service-account-private-key-file=/etc/kubernetes/pki/apiserver.key \

--root-ca-file=/etc/kubernetes/pki/ca.crt \

--log-dir=/var/log/kubernetes --logtostderr=false --v=0"

systemctl start kube-controller-manager && systemctl enable kube-controller-manager

systemctl status kube-controller-manager

Configure kube-scheduler

#vi /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/etc/kubernetes/scheduler

ExecStart=/usr/bin/kube-scheduler $KUBE_SCHEDULER_ARGS

Restart=always

[Install]

WantedBy=multi-user.target

#vi /etc/kubernetes/scheduler

KUBE_SCHEDULER_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig \

--leader-elect=true \

--logtostderr=false --log-dir=/var/log/kubernetes --v=0"

systemctl start kube-scheduler && systemctl enable kube-scheduler

systemctl status kube-scheduler

8. Start the node services

Start kubelet on all three nodes, changing the IP address for each node.

#vi /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet Server

Documentation=https://github.com/kubernetes/kubernetes

After=docker.service

[Service]

EnvironmentFile=/etc/kubernetes/kubelet

ExecStart=/usr/bin/kubelet $KUBELET_ARGS

Restart=always

[Install]

WantedBy=multi-user.target

#vi /etc/kubernetes/kubelet

KUBELET_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig --config=/etc/kubernetes/kubelet.config \

--hostname-override=172.16.10.138 \

--network-plugin=cni \

--logtostderr=false --log-dir=/var/log/kubernetes --v=0"

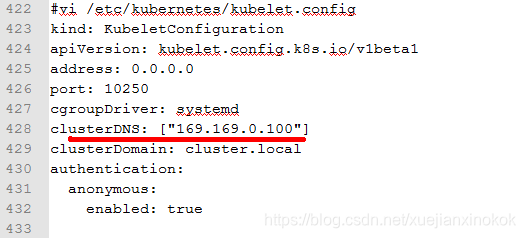

#vi /etc/kubernetes/kubelet.config

kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 0.0.0.0
port: 10250
cgroupDriver: systemd
clusterDNS: ["169.169.0.100"]
clusterDomain: cluster.local
authentication:
  anonymous:
    enabled: true

systemctl enable kubelet && systemctl start kubelet && systemctl status kubelet

Start kube-proxy on all three nodes, changing the IP address for each node.

#vi /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Kube-Proxy Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

EnvironmentFile=/etc/kubernetes/proxy

ExecStart=/usr/bin/kube-proxy $KUBE_PROXY_ARGS

Restart=always

[Install]

WantedBy=multi-user.target

#vi /etc/kubernetes/proxy

KUBE_PROXY_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig \

--hostname-override=172.16.10.138 \

--proxy-mode=iptables \

--logtostderr=false --log-dir=/var/log/kubernetes --v=0"

systemctl enable kube-proxy && systemctl start kube-proxy && systemctl status kube-proxy

kubectl --kubeconfig=/etc/kubernetes/kubeconfig get all

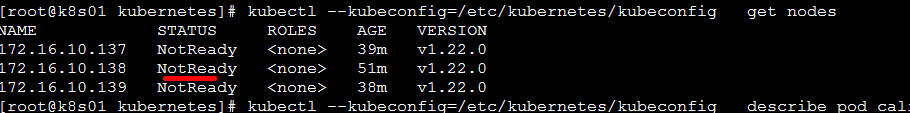

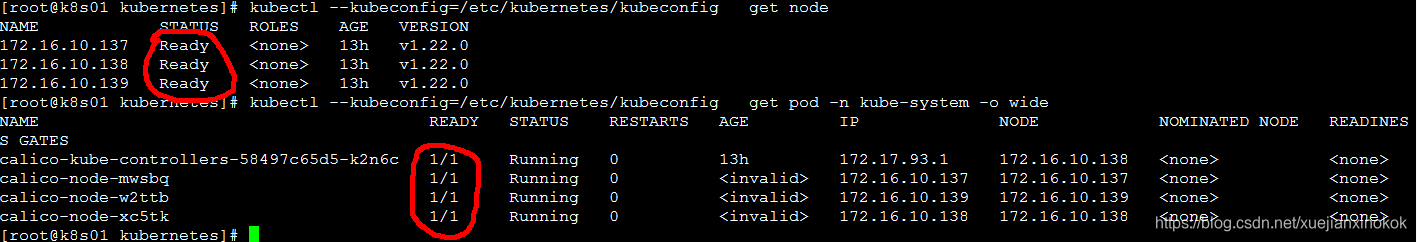

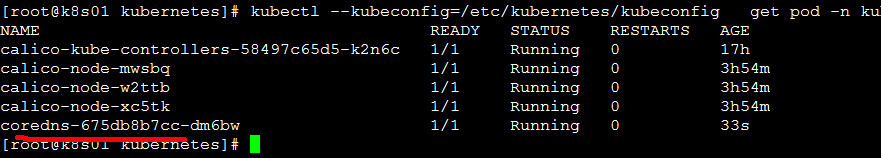
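The kubelet and kube-proxy environment files differ between nodes only in the `--hostname-override` value. A sketch that renders both files for a given node IP follows; the function names are illustrative, and the flags are copied unchanged from the listings above.

```shell
# render_kubelet_env IP: print the contents of /etc/kubernetes/kubelet for one node.
render_kubelet_env() {
    cat <<EOF
KUBELET_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig --config=/etc/kubernetes/kubelet.config \\
--hostname-override=$1 \\
--network-plugin=cni \\
--logtostderr=false --log-dir=/var/log/kubernetes --v=0"
EOF
}

# render_proxy_env IP: print the contents of /etc/kubernetes/proxy for one node.
render_proxy_env() {
    cat <<EOF
KUBE_PROXY_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig \\
--hostname-override=$1 \\
--proxy-mode=iptables \\
--logtostderr=false --log-dir=/var/log/kubernetes --v=0"
EOF
}
```

On k8s02, for instance, `render_kubelet_env 172.16.10.137 > /etc/kubernetes/kubelet` and `render_proxy_env 172.16.10.137 > /etc/kubernetes/proxy` would write the two files before the services are started.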

9. Configure networking

-- Download the Calico manifest

wget https://docs.projectcalico.org/manifests/calico.yaml

kubectl --kubeconfig=/etc/kubernetes/kubeconfig apply -f calico.yaml

kubectl --kubeconfig=/etc/kubernetes/kubeconfig get all -n kube-system

kubectl --kubeconfig=/etc/kubernetes/kubeconfig describe pod calico-kube-controllers-58497c65d5-k2n6c -n kube-system

If a pod stays in the Pending state for a long time, describe it to find out why:

[root@k8s01 kubernetes]# kubectl --kubeconfig=/etc/kubernetes/kubeconfig describe pod calico-node-nc8hz -n kube-system

The cause was that the pause image could not be pulled; pull it from a mirror and retag it:

docker pull registry.aliyuncs.com/google_containers/pause:3.5

docker tag registry.aliyuncs.com/google_containers/pause:3.5 k8s.gcr.io/pause:3.5
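Each node that runs pods needs the pause image locally, so the pull and retag have to be repeated on all three machines. A small wrapper, sketched here with the illustrative name `mirror_pause_image`, keeps the two commands together.

```shell
# mirror_pause_image [TAG]: pull pause from the Aliyun mirror and retag it
# under the k8s.gcr.io name that the kubelet asks for. TAG defaults to 3.5.
mirror_pause_image() {
    tag=${1:-3.5}
    src=registry.aliyuncs.com/google_containers/pause:${tag}
    dst=k8s.gcr.io/pause:${tag}
    docker pull "$src" && docker tag "$src" "$dst"
}
```

Run `mirror_pause_image` on k8s01, k8s02, and k8s03; the pending pods should then be able to start.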

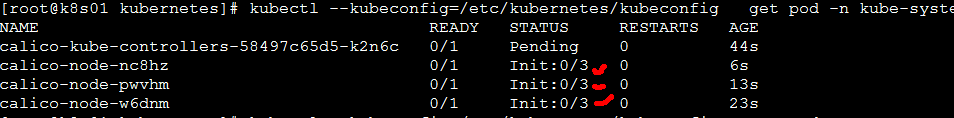

The calico-node pod on one node was not READY.

Enter the problem pod to investigate:

kubectl --kubeconfig=/etc/kubernetes/kubeconfig exec -it calico-node-pt8kn -n kube-system -- bash

Inside the pod:

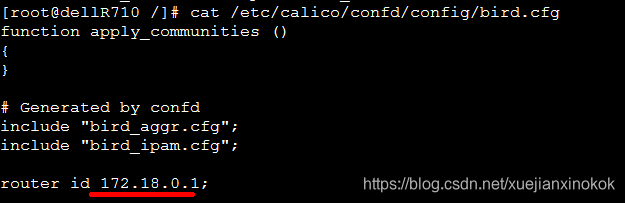

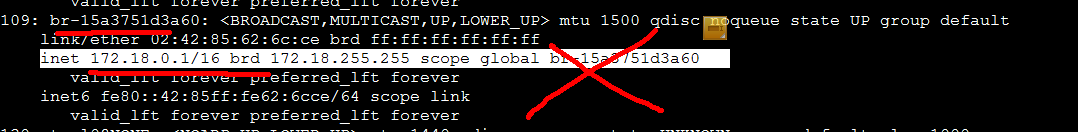

[root@dellR710 /]# cat /etc/calico/confd/config/bird.cfg

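The IP shown above is a bridge IP. Calico's BGP uses a physical device (a NIC) as a virtual router to implement routing.

It is almost certain that the problem is caused by Calico on node 139 detecting the wrong NIC for BGP.

Specify multiple interfaces: my NICs are named eno1, eno2, em1, em2, and so on, so several regular expressions are needed.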

# Specify interface
- name: IP_AUTODETECTION_METHOD
  value: "interface=eno.*,em.*"

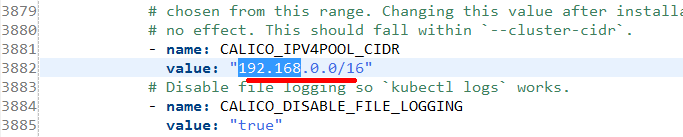
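As an alternative to editing calico.yaml and re-applying it, the same variable can be set on the running DaemonSet with `kubectl set env`. The wrapper below is a sketch; `set_calico_autodetect` is an illustrative name, and the default value writes the interface list as two regular expressions (`eno.*` and `em.*`).

```shell
# set_calico_autodetect [METHOD]: set IP_AUTODETECTION_METHOD on the
# calico-node DaemonSet, which triggers a rolling restart of its pods.
set_calico_autodetect() {
    method=$1
    [ -n "$method" ] || method='interface=eno.*,em.*'
    kubectl --kubeconfig=/etc/kubernetes/kubeconfig -n kube-system \
        set env daemonset/calico-node "IP_AUTODETECTION_METHOD=${method}"
}
```

Calling `set_calico_autodetect` with no argument applies the default pattern list; pass a different method string if the NIC names differ.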

Change the pod IP address range

References for the network problems

https://blog.csdn.net/samginshen/article/details/105424652 Kubernetes network error: resolving "calico/node is not ready: BIRD is not ready: BGP not established"

https://blog.csdn.net/JH200811/article/details/115872480 Calico network troubleshooting (calico/node is not ready: BIRD is not ready)

https://github.com/projectcalico/calico/issues/2561 calico/node is not ready: BIRD is not ready: BGP not established (Calico 3.6 / k8s 1.14.1) #2561

https://blog.csdn.net/vah101/article/details/110234028 Calico configuration with multiple network interfaces

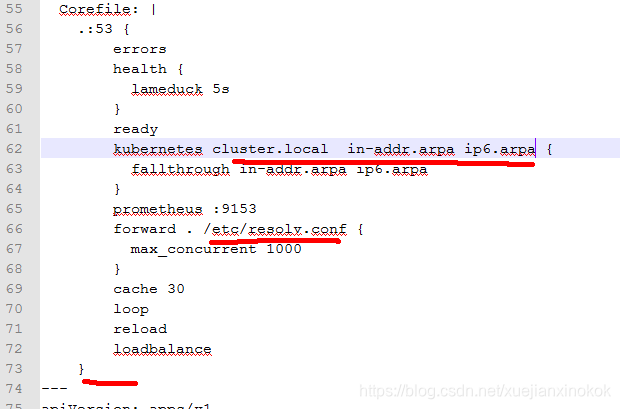

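10. Configure CoreDNS

Download

https://raw.githubusercontent.com/coredns/deployment/master/kubernetes/coredns.yaml.sed

Save it as coredns.yaml.

Because clusterDNS is set to 169.169.0.100 in the kubelet configuration, the kube-dns Service in coredns.yaml must use the same clusterIP.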

kubectl --kubeconfig=/etc/kubernetes/kubeconfig apply -f coredns.yaml

[root@k8s01 kubernetes]# kubectl --kubeconfig=/etc/kubernetes/kubeconfig logs coredns-675db8b7cc-d4nd9 -n kube-system

/etc/coredns/Corefile:18 - Error during parsing: Unknown directive '}STUBDOMAINS'

[root@k8s01 kubernetes]# kubectl --kubeconfig=/etc/kubernetes/kubeconfig logs coredns-675db8b7cc-7bbjb -n kube-system

plugin/forward: not an IP address or file: "UPSTREAMNAMESERVER"

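Applying the template unmodified produces the errors above, because the placeholders STUBDOMAINS and UPSTREAMNAMESERVER have not been replaced. Modify the file as follows (the changed parts were highlighted in red in the original post):

--------------------------------------------Full YAML---------------------------------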

apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
rules:
  - apiGroups:
    - ""
    resources:
    - endpoints
    - services
    - pods
    - namespaces
    verbs:
    - list
    - watch
  - apiGroups:
    - discovery.k8s.io
    resources:
    - endpointslices
    verbs:
    - list
    - watch

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system

---

apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        health {
          lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
          fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        forward . /etc/resolv.conf {
          max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/name: "CoreDNS"
spec:
  # replicas: not specified here:
  # 1. Default is 1.
  # 2. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      tolerations:
        - key: "CriticalAddonsOnly"
          operator: "Exists"
      nodeSelector:
        kubernetes.io/os: linux
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                  - key: k8s-app
                    operator: In
                    values: ["kube-dns"]
              topologyKey: kubernetes.io/hostname
      containers:
      - name: coredns
        image: coredns/coredns:1.8.4
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
          readOnly: true
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /ready
            port: 8181
            scheme: HTTP
      dnsPolicy: Default
      volumes:
        - name: config-volume
          configMap:
            name: coredns
            items:
            - key: Corefile
              path: Corefile

---

apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 169.169.0.100
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
  - name: metrics
    port: 9153
    protocol: TCP

-------------------------------------------------------------------------------------------
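The manual edits to the downloaded template can also be scripted with sed. The sketch below assumes the template uses the placeholders CLUSTER_DNS_IP, CLUSTER_DOMAIN, REVERSE_CIDRS, UPSTREAMNAMESERVER, and STUBDOMAINS; the last two appear in the error messages above, while the other three are my recollection of the upstream file and should be confirmed with grep against your copy. `render_coredns` is an illustrative name.

```shell
# render_coredns TEMPLATE: print the manifest with the placeholders
# replaced by the values used in this cluster.
render_coredns() {
    sed -e 's|CLUSTER_DNS_IP|169.169.0.100|g' \
        -e 's|CLUSTER_DOMAIN|cluster.local|g' \
        -e 's|REVERSE_CIDRS|in-addr.arpa ip6.arpa|g' \
        -e 's|UPSTREAMNAMESERVER|/etc/resolv.conf|g' \
        -e 's|STUBDOMAINS||g' \
        "$1"
}
```

For example, `render_coredns coredns.yaml.sed > coredns.yaml`, followed by the `kubectl apply` shown earlier.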

11. Test

Save the following manifest as nginx.yaml:

-------------------------------------------------

apiVersion: v1
kind: ReplicationController
metadata:
  name: nginx-controller
spec:
  replicas: 2
  selector:
    name: nginx
  template:
    metadata:
      labels:
        name: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80

---

apiVersion: v1
kind: Service
metadata:
  name: nginx-service-nodeport
spec:
  ports:
  - port: 80
    targetPort: 80
    nodePort: 30001
    protocol: TCP
  type: NodePort
  selector:
    name: nginx

-------------------------------------------------------

kubectl --kubeconfig=/etc/kubernetes/kubeconfig apply -f nginx.yaml

Test from inside a pod

[root@k8s01 kubernetes]# kubectl --kubeconfig=/etc/kubernetes/kubeconfig exec -it nginx-controller-8g4vw -- /bin/bash

root@nginx-controller-8g4vw:/# curl http://nginx-service-nodeport

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

Test access from a browser, for example at http://172.16.10.138:30001 (the NodePort defined in the Service).
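The NodePort can also be checked from the command line on every node at once, since kube-proxy runs on all three. A minimal sketch with curl follows; `check_nodeport` is an illustrative name, and the IPs and default port are the ones used in this article.

```shell
# check_nodeport [PORT]: request the NodePort on each node and report the
# HTTP status. Returns non-zero if any node does not answer with 200.
check_nodeport() {
    port=${1:-30001}
    rc=0
    for ip in 172.16.10.138 172.16.10.137 172.16.10.139; do
        code=$(curl -s -o /dev/null -m 5 -w '%{http_code}' "http://${ip}:${port}/")
        printf '%s:%s -> HTTP %s\n' "$ip" "$port" "$code"
        [ "$code" = "200" ] || rc=1
    done
    return $rc
}
```

Running `check_nodeport` prints one line per node; a node that cannot be reached is reported with status 000.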

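Copyright notice: this is an original article by CSDN blogger "xuejianxinokok", licensed under CC 4.0 BY-SA. When reposting, please include the link to the original source and this notice.

Original link: https://blog.csdn.net/xuejianxinokok/article/details/119604432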
