The Ultimate Architecture for an ELK Logging System

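- 1. The ultimate ELK architecture diagram

- 2. Deploying the ultimate ELK architecture

- 2.2. Deploy two redis instances

- 2.3. Configure nginx layer-4 load balancing

- 2.4. Configure keepalived for high availability

- 2.5. Take down redis01 and check whether traffic fails over to redis02

- 2.6. Configure filebeat to ship logs to the highly available redis cluster

- 2.7. Generate logs and check whether the key appears in redis

- 2.8. Configure logstash to read from the highly available redis cluster

- 2.9. Start logstash and check whether indices appear in ES

- 3. Associate the ES indices in kibana and view the logs

- 3.1. Associate the ES indices

- 3.2. View the collected log data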

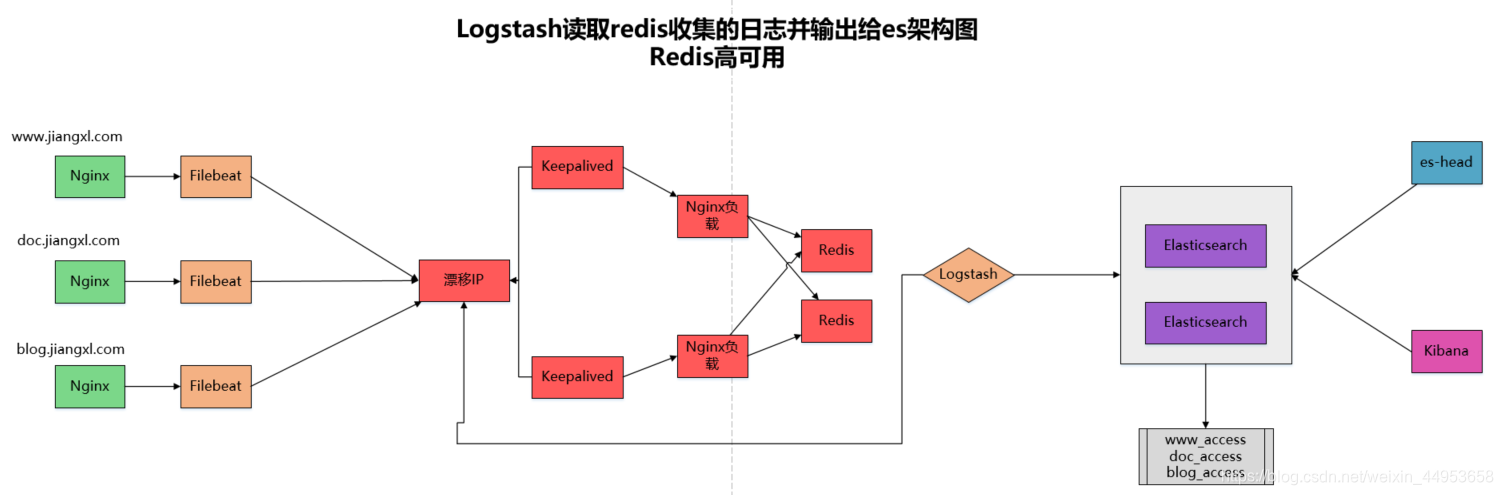

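1. The ultimate ELK architecture diagram

The architecture closest to the ultimate one has logstash read the collected logs from redis and store them in ES. It has one flaw: if redis goes down, we can no longer collect logs.

The redis single point of failure could be solved with clustering. However, of the three redis cluster modes, filebeat can write only to master-replica replication and not to the other two. Master-replica replication has its own drawback: if the master goes down, the replica has to be promoted by hand with a command before it becomes readable and writable.

filebeat does support writing to a kafka cluster, but since we are less familiar with kafka, we stick with redis here.

Instead, we can set up two standalone redis instances and turn them into a highly available cluster with nginx layer-4 load balancing plus keepalived. When one redis fails, the other takes over its work. While redis01 is active, redis02 stays in backup mode, so no data is written to redis02. This keeps the data consistent and avoids duplicates.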
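The active/backup rule described above can be sketched as a small decision function. This only illustrates the selection rule and is not how nginx implements it; the function name `select_backend` and the `up`/`down` status strings are hypothetical.

```shell
#!/bin/sh
# Illustrative only: mimic the active/backup rule described above.
# $1 is the health of redis01, $2 the health of redis02 ("up" or "down").
select_backend() {
    if [ "$1" = "up" ]; then
        echo "redis01"      # the primary serves all traffic while healthy
    elif [ "$2" = "up" ]; then
        echo "redis02"      # the backup serves only when the primary is down
    else
        echo "none"         # both down: log shipping stalls
        return 1
    fi
}
```

Because redis02 is never chosen while redis01 is healthy, a given log event lands on exactly one instance.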

Ultimate ELK architecture diagram

2. Deploying the ultimate ELK architecture

| IP | Services |
|---|---|
| 192.168.81.210 | es+kibana+logstash |
| 192.168.81.210 | nginx+keepalived+redis |
| 192.168.81.220 | es+kibana |
| 192.168.81.220 | nginx+keepalived+redis |
| 192.168.81.230 | filebeat+nginx |

2.2. Deploy two redis instances

Configuration on 192.168.81.210

1. Install redis (the EPEL repository provides a redis RPM package)

[root@elasticsearch ~]# yum -y install redis

2. Start redis

[root@elasticsearch ~]# systemctl start redis

[root@elasticsearch ~]# systemctl enable redis

3. Check the listening port

[root@elasticsearch ~]# netstat -lnpt | grep redis

tcp 0 0 127.0.0.1:6379 0.0.0.0:* LISTEN 94345/redis-server

4. Log in to redis

[root@elasticsearch ~]# redis-cli

127.0.0.1:6379>

5. Configure redis to accept connections from any host

[root@elasticsearch ~]# vim /etc/redis.conf

bind 0.0.0.0

[root@elasticsearch ~]# systemctl restart redis

6. Create a marker key so this instance is easy to identify (used in the final test)

[root@elasticsearch ~]# redis-cli

127.0.0.1:6379> set redis01 192.168.81.210

OK

Configuration on 192.168.81.220

1. Install redis (the EPEL repository provides a redis RPM package)

[root@node-2 ~]# yum -y install redis

2. Start redis

[root@node-2 ~]# systemctl start redis

[root@node-2 ~]# systemctl enable redis

3. Check the listening port

[root@node-2 ~]# netstat -lnpt | grep redis

tcp 0 0 127.0.0.1:6379 0.0.0.0:* LISTEN 94345/redis-server

4. Log in to redis

[root@node-2 ~]# redis-cli

127.0.0.1:6379>

5. Configure redis to accept connections from any host

[root@node-2 ~]# vim /etc/redis.conf

bind 0.0.0.0

[root@node-2 ~]# systemctl restart redis

6. Create a marker key so this instance is easy to identify (used in the final test)

[root@node-2 ~]# redis-cli

127.0.0.1:6379> set redis02 192.168.81.220

OK
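Once both instances bind to 0.0.0.0, one way to confirm that each is reachable from the other machine is to send a PING over the network. The sketch below assumes `redis-cli` is on the PATH; the function name `redis_reachable` and the `REDIS_CLI` override variable are hypothetical helpers, not part of redis.

```shell
#!/bin/sh
# REDIS_CLI may be overridden; it defaults to the redis-cli binary on PATH.
REDIS_CLI="${REDIS_CLI:-redis-cli}"

# Succeeds when the instance at host $1, port $2 answers PING with PONG.
redis_reachable() {
    reply=$("$REDIS_CLI" -h "$1" -p "$2" ping 2>/dev/null) || return 1
    [ "$reply" = "PONG" ]
}
```

For example, running `redis_reachable 192.168.81.220 6379 && echo reachable` on 192.168.81.210 would show whether the bind change took effect on the second node.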

2.3. Configure nginx layer-4 load balancing

Configuration on 192.168.81.210

[root@elasticsearch ~]# vim /etc/nginx/nginx.conf

stream {

upstream redis {

server 192.168.81.210:6379 max_fails=2 fail_timeout=10s;

server 192.168.81.220:6379 max_fails=2 fail_timeout=10s backup;

}

server {

listen 6378;

proxy_connect_timeout 1s;

proxy_timeout 3s;

proxy_pass redis;

}

}

[root@elasticsearch ~]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

[root@elasticsearch ~]# systemctl restart nginx

Configuration on 192.168.81.220

[root@node-2 ~]# vim /etc/nginx/nginx.conf

stream {

upstream redis {

server 192.168.81.210:6379 max_fails=2 fail_timeout=10s;

server 192.168.81.220:6379 max_fails=2 fail_timeout=10s backup;

}

server {

listen 6378;

proxy_connect_timeout 1s;

proxy_timeout 3s;

proxy_pass redis;

}

}

[root@node-2 ~]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

[root@node-2 ~]# systemctl restart nginx

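Test that the nginx load balancer works

Connecting through either load balancer IP reaches redis01, because redis02 is marked as backup. A typical high-availability setup places two load balancers behind keepalived.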

[root@elasticsearch ~]# redis-cli -h 192.168.81.210 -p 6378

192.168.81.210:6378> keys *

1) "redis01"

[root@elasticsearch ~]# redis-cli -h 192.168.81.220 -p 6378

192.168.81.220:6378> keys *

1) "redis01"
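The marker keys created in section 2.2 make it easy to script this check. The sketch below prints whichever marker key is visible through a given endpoint; the function name `active_backend` and the `REDIS_CLI` override variable are hypothetical, and the `redis0*` pattern assumes the only matching keys are the `redis01` and `redis02` markers.

```shell
#!/bin/sh
# REDIS_CLI may be overridden; it defaults to the redis-cli binary on PATH.
REDIS_CLI="${REDIS_CLI:-redis-cli}"

# Prints the marker key (redis01 or redis02) visible through endpoint $1:$2.
active_backend() {
    "$REDIS_CLI" -h "$1" -p "$2" keys 'redis0*' | head -n 1
}
```

While the primary is healthy, `active_backend 192.168.81.210 6378` and `active_backend 192.168.81.220 6378` should both print `redis01`.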

2.4. Configure keepalived for high availability

Configuration on 192.168.81.210

[root@elasticsearch ~]# vim /etc/keepalived/keepalived.conf

global_defs {

router_id lb01

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 50

priority 150

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.81.211

}

}

[root@elasticsearch ~]# systemctl restart keepalived

[root@elasticsearch ~]# systemctl enable keepalived

Configuration on 192.168.81.220

[root@node-2 ~]# vim /etc/keepalived/keepalived.conf

global_defs {

router_id lb02

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 50

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.81.211

}

}

[root@node-2 ~]# systemctl restart keepalived

[root@node-2 ~]# systemctl enable keepalived

Test keepalived

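1. The floating IP should already be on the master node. keepalived attaches 192.168.81.211 to ens33, so the virbr0 filter captured below does not display it; inspect ens33 to see the VIP.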

[root@elasticsearch ~]# ip a |grep virbr0

3: virbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default qlen 1000

inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0

2. Log in to redis through the virtual IP

[root@elasticsearch ~]# redis-cli -h 192.168.81.211 -p 6378

192.168.81.211:6378> keys *

1) "redis01"

3. Stop keepalived on the master node and check whether the IP floats over

[root@elasticsearch ~]# systemctl stop keepalived

Check on the backup node: the IP has floated over successfully

[root@node-2 ~]# ip a | grep vir

3: virbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default qlen 1000

inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0

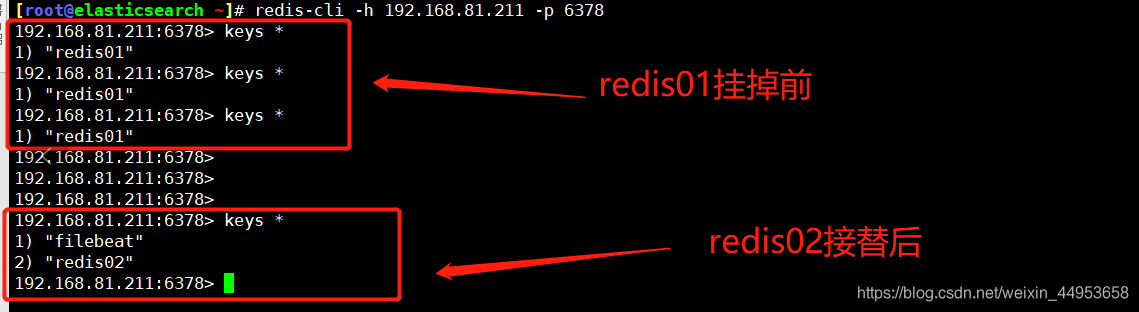
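To check directly which node currently holds the floating IP, one option is to search the full address list for the VIP itself. This is a minimal sketch; the function name `holds_vip` and the `IP_CMD` override variable are hypothetical, and it assumes the iproute2 `ip` command is installed.

```shell
#!/bin/sh
# IP_CMD may be overridden; it defaults to the iproute2 ip command.
IP_CMD="${IP_CMD:-ip}"

# Succeeds when IPv4 address $1 is configured on any local interface.
# The leading space and trailing slash stop 192.168.81.21 from matching 192.168.81.211.
holds_vip() {
    "$IP_CMD" -o -4 addr show | grep -qF " $1/"
}
```

Running `holds_vip 192.168.81.211 && echo "VIP is here"` on each node before and after stopping keepalived should show the address moving from the master to the backup.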

2.5. Take down redis01 and check whether traffic fails over to redis02

[root@elasticsearch ~]# redis-cli -h 192.168.81.211 -p 6378

192.168.81.211:6378> keys *

1) "redis01"

192.168.81.211:6378> keys *

1) "redis01"

192.168.81.211:6378> keys *

1) "redis01"

192.168.81.211:6378>

192.168.81.211:6378>

192.168.81.211:6378>

192.168.81.211:6378> keys *

1) "filebeat"

2) "redis02"

192.168.81.211:6378>

High availability works as intended: once redis01 goes down, redis02 takes over.

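2.6. Configure filebeat to ship logs to the highly available redis cluster

Only the redis address in the output needs to change: point it at the floating IP and at port 6378, where the nginx stream proxy listens.

[root@nginx ~]# vim /etc/filebeat/filebeat.yml

output.redis:

  hosts: ["192.168.81.211:6378"]

  key: "nginx-all-key"

  db: 0

  timeout: 5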

[root@nginx ~]# systemctl restart filebeat

2.7. Generate logs and check whether the key appears in redis

1. Generate logs

ab -c 100 -n 1000 http://www.jiangxl.com/

ab -c 100 -n 1000 http://bbs.jiangxl.com/

ab -c 100 -n 1000 http://blog.jiangxl.com/

2. Check whether the key has been created in redis

192.168.81.211:6378> keys *

1) "nginx-all-key" # the key has been created

2) "redis01"

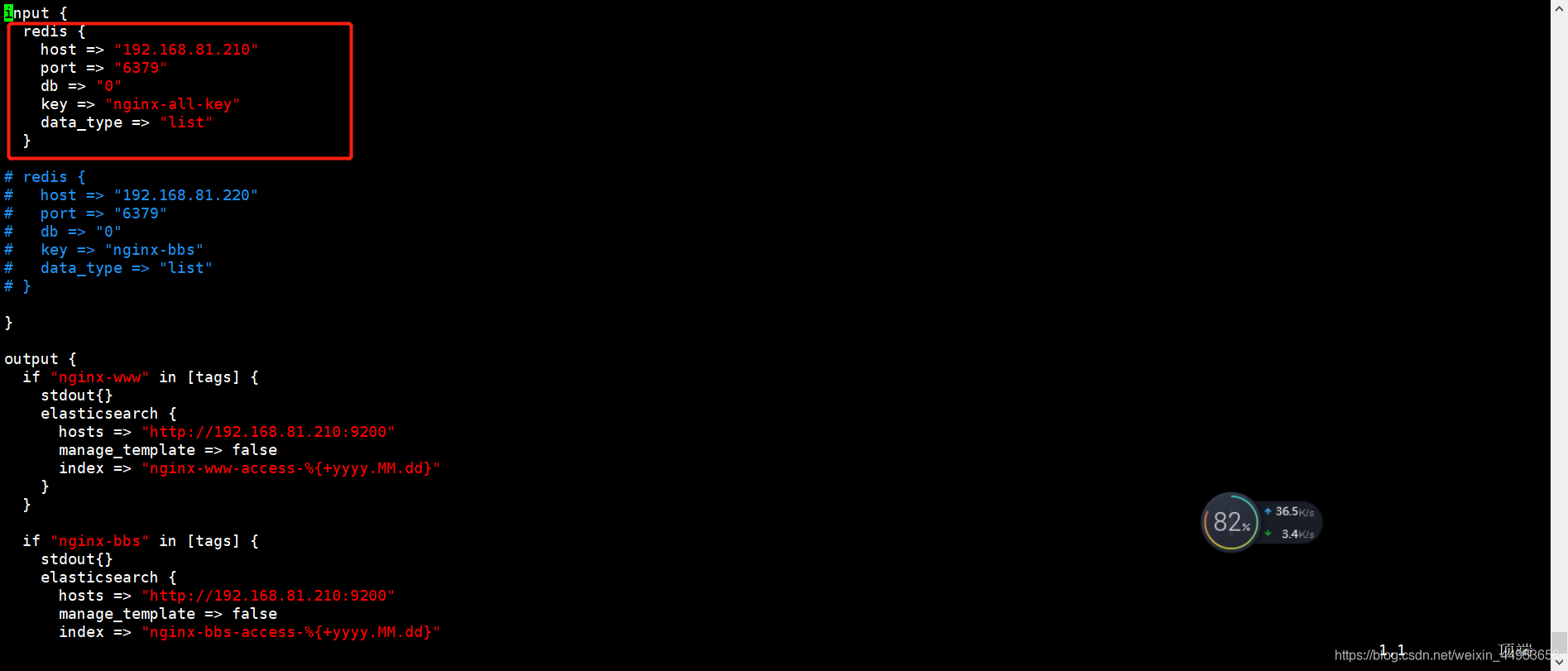
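Beyond checking that the key exists, it can help to see how many events are waiting in it. filebeat's redis output pushes events onto a list by default, so `LLEN` reports the backlog. The sketch below is a hypothetical helper (`queue_length`, `REDIS_CLI`) and assumes `redis-cli` is on the PATH.

```shell
#!/bin/sh
# REDIS_CLI may be overridden; it defaults to the redis-cli binary on PATH.
REDIS_CLI="${REDIS_CLI:-redis-cli}"

# Prints how many events are waiting in list $3 on the redis reachable at $1:$2.
queue_length() {
    "$REDIS_CLI" -h "$1" -p "$2" llen "$3"
}
```

For example, `queue_length 192.168.81.211 6378 nginx-all-key` should grow while `ab` is running and shrink once logstash starts consuming the list.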

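2.8. Configure logstash to read from the highly available redis cluster

[root@elasticsearch ~]# vim /etc/logstash/conf.d/redis.conf

input {

  redis {

    host => "192.168.81.211"

    port => 6378

    db => 0

    key => "nginx-all-key"

    data_type => "list"

  }

}

Only the redis address needs to change: point it at the floating IP 192.168.81.211 and the nginx proxy port 6378.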
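Before starting the pipeline it can be worth having logstash parse the file first. The sketch below wraps logstash's `--config.test_and_exit` flag; the function name `validate_pipeline` and the `LOGSTASH_BIN` override variable are hypothetical, and the default path is the one used elsewhere in this article.

```shell
#!/bin/sh
# LOGSTASH_BIN may be overridden; the default matches the path used in this article.
LOGSTASH_BIN="${LOGSTASH_BIN:-/usr/share/logstash/bin/logstash}"

# Parses pipeline file $1 and exits without starting the pipeline.
validate_pipeline() {
    "$LOGSTASH_BIN" -f "$1" --config.test_and_exit
}
```

A call such as `validate_pipeline /etc/logstash/conf.d/redis.conf` returns a non-zero status when the file has a syntax error.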

2.9. Start logstash and check whether indices appear in ES

[root@elasticsearch ~]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/redis.conf

Logstash output

Indices have now been created in ES

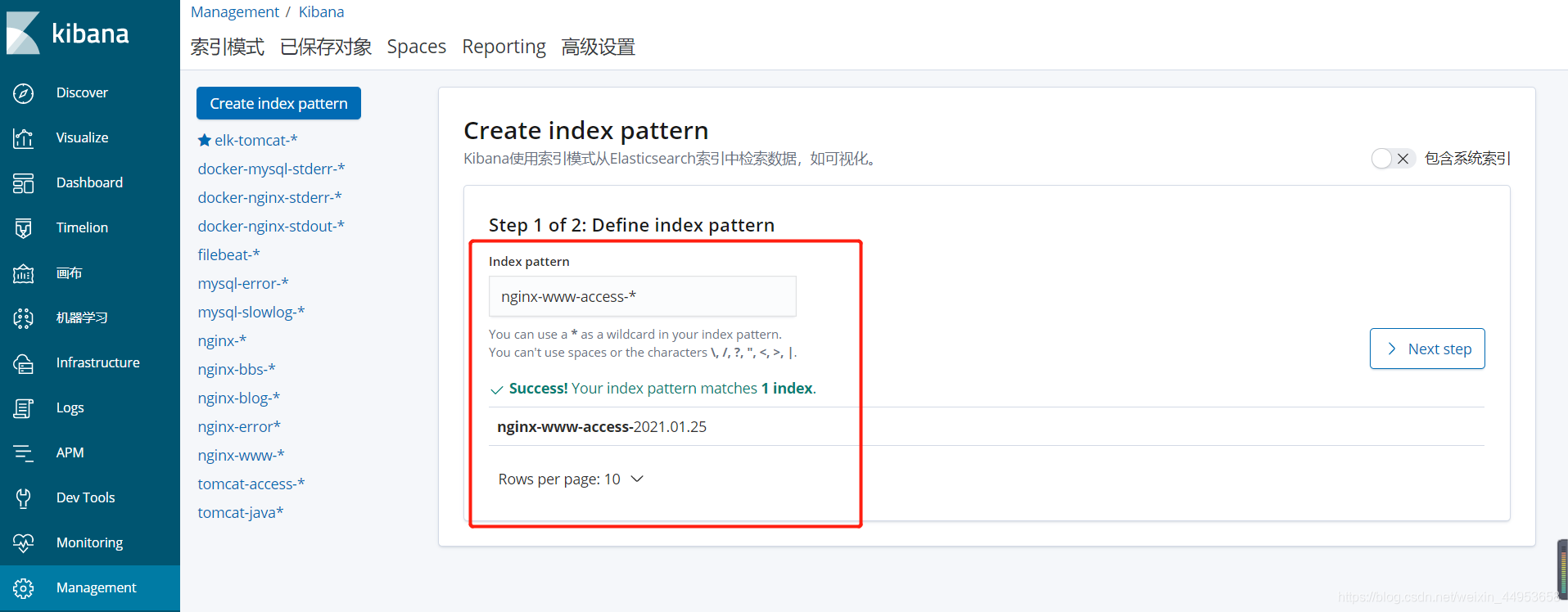
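The same check can be done from the command line with the `_cat/indices` API. This is a sketch under assumptions: the base URL (for instance `http://192.168.81.210:9200`, using the default ES HTTP port) is not stated in this article, and the function name `list_indices` and the `CURL` override variable are hypothetical.

```shell
#!/bin/sh
# CURL may be overridden; it defaults to the curl binary on PATH.
CURL="${CURL:-curl}"

# Prints the names of indices matching pattern $2 on the ES node at base URL $1.
list_indices() {
    "$CURL" -s "$1/_cat/indices/$2?h=index"
}
```

With the indices used in section 3, `list_indices http://192.168.81.210:9200 'nginx-*'` would be expected to list the nginx access indices, one per line.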

3. Associate the ES indices in kibana and view the logs

3.1. Associate the ES indices

nginx-www-access index

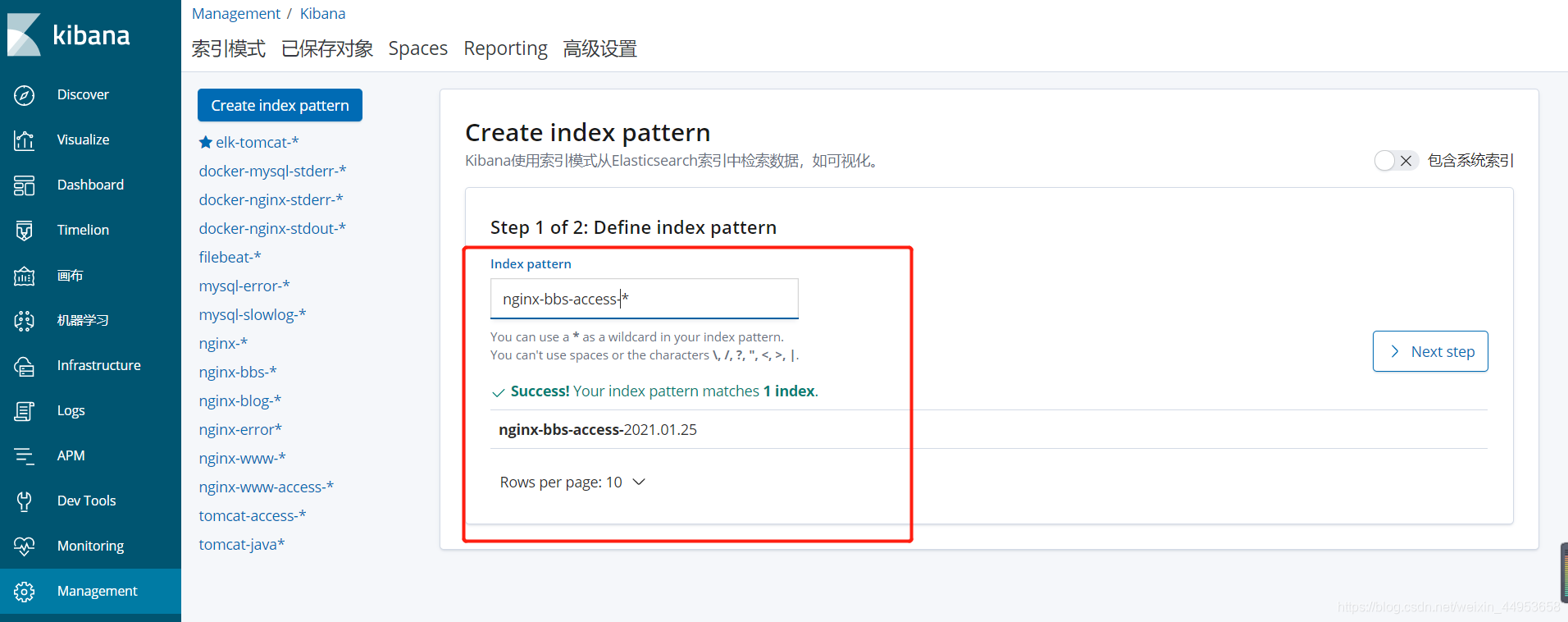

nginx-bbs-access index

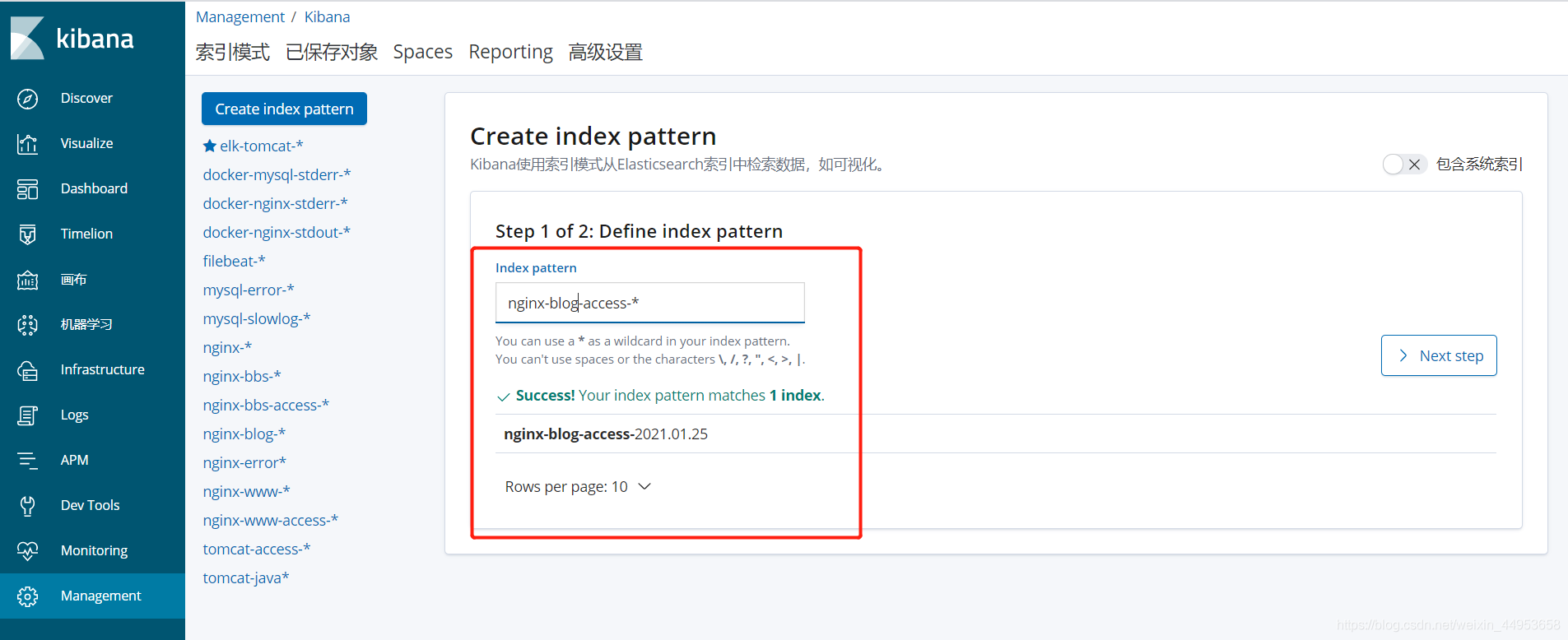

nginx-blog-access index

3.2. View the collected log data

nginx-www-access index

nginx-bbs-access index

nginx-blog-access index
