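Kubernetes PV Dynamic Provisioning (StorageClass)

The obvious drawback of static PV provisioning is its maintenance cost. Kubernetes therefore supports dynamic PV provisioning, implemented through the StorageClass object.

A StorageClass creates the PV for you: the PVC only has to name the StorageClass it wants to use. The StorageClass identifies which storage backend is used, for example NFS or Ceph. Not every storage backend supports StorageClass out of the box; some need a plugin.

Storage plugins that support dynamic provisioning: https://kubernetes.io/docs/concepts/storage/storage-classes/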

Provisioner

Each StorageClass has a provisioner that determines what volume plugin is used for provisioning PVs. This field must be specified.

| Volume Plugin | Internal Provisioner | Config Example |
|---|---|---|
| AWSElasticBlockStore | ✓ | AWS EBS |
| AzureFile | ✓ | Azure File |
| AzureDisk | ✓ | Azure Disk |
| CephFS | - | - |
| Cinder | ✓ | OpenStack Cinder |
| FC | - | - |
| FlexVolume | - | - |
| Flocker | ✓ | - |
| GCEPersistentDisk | ✓ | GCE PD |
| Glusterfs | ✓ | Glusterfs |
| iSCSI | - | - |
| Quobyte | ✓ | Quobyte |
| NFS | - | NFS |
| RBD | ✓ | Ceph RBD |
| VsphereVolume | ✓ | vSphere |
| PortworxVolume | ✓ | Portworx Volume |
| ScaleIO | ✓ | ScaleIO |
| StorageOS | ✓ | StorageOS |
| Local | - | Local |

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: example-nfs
    provisioner: example.com/external-nfs
    parameters:
      server: nfs-server.example.com
      path: /share
      readOnly: "false"

- server: Server is the hostname or IP address of the NFS server.
- path: Path that is exported by the NFS server.
- readOnly: A flag indicating whether the storage will be mounted as read only (default false).

Kubernetes doesn't include an internal NFS provisioner. You need to use an external provisioner to create a StorageClass for NFS. Here are some examples:

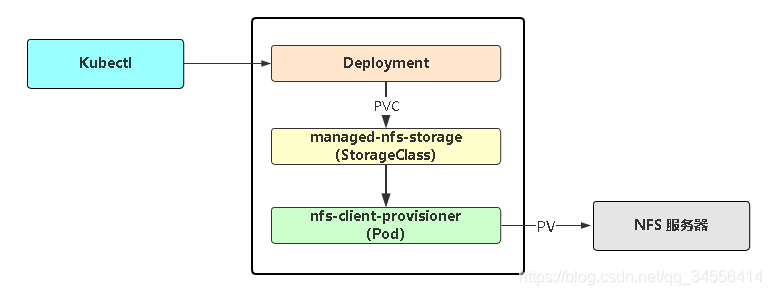

Flow diagram: dynamic PV provisioning based on NFS

    [root@k8s-master nfs-client]# ls
    class.yaml  deployment.yaml  rbac.yaml
    [root@k8s-master nfs-client]# kubectl apply -f .
    [root@k8s-master nfs-client]# cat deployment.yaml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nfs-client-provisioner
    ---
    kind: Deployment
    apiVersion: apps/v1
    metadata:
      name: nfs-client-provisioner
    spec:
      replicas: 1
      strategy:
        type: Recreate
      selector:
        matchLabels:
          app: nfs-client-provisioner
      template:
        metadata:
          labels:
            app: nfs-client-provisioner
        spec:
          serviceAccountName: nfs-client-provisioner
          containers:
            - name: nfs-client-provisioner
              image: quay.io/external_storage/nfs-client-provisioner:latest
              volumeMounts:
                - name: nfs-client-root
                  mountPath: /persistentvolumes
              env:
                # Must match the provisioner field of the StorageClass
                - name: PROVISIONER_NAME
                  value: fuseim.pri/ifs
                # NFS server address and exported directory; change to your own
                - name: NFS_SERVER
                  value: 192.168.179.102
                - name: NFS_PATH
                  value: /ifs/kubernetes
          volumes:
            - name: nfs-client-root
              nfs:
                server: 192.168.179.102
                path: /ifs/kubernetes

    [root@k8s-master ~]# kubectl get pod,svc
    NAME                                         READY   STATUS    RESTARTS   AGE
    pod/nfs-client-provisioner-855d45d4f-l5h9w   1/1     Running   0          4m58s

Deploying the NFS plugin that creates PVs automatically:

- git clone https://github.com/kubernetes-incubator/external-storage
- cd external-storage/nfs-client/deploy
- kubectl apply -f rbac.yaml # authorize access to the apiserver
- kubectl apply -f deployment.yaml # deploy the plugin; edit the NFS server address and shared directory in it first
- kubectl apply -f class.yaml # create the StorageClass

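From now on, a PVC's request for storage goes to the StorageClass, which hands it to the provisioner plugin deployed above. The plugin creates the PV automatically, so no PV has to be created by hand.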
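The walkthrough applies class.yaml without listing it, so here is a minimal sketch of what such a StorageClass might look like. The name `managed-nfs-storage` and the provisioner string `fuseim.pri/ifs` come from this article (the `storageClassName` in the PVC and the `PROVISIONER_NAME` in deployment.yaml). The value `archiveOnDelete: "true"` is an assumption chosen to match the archiving behavior described later; the file shipped in the repository may differ.

```yaml
# class.yaml (sketch; the actual file in the repository may differ)
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage    # the name PVCs reference in storageClassName
provisioner: fuseim.pri/ifs    # must equal PROVISIONER_NAME in deployment.yaml
parameters:
  archiveOnDelete: "true"      # assumption: keep an archived copy when the PVC is deleted
```

StorageClass parameter values are strings, which is why the flag is quoted.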

    [root@k8s-master ~]# cat deployment-sc.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: deployment-sc
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx-sc
      template:
        metadata:
          labels:
            app: nginx-sc
        spec:
          containers:
            - name: nginx
              image: nginx
              volumeMounts:
                - name: wwwroot
                  mountPath: /usr/share/nginx/html
              ports:
                - containerPort: 80
          volumes:
            - name: wwwroot
              persistentVolumeClaim:
                claimName: my-pvc
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: my-pvc
    spec:
      storageClassName: "managed-nfs-storage"
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 18Gi

    [root@k8s-master nfs-client]# kubectl get pv
    NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM            STORAGECLASS          REASON   AGE
    pvc-d033b273-a2c2-4c78-bd7e-074114a32ee6   18Gi       RWX            Delete           Bound    default/my-pvc   managed-nfs-storage            88s
    [root@k8s-master nfs-client]# kubectl get pvc
    NAME     STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS          AGE
    my-pvc   Bound    pvc-d033b273-a2c2-4c78-bd7e-074114a32ee6   18Gi       RWX            managed-nfs-storage   98s
    [root@k8s-master nfs-client]# kubectl get sc
    NAME                  PROVISIONER      RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
    managed-nfs-storage   fuseim.pri/ifs   Delete          Immediate           false                  4m
    [root@k8s-master nfs-client]# kubectl get pod -o wide
    NAME                                      READY   STATUS    RESTARTS   AGE     IP               NODE        NOMINATED NODE   READINESS GATES
    deployment-sc-c5d6db95-95xt4              1/1     Running   0          7m17s   10.244.36.77     k8s-node1   <none>           <none>
    deployment-sc-c5d6db95-g4vrh              1/1     Running   0          7m17s   10.244.169.141   k8s-node2   <none>           <none>
    deployment-sc-c5d6db95-g5cwx              1/1     Running   0          7m17s   10.244.169.140   k8s-node2   <none>           <none>
    nfs-client-provisioner-776769c5c7-2zx55   1/1     Running   0          8m16s   10.244.169.139   k8s-node2   <none>           <none>

The directory below is created on the NFS server by the plugin and provides the storage for this group of pods. Its name is the namespace, the PVC name and the PV name joined by hyphens.

    [root@reg ~]# cd /ifs/kubernetes/
    [root@reg kubernetes]# ls
    default-my-pvc-pvc-d033b273-a2c2-4c78-bd7e-074114a32ee6
    [root@reg kubernetes]# cd default-my-pvc-pvc-d033b273-a2c2-4c78-bd7e-074114a32ee6/
    [root@reg default-my-pvc-pvc-d033b273-a2c2-4c78-bd7e-074114a32ee6]# ls
    [root@reg default-my-pvc-pvc-d033b273-a2c2-4c78-bd7e-074114a32ee6]# echo "default my-mypvc" >> index.html
    [root@k8s-master nfs-client]# curl 10.244.169.140
    default my-mypvc

With the Delete reclaim policy, deleting a PVC also deletes the PV bound to it, and the data that PV held is cleaned up.

    [root@k8s-master nfs-client]# kubectl delete -f deployment-sc.yaml
    deployment.apps "deployment-sc" deleted
    persistentvolumeclaim "my-pvc" deleted
    [root@k8s-master nfs-client]# kubectl get pvc,pv
    No resources found

The data on the backend is not actually deleted: the plugin archives the old directory by renaming it with an archived- prefix. If you do not want the archive, set archiveOnDelete to "false" in the StorageClass parameters:

    [root@k8s-master nfs-client]# vim class.yaml
    archiveOnDelete: "false"

The archived directory on the NFS server:

    [root@reg ~]# cd /ifs/kubernetes/
    [root@reg kubernetes]# ls
    archived-default-my-pvc-pvc-d033b273-a2c2-4c78-bd7e-074114a32ee6
    [root@reg kubernetes]# ls archived-default-my-pvc-pvc-d033b273-a2c2-4c78-bd7e-074114a32ee6/
    index.html

Summary

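A PVC is matched to a PV by capacity and by access mode.

Questions you may have

Q: What is the relationship between a PV and a PVC?

A: One to one.

Q: How is a PVC matched to a PV?

A: By access mode and storage capacity.

Q: What is the capacity matching policy?

A: The PVC binds to the closest PV that satisfies the request, rounding upward: the smallest PV whose capacity is at least the requested size.

Q: Is the storage capacity really enforced as a limit?

A: Enforcement depends on the backend storage; the capacity field is used mainly for matching.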
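The upward capacity matching described in these answers concerns statically created PVs. As a hypothetical illustration, the sketch below defines two static PVs and one claim. All resource names, sizes and export paths are invented for the example, and the NFS server address is reused from the deployment earlier in this article. Under these assumptions, the 8Gi claim would be expected to bind to the 10Gi PV, since the 5Gi PV is too small.

```yaml
# Hypothetical static PVs; names, sizes and paths are illustrative only.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-5g
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteMany
  nfs:
    server: 192.168.179.102
    path: /ifs/kubernetes/pv-5g
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-10g
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteMany
  nfs:
    server: 192.168.179.102
    path: /ifs/kubernetes/pv-10g
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-8g
spec:
  storageClassName: ""    # empty string: bind to a static PV, skip dynamic provisioning
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 8Gi        # 5Gi is too small, so the 10Gi PV is the closest fit
```

Once bound, `kubectl get pvc` should report the capacity of the bound PV (10Gi here) rather than the 8Gi that was requested, which is one way to see that the field is used for matching and not as a quota.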
