ELK Logstash: The file Input Plugin

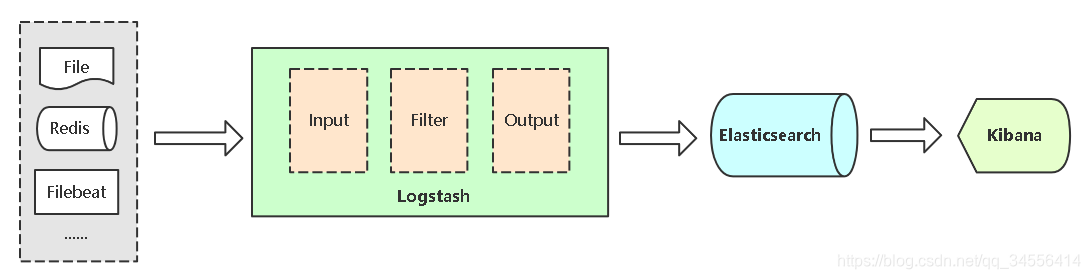

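Introduction to Logstash

- Input: where data comes from. Sources include Stdin, File, TCP, Redis, Syslog, and others.

- Filter: parses and formats the logs. There is a rich set of filter plugins: Grok (regex capture), Date (timestamp handling), JSON (encoding and decoding), Mutate (field modification), and others.

- Output: where data goes. Destinations include Stdout, File, TCP, Redis, Elasticsearch (ES), and others.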
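The three stages map directly onto the three sections of a pipeline configuration file. The following is a minimal sketch for trying this out on a terminal, not part of the original setup; the `source` field and its value `demo` are made up for illustration.

```conf
# Minimal three-stage pipeline (illustrative sketch)
input {
  stdin { }                                # Input: read events typed on the terminal
}
filter {
  mutate {
    add_field => { "source" => "demo" }    # hypothetical field, for illustration only
  }
}
output {
  stdout { codec => rubydebug }            # Output: pretty-print each event
}
```

Each line typed on the terminal becomes one event, which makes this layout handy for checking a filter before pointing it at real log files.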

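Input Plugins

The input stage determines where logs are read from. Commonly used plugins:

- Stdin (generally used for debugging)

- File

- Redis

- Beats (for example, Filebeat)

The File Input Plugin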

Description

Stream events from files, normally by tailing them in a manner similar to tail -0F but optionally reading them from the beginning.


Normally, logging will add a newline to the end of each line written. By default, each event is assumed to be one line and a line is taken to be the text before a newline character. If you would like to join multiple log lines into one event, you’ll want to use the multiline codec.

The plugin loops between discovering new files and processing each discovered file. Discovered files have a lifecycle: they start off in the "watched" or "ignored" state. Other states in the lifecycle are "active", "closed", and "unwatched".
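As a sketch of the multiline case mentioned above, the block below attaches the multiline codec to a file input so that indented continuation lines (for example, the lines of a stack trace) are appended to the preceding event. The path and the pattern are assumptions chosen for illustration; adapt them to the actual log format.

```conf
input {
  file {
    path => "/var/log/app/app.log"   # hypothetical path
    codec => multiline {
      pattern => "^\s"               # a line that starts with whitespace...
      what => "previous"             # ...belongs to the previous line's event
    }
  }
}
```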

exclude

- Value type is array

- There is no default value for this setting.

Exclusions (matched against the filename, not full path). Filename patterns are valid here, too. For example, if you have

    path => "/var/log/*"

In Tail mode, you might want to exclude gzipped files:

    exclude => "*.gz"

path

- This is a required setting.

- Value type is array

- There is no default value for this setting.

The path(s) to the file(s) to use as an input. You can use filename patterns here, such as /var/log/*.log. If you use a pattern like /var/log/**/*.log, a recursive search of /var/log will be done for all *.log files. Paths must be absolute and cannot be relative.

You may also configure multiple paths. See an example on the Logstash configuration page.
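Since path is an array, one way to configure multiple paths is to list them in a single file block. This is a minimal sketch; the two paths are only examples.

```conf
input {
  file {
    # One file block watching several paths; every entry must be absolute
    path => ["/var/log/messages", "/var/log/nginx/*.log"]
  }
}
```

Separate file blocks, as in the example that follows, are the better fit when each path needs its own settings such as type.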

For example, this input section configures two file inputs:

    input {
      file {
        path => "/var/log/messages"
        type => "syslog"
      }
      file {
        path => "/var/log/apache/access.log"
        type => "apache"
      }
    }

start_position

- Value can be any of: beginning, end

- Default value is "end"

Choose where Logstash starts initially reading files: at the beginning or at the end. The default behavior treats files like live streams and thus starts at the end. If you have old data you want to import, set this to beginning.

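One point worth keeping in mind when testing: start_position only applies to files Logstash has not seen before. Once a file's read position has been recorded in a sincedb file, Logstash resumes from that position and start_position has no effect. A common testing trick, sketched below under the assumption of a Linux host, is to point sincedb_path at /dev/null so that no position is persisted and the file is read from the beginning after every restart.

```conf
input {
  file {
    path => "/var/log/test.log"
    start_position => "beginning"
    sincedb_path => "/dev/null"   # testing only: do not persist read positions
  }
}
```

This is not something to carry into production, where it would cause every file to be ingested again after each restart.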

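Input Plugins: Common Configuration Fields

- add_field: adds a field to the event, at the top level of the event. Typically used to mark where a log comes from, for example which project or which application it belongs to.

- tags: adds any number of tags, used to mark other attributes of a log, for example whether it is an access log or an error log.

- type: adds a type field to every event handled by the input, for example to indicate the log type.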

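Input Plugin: File

The File plugin reads the specified log files. Commonly used fields:

- path: path to the log files; wildcards are allowed

- exclude: log files to exclude from collection

- start_position: where to start reading a log file. The default is the end; beginning reads from the start of the file

Example: read a log file and write the events to a file. To make testing easier, the output goes to a separate file.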

    input {
      file {
        path => "/var/log/test.log"
        start_position => "beginning"
      }
    }
    filter {
    }
    output {
      file {
        path => "/tmp/test.log"
      }
    }

Reload the Logstash configuration

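Sending SIGHUP to the running Logstash process makes it reload its configuration (11033 is the Logstash PID on this host):

    [root@localhost ~]# kill -HUP 11033

Check the result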

    [root@localhost ~]# echo "test file" >> /var/log/test.log
    [root@localhost ~]# tail -f /tmp/test.log
    {"@version":"1","host":"localhost.localdomain","path":"/var/log/test.log","@timestamp":"2020-12-10T13:28:41.191Z","tags":["_grokparsefailure","_geoip_lookup_failure"],"message":"test file"}
    {"@version":"1","host":"localhost.localdomain","path":"/var/log/test.log","@timestamp":"2020-12-10T13:28:41.242Z","tags":["_grokparsefailure","_geoip_lookup_failure"],"message":"test file"}

If you want to collect a whole directory rather than one specific file

[root@localhost ~]# cat /usr/local/logstash/conf.d/test.conf

input {

file {

path => "/var/log/*.log"

exclude => "error.log"

start_position => "beginning"

}

}

filter {

}

output {

elasticsearch {

hosts => ["192.168.179.102:9200"]

index => "test-%{+YYYY.MM.dd}"

}

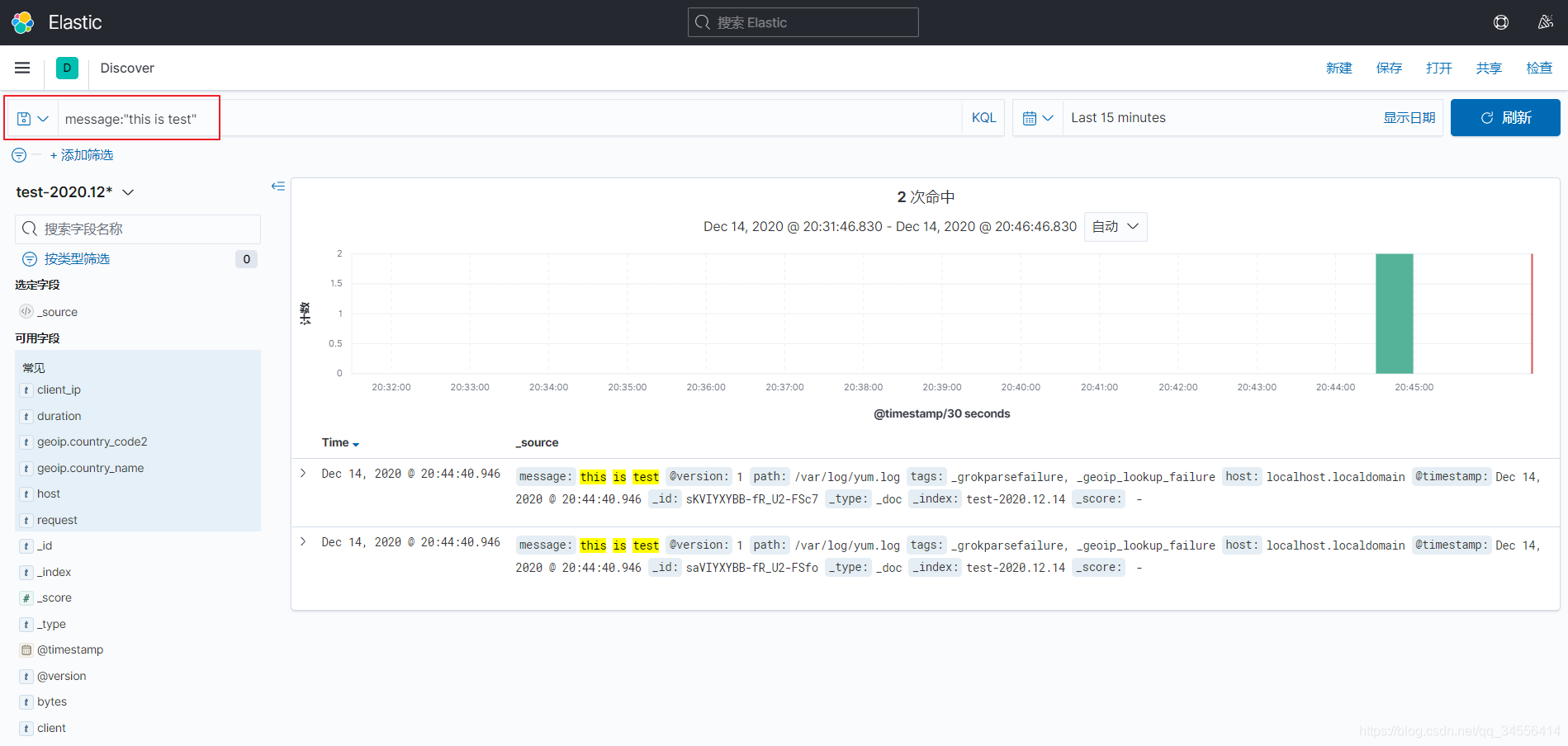

}测试一下,写条数据

[root@localhost ~]# echo "this is test" >> /var/log/yum.log
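Because exclude takes an array, several patterns can be listed at once. The sketch below is a variation on the configuration above, using the broader `/var/log/*` path pattern from the exclude description; the extra `*.gz` pattern is an assumption added for illustration (it skips rotated, gzip-compressed logs) and is not part of the original setup.

```conf
input {
  file {
    path => "/var/log/*"
    # Patterns are matched against the file name only, not the full path
    exclude => ["error.log", "*.gz"]
    start_position => "beginning"
  }
}
```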

Configuring Common Fields

The common fields described above (add_field, tags, and type) are used in this section to mark where each log comes from.

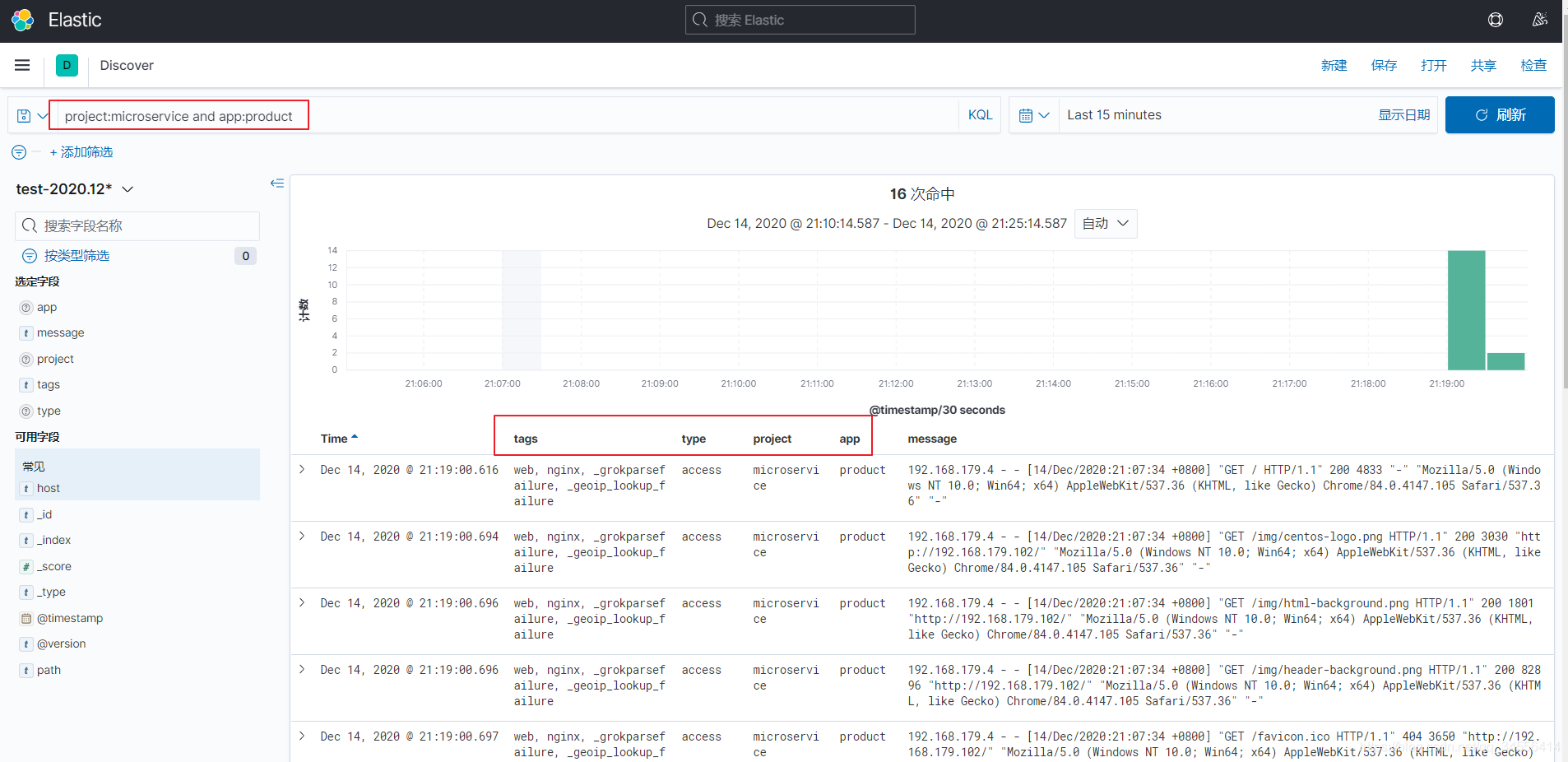

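When collecting logs there are usually multiple servers, each running multiple applications, so every log needs to be marked with its source.

Example: marking the log source

You can attach tags; a tag adds attribute information to an object. Any fields you add are also indexed into ES.

"project" => "microservice" sets the project name

"app" => "product" identifies the product service

In ES, you can then run exact-match queries on these two fields.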

    [root@localhost ~]# cat /usr/local/logstash/conf.d/test.conf
    input {
      file {
        path => "/var/log/nginx/*.log"
        exclude => "error.log"
        start_position => "beginning"
        # tags is an array, so both tags go in one setting
        tags => ["web", "nginx"]
        type => "access"
        # extra fields that identify the project and the application
        add_field => {
          "project" => "microservice"
          "app" => "product"
        }
      }
    }
    filter {
    }
    output {
      elasticsearch {
        hosts => ["192.168.179.102:9200"]
        index => "test-%{+YYYY.MM.dd}"
      }
    }

    [root@localhost ~]# kill -HUP 22839
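Beyond querying in ES, the added fields and tags can also be used inside Logstash itself, for example to route events in the output stage. The following is a sketch only: the conditional and the index name `microservice-nginx-...` are hypothetical and not part of the original configuration.

```conf
output {
  # Only events marked with this project field and the nginx tag go to this index
  if [project] == "microservice" and "nginx" in [tags] {
    elasticsearch {
      hosts => ["192.168.179.102:9200"]
      index => "microservice-nginx-%{+YYYY.MM.dd}"   # hypothetical index name
    }
  }
}
```

Events that do not match the condition are simply not sent by this output block, so a real configuration would normally add an else branch or a second output.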
