Collecting Kubernetes cluster logs with ELK

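1. Which logs to collect?

Logs of the Kubernetes system components

Logs of the applications deployed in the Kubernetes cluster

- Standard output

- Log files (written to a specified file)

- Log rotation (30 days retained locally)

- Log format (JSON, key-value)

If Kubernetes was deployed with kubeadm, the component logs are collected from /var/log/messages.

If Kubernetes was deployed from binaries, the component logs are at the log path defined in the configuration files.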

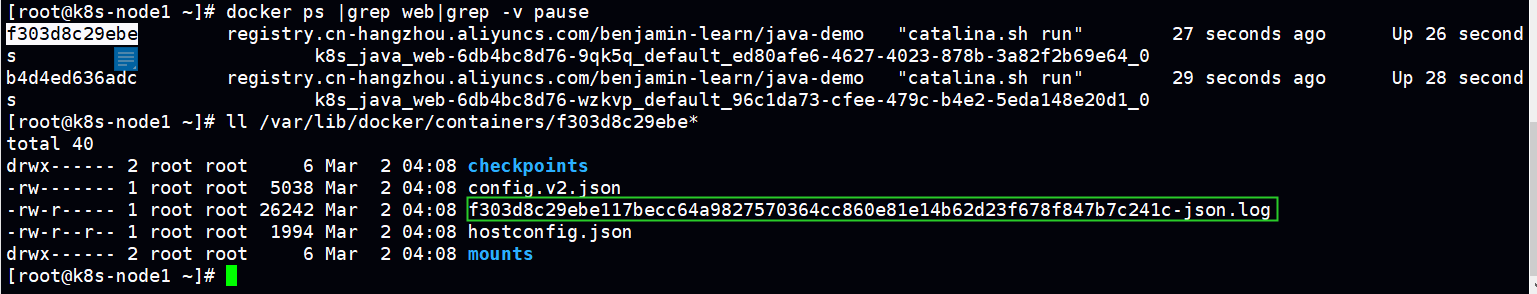

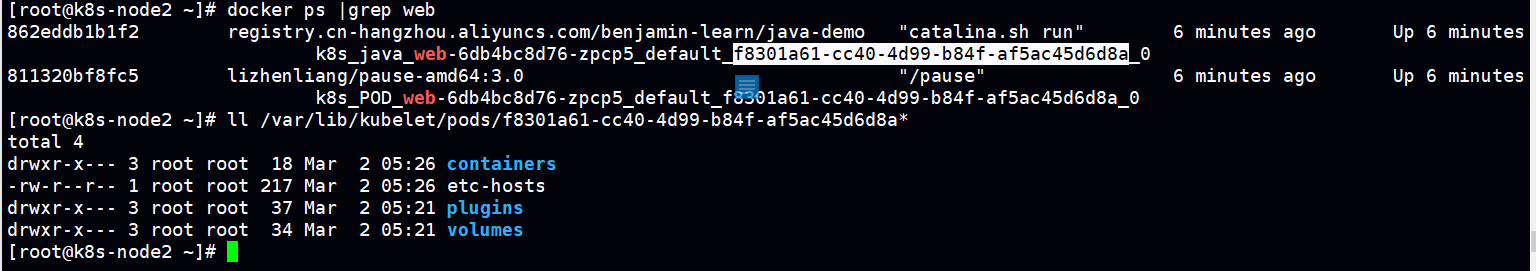

Application container logs

/var/lib/docker/containers/*/*-json.log

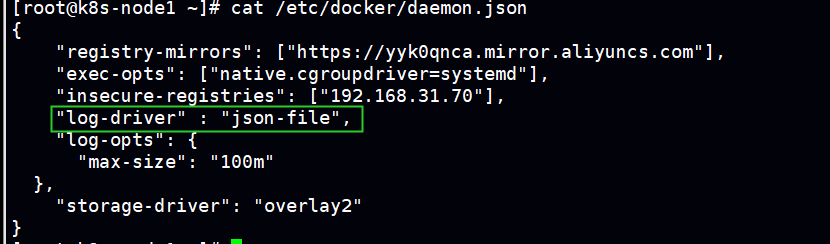

The Docker configuration file sets the default log format to JSON.
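To make the JSON log format concrete, here is a minimal sketch of reading one line of a container's *-json.log file. It assumes the usual json-file layout with `log`, `stream` and `time` keys; the sample line and the function name `parse_docker_json_line` are made up for illustration.

```python
import json


def parse_docker_json_line(line: str) -> dict:
    """Split one json-file log line into message, stream and timestamp."""
    record = json.loads(line)
    return {
        # Docker keeps the trailing newline of the original output line.
        "message": record["log"].rstrip("\n"),
        "stream": record["stream"],
        "time": record["time"],
    }


# Hypothetical sample line, not taken from a real cluster.
sample = '{"log":"hello from nginx\\n","stream":"stdout","time":"2020-01-01T08:00:00.000000000Z"}'
parsed = parse_docker_json_line(sample)
print(parsed["stream"], parsed["message"])
```

A collector such as Filebeat does this decoding itself; the sketch only shows what each line on disk carries.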

Pod log path

/var/lib/kubelet/pods/*/volumes/

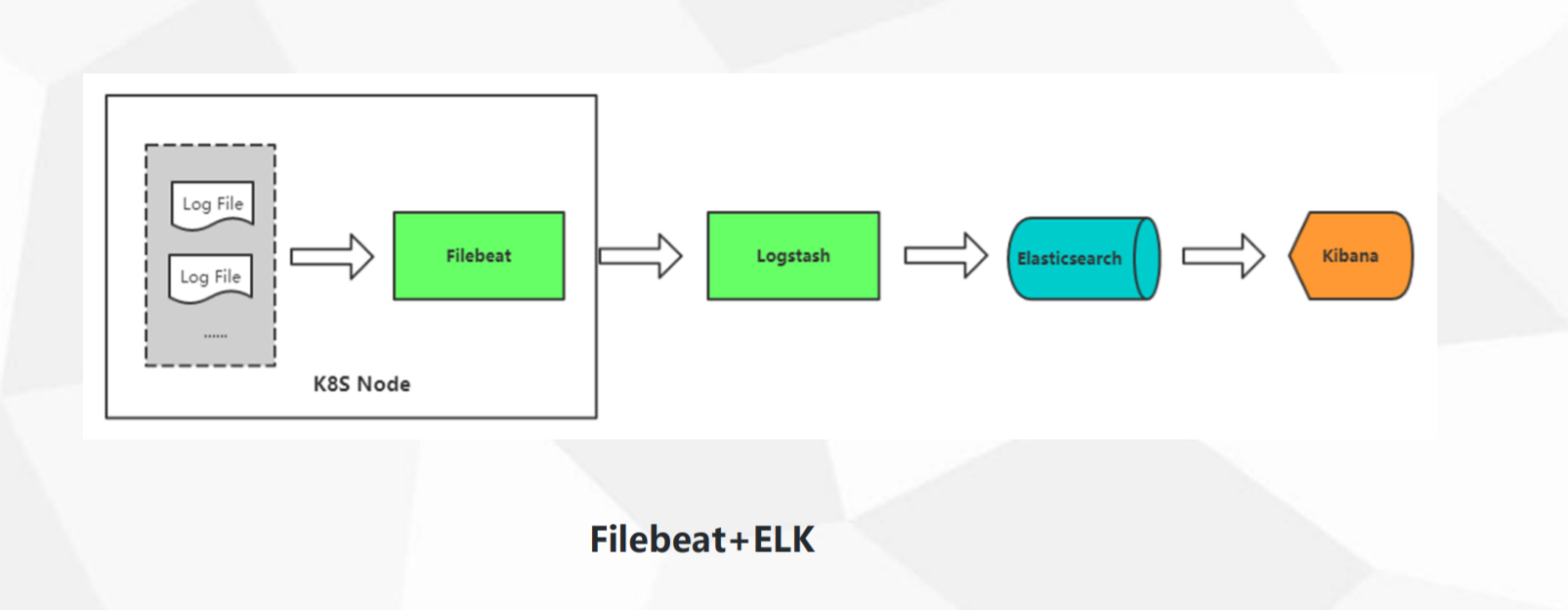

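2. ELK log collection architecture

Logstash is an optional component here. When the logging scenario is more complex, add Logstash to preprocess the logs before they are stored in Elasticsearch.

3. How to collect logs from containers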

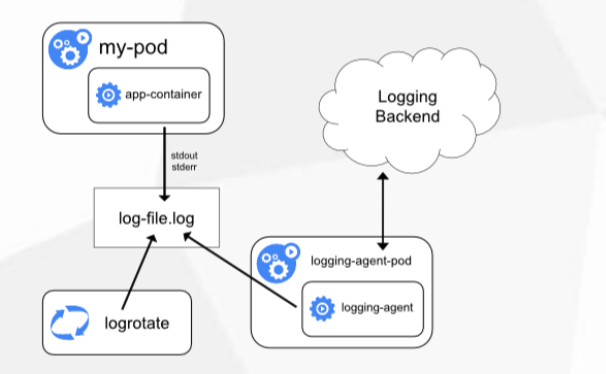

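Option 1:

Deploy one log collector on each Node

Deploy the log collector as a DaemonSet

Collect the logs under the node's /var/lib/kubelet/pods and /var/lib/docker/containers/ directories

Mount the log directories of the containers in each Pod onto a common directory on the host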
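As a rough illustration of what a node-level collector does first, the sketch below discovers log files with the same glob shape as /var/lib/docker/containers/*/*-json.log. It runs against a throwaway temporary directory rather than a real node, and the container IDs and the helper name `find_container_logs` are hypothetical.

```python
import glob
import os
import tempfile


def find_container_logs(root: str) -> dict:
    """Map container ID (the directory name) to the path of its *-json.log file."""
    pattern = os.path.join(root, "*", "*-json.log")
    return {
        os.path.basename(os.path.dirname(path)): path
        for path in sorted(glob.glob(pattern))
    }


# Build a fake directory tree that mimics the layout on a node.
with tempfile.TemporaryDirectory() as root:
    for cid in ("aaa111", "bbb222"):
        os.makedirs(os.path.join(root, cid))
        open(os.path.join(root, cid, f"{cid}-json.log"), "w").close()
    found = find_container_logs(root)
    print(sorted(found))
```

Because the collector runs once per node, a single glob like this covers every container on that node without touching the application Pods.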

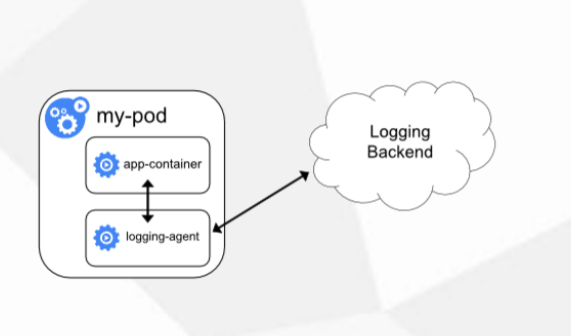

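Option 2:

Attach a dedicated log collection container to the Pod

Add a log collection container to every Pod that runs an application, and share the log directory through an emptyDir volume so that the collector can read the logs.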

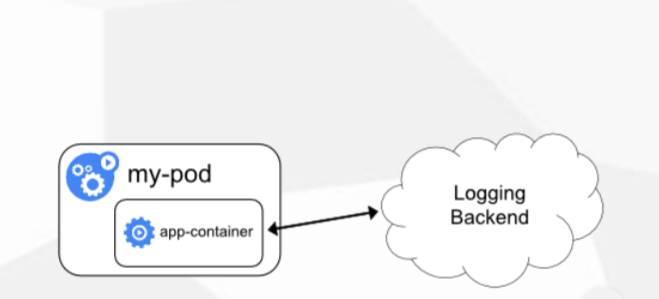

Option 3:

The application pushes its logs directly

This is outside the scope of Kubernetes

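Comparison of the options:

| Approach | Pros | Cons |
| --- | --- | --- |
| Option 1: deploy one log collector on each Node | Only one collector per Node, low resource consumption, no intrusion into the application | If the application writes its logs to stdout and stderr, multi-line logs are not supported. |
| Option 2: attach a dedicated log collection container to the Pod | Low coupling | Every Pod starts its own log collection agent, which increases resource consumption and operations and maintenance cost |
| Option 3: the application pushes its logs directly | No extra collection tool needed | Intrusive to the application, increases application complexity |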

4. Deploying EFK on Kubernetes

mkdir efk && cd efk

elasticsearch.yaml

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: elasticsearch
      namespace: kube-system
      labels:
        k8s-app: elasticsearch
    spec:
      serviceName: elasticsearch
      selector:
        matchLabels:
          k8s-app: elasticsearch
      template:
        metadata:
          labels:
            k8s-app: elasticsearch
        spec:
          containers:
          - image: elasticsearch:7.3.2
            name: elasticsearch
            resources:
              limits:
                cpu: 1
                memory: 2Gi
              requests:
                cpu: 0.5
                memory: 500Mi
            env:
              # Single-node mode: no cluster discovery
              - name: "discovery.type"
                value: "single-node"
              # Note: -Xmx2g equals the 2Gi container limit, which leaves no room for off-heap memory
              - name: ES_JAVA_OPTS
                value: "-Xms512m -Xmx2g"
            ports:
            - containerPort: 9200
              name: db
              protocol: TCP
            volumeMounts:
            - name: elasticsearch-data
              mountPath: /usr/share/elasticsearch/data
      volumeClaimTemplates:
      - metadata:
          name: elasticsearch-data
        spec:
          storageClassName: "managed-nfs-storage"
          accessModes: [ "ReadWriteOnce" ]
          resources:
            requests:
              storage: 20Gi
    ---
    # Headless Service so the StatefulSet is reachable as "elasticsearch"
    apiVersion: v1
    kind: Service
    metadata:
      name: elasticsearch
      namespace: kube-system
    spec:
      clusterIP: None
      ports:
      - port: 9200
        protocol: TCP
        targetPort: db
      selector:
        k8s-app: elasticsearch


kibana.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: kibana
      namespace: kube-system
      labels:
        k8s-app: kibana
    spec:
      replicas: 1
      selector:
        matchLabels:
          k8s-app: kibana
      template:
        metadata:
          labels:
            k8s-app: kibana
        spec:
          containers:
          - name: kibana
            image: kibana:7.3.2
            resources:
              limits:
                cpu: 1
                memory: 500Mi
              requests:
                cpu: 0.5
                memory: 200Mi
            env:
              # Data source: the elasticsearch Service in the same namespace
              - name: ELASTICSEARCH_HOSTS
                value: http://elasticsearch:9200
            ports:
            - containerPort: 5601
              name: ui
              protocol: TCP
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: kibana
      namespace: kube-system
    spec:
      type: NodePort
      ports:
      - port: 5601
        protocol: TCP
        targetPort: ui
        nodePort: 30601
      selector:
        k8s-app: kibana
    ---
    # extensions/v1beta1 Ingress was removed in Kubernetes 1.22;
    # newer clusters need networking.k8s.io/v1 instead
    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: kibana
      namespace: kube-system
    spec:
      rules:
      - host: kibana.ctnrs.com
        http:
          paths:
          - path: /
            backend:
              serviceName: kibana
              servicePort: 5601

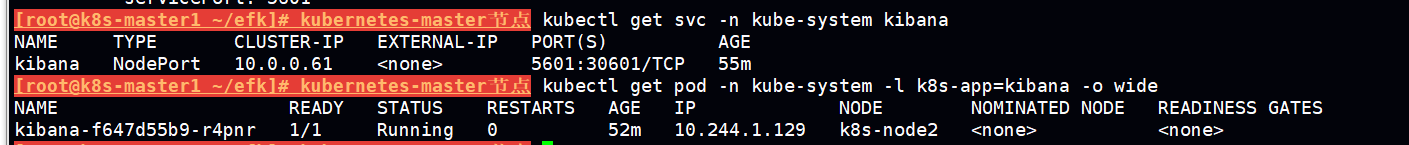

Access Kibana at node IP:30601.

The Kibana page

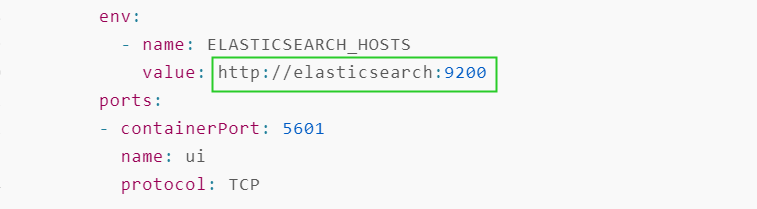

Because kibana.yaml sets the data source to http://elasticsearch:9200, Kibana automatically reads the log data stored in Elasticsearch.

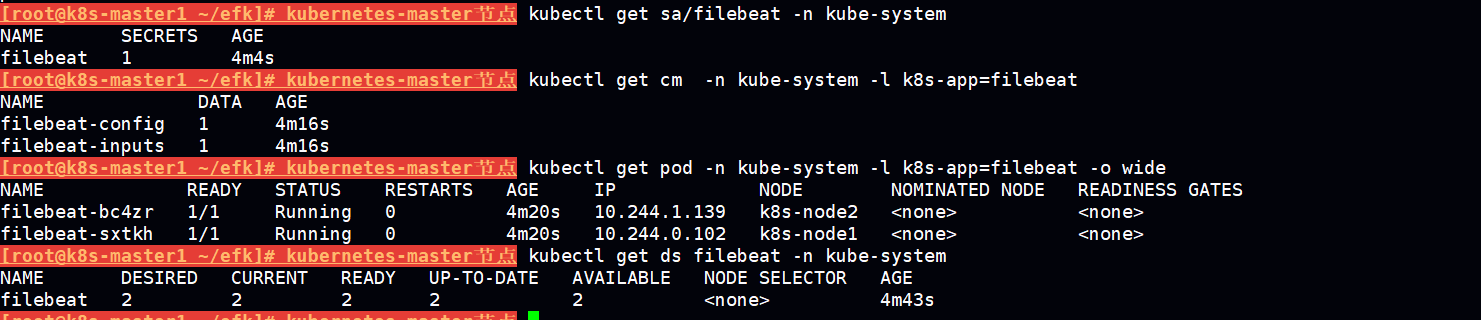

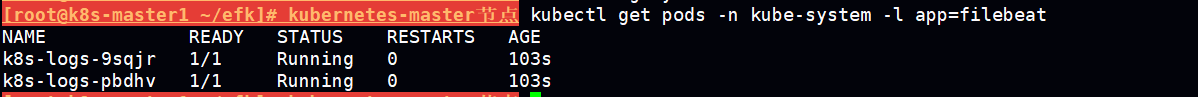

filebeat-kubernetes.yaml

    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: filebeat-config
      namespace: kube-system
      labels:
        k8s-app: filebeat
    data:
      filebeat.yml: |-
        filebeat.config:
          inputs:
            # Mounted `filebeat-inputs` configmap:
            path: ${path.config}/inputs.d/*.yml
            # Reload inputs configs as they change:
            reload.enabled: false
          modules:
            path: ${path.config}/modules.d/*.yml
            # Reload module configs as they change:
            reload.enabled: false
        # To enable hints based autodiscover, remove `filebeat.config.inputs` configuration and uncomment this:
        #filebeat.autodiscover:
        #  providers:
        #    - type: kubernetes
        #      hints.enabled: true
        output.elasticsearch:
          hosts: ['${ELASTICSEARCH_HOST:elasticsearch}:${ELASTICSEARCH_PORT:9200}']
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: filebeat-inputs
      namespace: kube-system
      labels:
        k8s-app: filebeat
    data:
      kubernetes.yml: |-
        - type: docker
          containers.ids:
          - "*"
          processors:
            - add_kubernetes_metadata:
                in_cluster: true
    ---
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: filebeat
      namespace: kube-system
      labels:
        k8s-app: filebeat
    spec:
      selector:
        matchLabels:
          k8s-app: filebeat
      template:
        metadata:
          labels:
            k8s-app: filebeat
        spec:
          serviceAccountName: filebeat
          terminationGracePeriodSeconds: 30
          containers:
          - name: filebeat
            image: elastic/filebeat:7.3.2
            args: [ "-c", "/etc/filebeat.yml", "-e", ]
            env:
            - name: ELASTICSEARCH_HOST
              value: elasticsearch
            - name: ELASTICSEARCH_PORT
              value: "9200"
            securityContext:
              runAsUser: 0
              # If using Red Hat OpenShift uncomment this:
              #privileged: true
            resources:
              limits:
                memory: 200Mi
              requests:
                cpu: 100m
                memory: 100Mi
            volumeMounts:
            - name: config
              mountPath: /etc/filebeat.yml
              readOnly: true
              subPath: filebeat.yml
            - name: inputs
              mountPath: /usr/share/filebeat/inputs.d
              readOnly: true
            - name: data
              mountPath: /usr/share/filebeat/data
            - name: varlibdockercontainers
              mountPath: /var/lib/docker/containers
              readOnly: true
          volumes:
          - name: config
            configMap:
              defaultMode: 0600
              name: filebeat-config
          - name: varlibdockercontainers
            hostPath:
              path: /var/lib/docker/containers
          - name: inputs
            configMap:
              defaultMode: 0600
              name: filebeat-inputs
          # data folder stores a registry of read status for all files, so we don't send everything again on a Filebeat pod restart
          - name: data
            hostPath:
              path: /var/lib/filebeat-data
              type: DirectoryOrCreate
    ---
    # rbac.authorization.k8s.io/v1beta1 was removed in Kubernetes 1.22;
    # newer clusters need rbac.authorization.k8s.io/v1 instead
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRoleBinding
    metadata:
      name: filebeat
    subjects:
    - kind: ServiceAccount
      name: filebeat
      namespace: kube-system
    roleRef:
      kind: ClusterRole
      name: filebeat
      apiGroup: rbac.authorization.k8s.io
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRole
    metadata:
      name: filebeat
      labels:
        k8s-app: filebeat
    rules:
    - apiGroups: [""] # "" indicates the core API group
      resources:
      - namespaces
      - pods
      verbs:
      - get
      - watch
      - list
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: filebeat
      namespace: kube-system
      labels:
        k8s-app: filebeat
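The comment on the `data` volume says it holds a registry of read status so that logs are not sent again after a Filebeat Pod restart. The idea can be sketched as a per-file byte offset persisted to disk. This is a simplified illustration only, not Filebeat's actual registry format; `read_new_lines` and the file names are hypothetical.

```python
import json
import os
import tempfile


def read_new_lines(log_path: str, registry_path: str) -> list:
    """Return lines appended since the last call, persisting the byte offset."""
    registry = {}
    if os.path.exists(registry_path):
        with open(registry_path) as f:
            registry = json.load(f)
    offset = registry.get(log_path, 0)
    with open(log_path, "rb") as f:
        f.seek(offset)          # skip everything that was already shipped
        data = f.read()
        registry[log_path] = f.tell()
    with open(registry_path, "w") as f:
        json.dump(registry, f)  # survives a restart because it lives on disk
    return data.decode("utf-8").splitlines()


with tempfile.TemporaryDirectory() as tmp:
    log_path = os.path.join(tmp, "app.log")
    registry_path = os.path.join(tmp, "registry.json")
    with open(log_path, "w") as f:
        f.write("line 1\nline 2\n")
    first = read_new_lines(log_path, registry_path)
    with open(log_path, "a") as f:
        f.write("line 3\n")
    # The offset is reloaded from disk, as it would be after a restart.
    second = read_new_lines(log_path, registry_path)
    print(first, second)
```

This is why the manifest mounts the data directory from a hostPath: if the registry lived only inside the container, every restart would start again from offset zero.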

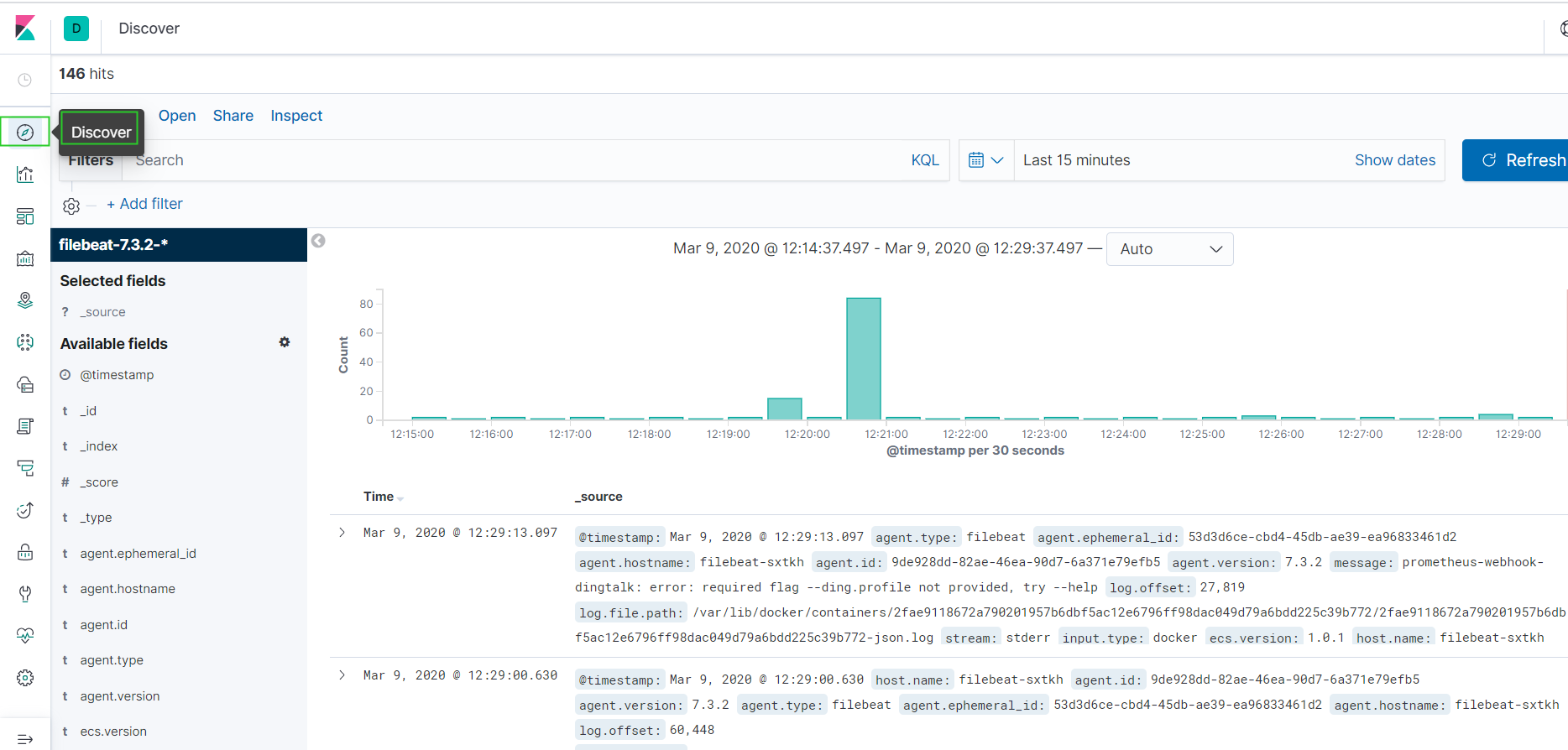

Once Filebeat is up, Kibana shows the logs that Filebeat has processed and stored in Elasticsearch.

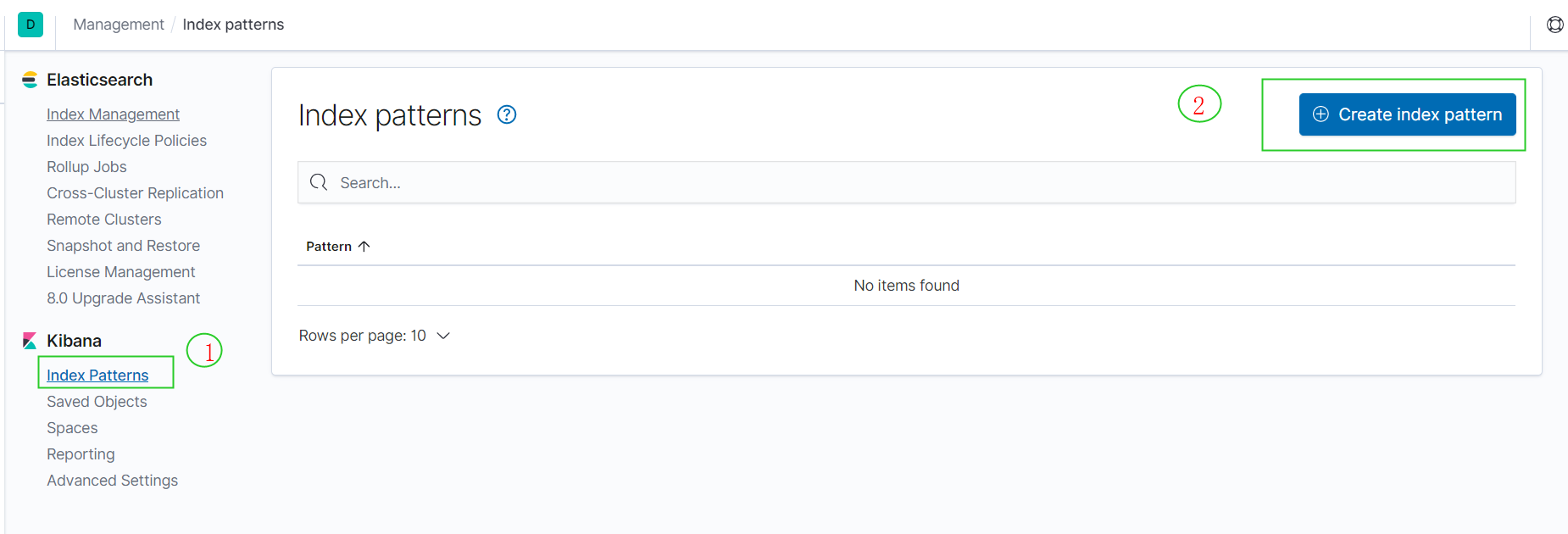

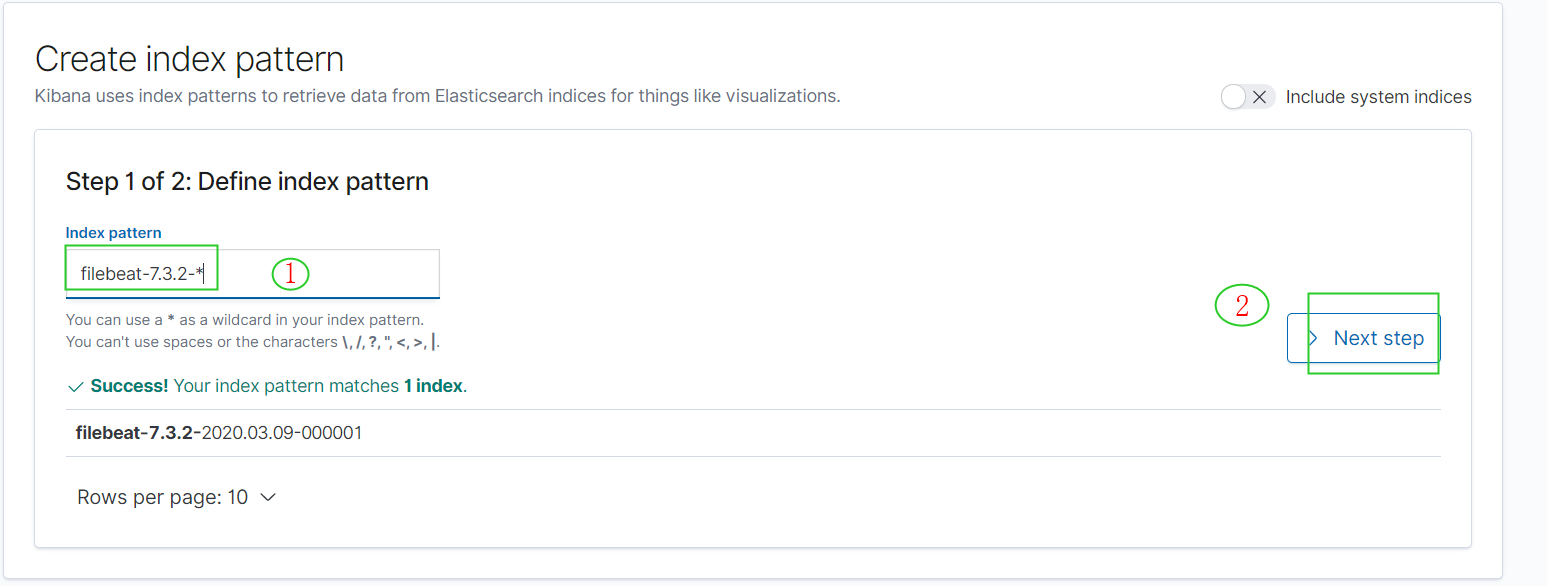

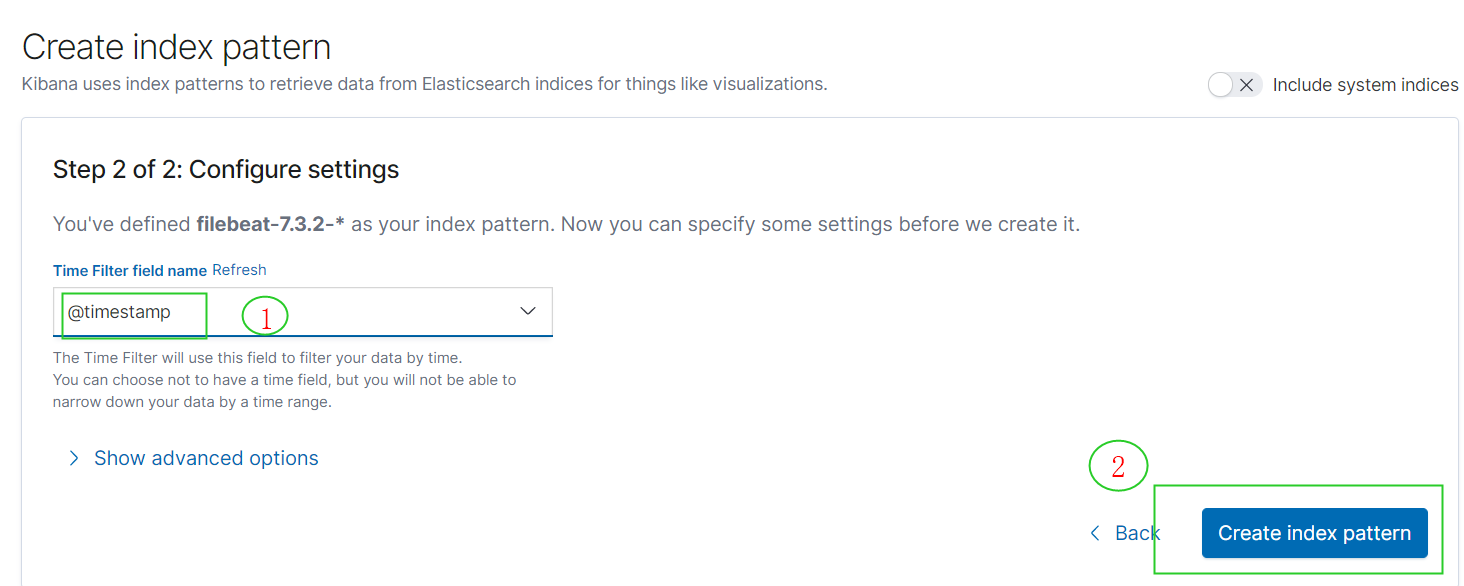

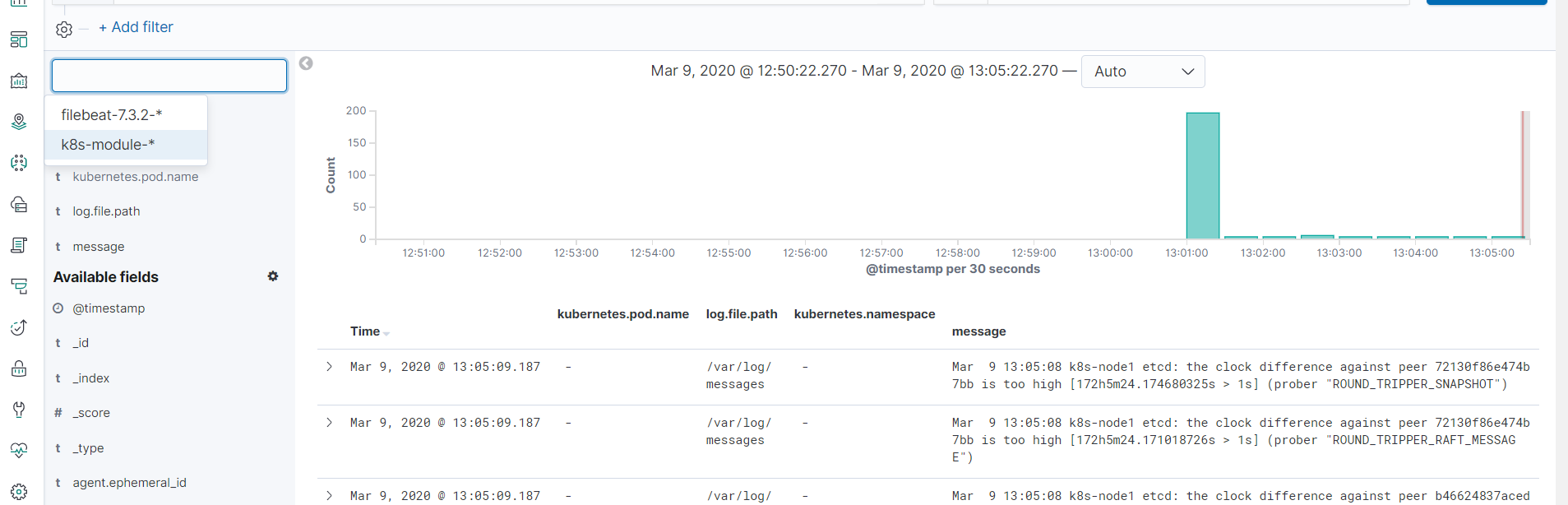

Create an index pattern

View the data

Use the Available fields list in the left sidebar to choose which fields are displayed.

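5. Option 2: attach a dedicated log collection container to the Pod

k8s-logs.yaml (a DaemonSet that collects /var/log/messages from each node)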

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: k8s-logs-filebeat-config
      namespace: kube-system
    data:
      filebeat.yml: |
        filebeat.inputs:
          - type: log
            paths:
              - /var/log/messages
            # Custom fields added to every event, placed at the top level
            fields:
              app: k8s
              type: module
            fields_under_root: true
        setup.ilm.enabled: false
        setup.template.name: "k8s-module"
        setup.template.pattern: "k8s-module-*"
        output.elasticsearch:
          hosts: ['elasticsearch.kube-system:9200']
          index: "k8s-module-%{+yyyy.MM.dd}"
    ---
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: k8s-logs
      namespace: kube-system
    spec:
      selector:
        matchLabels:
          project: k8s
          app: filebeat
      template:
        metadata:
          labels:
            project: k8s
            app: filebeat
        spec:
          containers:
          - name: filebeat
            image: elastic/filebeat:7.3.2
            args: [ "-c", "/etc/filebeat.yml", "-e", ]
            resources:
              requests:
                cpu: 100m
                memory: 100Mi
              limits:
                cpu: 500m
                memory: 500Mi
            securityContext:
              runAsUser: 0
            volumeMounts:
            - name: filebeat-config
              mountPath: /etc/filebeat.yml
              subPath: filebeat.yml
            - name: k8s-logs
              mountPath: /var/log/messages
          volumes:
          # The node's /var/log/messages is mounted into the collector
          - name: k8s-logs
            hostPath:
              path: /var/log/messages
          - name: filebeat-config
            configMap:
              name: k8s-logs-filebeat-config
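The `index` setting above produces one index per day, and `setup.template.pattern` is a wildcard that has to cover those names. A small sketch of that relationship follows, assuming the date part is formatted from the event timestamp as year.month.day; the helper `daily_index` is hypothetical and only mimics the naming.

```python
from datetime import datetime, timezone
from fnmatch import fnmatch


def daily_index(prefix: str, when: datetime) -> str:
    """Mimic the effect of index: "<prefix>-%{+yyyy.MM.dd}"."""
    return f"{prefix}-{when.strftime('%Y.%m.%d')}"


name = daily_index("k8s-module", datetime(2020, 1, 5, tzinfo=timezone.utc))
print(name)                           # k8s-module-2020.01.05
print(fnmatch(name, "k8s-module-*"))  # True
```

The same wildcard form is what gets entered as the index pattern in Kibana so that every daily index is picked up.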

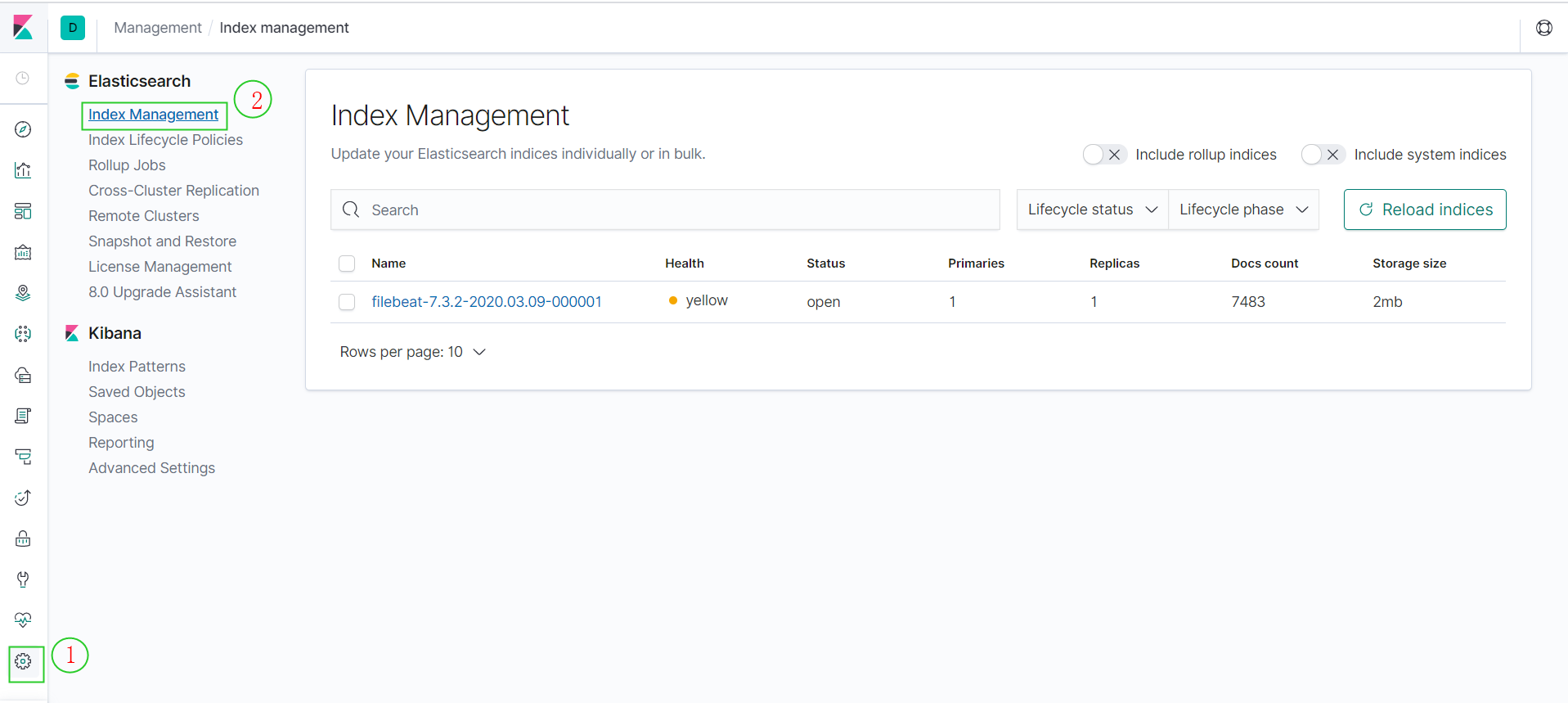

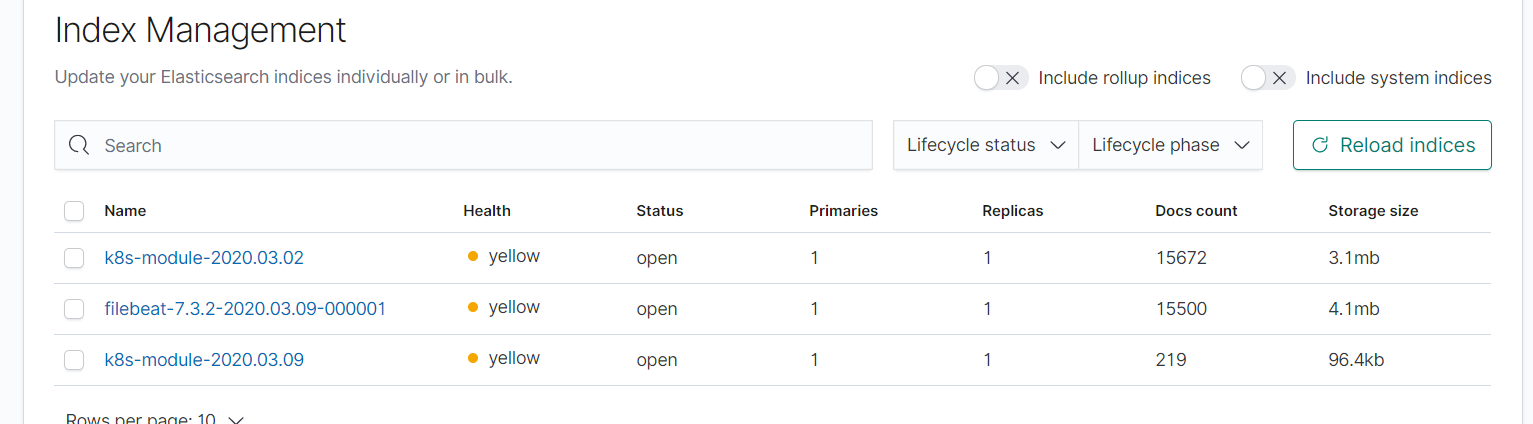

Check index management

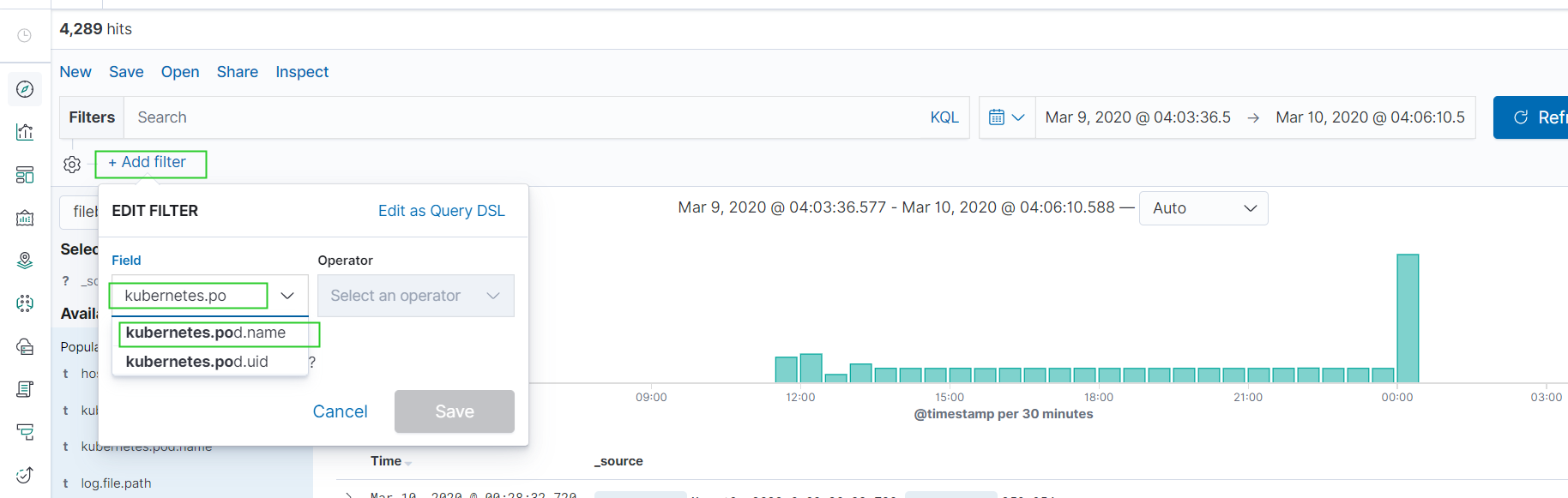

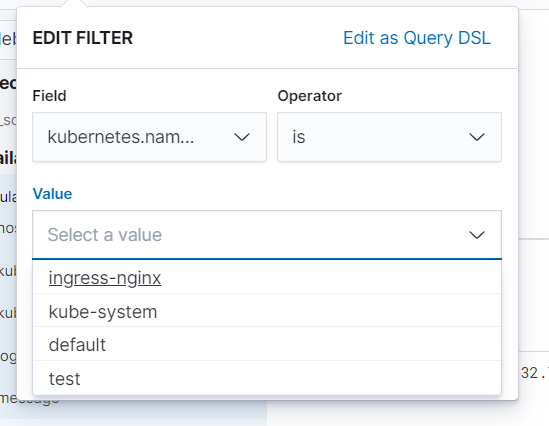

Use a filter

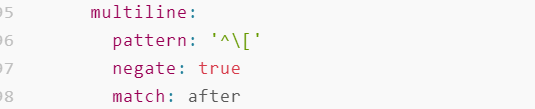

Java exception logs span several lines, so they need multiline matching.

A log collection demo for a Java application

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: java-demo
      namespace: test
    spec:
      replicas: 2
      selector:
        matchLabels:
          project: www
          app: java-demo
      template:
        metadata:
          labels:
            project: www
            app: java-demo
        spec:
          imagePullSecrets:
          - name: "docker-regsitry-auth"
          containers:
          # Application container: Tomcat writes its logs into the shared volume
          - image: 192.168.31.70/demo/java-demo:v2
            name: java
            imagePullPolicy: Always
            ports:
            - containerPort: 8080
              name: web
              protocol: TCP
            resources:
              requests:
                cpu: 0.5
                memory: 1Gi
              limits:
                cpu: 1
                memory: 2Gi
            livenessProbe:
              httpGet:
                path: /
                port: 8080
              initialDelaySeconds: 60
              timeoutSeconds: 20
            readinessProbe:
              httpGet:
                path: /
                port: 8080
              initialDelaySeconds: 60
              timeoutSeconds: 20
            volumeMounts:
            - name: tomcat-logs
              mountPath: /usr/local/tomcat/logs
          # Sidecar container: Filebeat reads the same directory
          - name: filebeat
            image: elastic/filebeat:7.3.2
            args: [ "-c", "/etc/filebeat.yml", "-e", ]
            resources:
              limits:
                memory: 500Mi
              requests:
                cpu: 100m
                memory: 100Mi
            securityContext:
              runAsUser: 0
            volumeMounts:
            - name: filebeat-config
              mountPath: /etc/filebeat.yml
              subPath: filebeat.yml
            - name: tomcat-logs
              mountPath: /usr/local/tomcat/logs
          volumes:
          # emptyDir shared by both containers in the Pod
          - name: tomcat-logs
            emptyDir: {}
          - name: filebeat-config
            configMap:
              name: filebeat-config
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: filebeat-config
      namespace: test
    data:
      filebeat.yml: |-
        filebeat.inputs:
        - type: log
          paths:
            - /usr/local/tomcat/logs/catalina.*
          # tags: ["tomcat"]
          fields:
            app: www
            type: tomcat-catalina
          fields_under_root: true
          multiline:
            pattern: '^\['
            negate: true
            match: after
        setup.ilm.enabled: false
        setup.template.name: "tomcat-catalina"
        setup.template.pattern: "tomcat-catalina-*"
        output.elasticsearch:
          hosts: ['elasticsearch.kube-system:9200']
          index: "tomcat-catalina-%{+yyyy.MM.dd}"

The multiline settings in this configuration are the matching rule aimed at Java exceptions.
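To show what a multiline rule with pattern '^\[', negate: true and match: after does, here is a minimal simulation: any line that does not start with "[" is appended to the event before it. This is a sketch of the grouping behaviour only, not Filebeat code, and the sample log lines are invented.

```python
import re


def group_multiline(lines, pattern=r"^\["):
    """Append every line that does NOT match the pattern to the previous event."""
    start = re.compile(pattern)
    events = []
    for line in lines:
        if start.search(line) or not events:
            events.append(line)          # a matching line starts a new event
        else:
            events[-1] += "\n" + line    # continuation line, e.g. a stack frame
    return events


# Hypothetical log excerpt with one Java stack trace.
sample = [
    "[2020-01-01 08:00:00] ERROR request failed",
    "java.lang.NullPointerException: boom",
    "    at com.example.Demo.run(Demo.java:42)",
    "[2020-01-01 08:00:01] INFO recovered",
]
events = group_multiline(sample)
print(len(events))
```

With this grouping the whole stack trace arrives in Elasticsearch as a single document instead of one document per line.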

Once the logs have been written to Elasticsearch, configure the index pattern.