ELK Part 6: Collecting system logs and nginx access logs with logstash and redis

I. Collecting system logs with logstash and redis

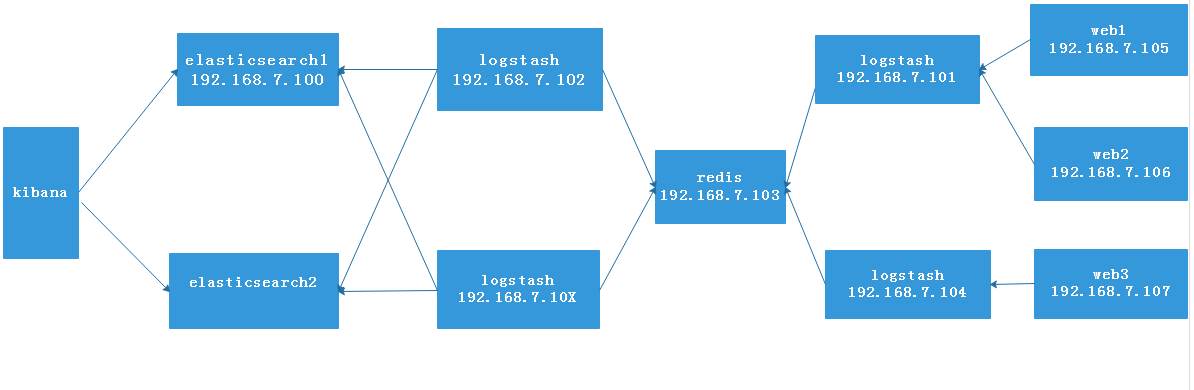

架构图:

Environment:

Host A: elasticsearch host, IP address 192.168.7.100

Host B: logstash host, IP address 192.168.7.102

Host C: redis host, IP address 192.168.7.103

Host D: logstash host / nginx host, IP address 192.168.7.101

1. Install and configure redis

1. Install and configure the redis service, then start it

[root@redis ~]# yum install redis -y

[root@redis ~]# vim /etc/redis.conf

bind 0.0.0.0 # listen on all local addresses

requirepass 123456 # set a password to secure redis

[root@redis ~]# systemctl restart redis # start the redis service
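Before pointing logstash at redis, it can help to confirm from the logstash host that redis is reachable and accepts the password. The Python sketch below speaks the redis wire protocol (RESP) over a plain socket, so it needs no client library; `encode_command` and `check_redis` are hypothetical helper names, not part of any redis tool.

```python
import socket


def encode_command(*args: str) -> bytes:
    """Encode a redis command as a RESP array of bulk strings."""
    out = [f"*{len(args)}\r\n".encode()]
    for arg in args:
        data = arg.encode()
        out.append(f"${len(data)}\r\n".encode() + data + b"\r\n")
    return b"".join(out)


def check_redis(host: str, port: int, password: str, timeout: float = 3.0) -> bool:
    """Send AUTH then PING; True if redis answers +OK and +PONG.

    Raises OSError (including socket.timeout) if the host is unreachable.
    """
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(encode_command("AUTH", password) + encode_command("PING"))
        reply = b""
        # Each of the two replies is a single CRLF-terminated line.
        while reply.count(b"\r\n") < 2:
            chunk = sock.recv(1024)
            if not chunk:
                break
            reply += chunk
    return reply.startswith(b"+OK\r\n+PONG\r\n")
```

For this lab, `check_redis("192.168.7.103", 6379, "123456")` should return True once `bind 0.0.0.0` and `requirepass` are in effect; a wrong password makes redis answer with `-ERR ...` lines instead, and the function returns False.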

2. Install logstash on logstash host D

1. Install the JDK first and create the symbolic links

[root@logstash-1 ~]# cd /usr/local/src

[root@logstash-1 src]# ls

jdk1.8.0_212 jdk-8u212-linux-x64.tar.gz sonarqube-6.7.7 sonarqube-6.7.7.zip

[root@logstash-1 src]# tar xvf jdk-8u212-linux-x64.tar.gz

[root@logstash-1 src]# ln -s /usr/local/src/jdk1.8.0_212 /usr/local/jdk # link the extracted JDK directory, not the tarball

[root@logstash-1 src]# ln -s /usr/local/jdk/bin/java /usr/bin/

2. Configure the JDK environment variables and apply them

[root@logstash-1 src]# vim /etc/profile.d/jdk.sh # set the JDK environment variables

export HISTTIMEFORMAT="%F %T `whoami`"

export LANG="en_US.utf-8"

export JAVA_HOME=/usr/local/jdk

export CLASSPATH=.:$JAVA_HOME/jre/lib/rt.jar:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export PATH=$PATH:$JAVA_HOME/bin

[root@logstash-1 src]# . /etc/profile.d/jdk.sh # apply the JDK environment variables to the current shell
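A quick way to catch a broken JAVA_HOME (for example a /usr/local/jdk link that points at the .tar.gz archive instead of the extracted jdk1.8.0_212 directory) is to resolve the link and look for an executable bin/java. This is a minimal Python sketch; `check_java_home` is a hypothetical helper name.

```python
import os


def check_java_home(java_home: str) -> list:
    """Return a list of problems with a JAVA_HOME path; empty means it looks usable."""
    problems = []
    real = os.path.realpath(java_home)  # follow symlinks such as /usr/local/jdk
    if not os.path.isdir(real):
        problems.append(f"{java_home} resolves to {real}, which is not a directory")
        return problems
    java_bin = os.path.join(real, "bin", "java")
    if not (os.path.isfile(java_bin) and os.access(java_bin, os.X_OK)):
        problems.append(f"{java_bin} is missing or not executable")
    return problems


if __name__ == "__main__":
    home = os.environ.get("JAVA_HOME", "/usr/local/jdk")
    issues = check_java_home(home)
    print("JAVA_HOME looks fine" if not issues else "\n".join(issues))
```

Run it after sourcing /etc/profile.d/jdk.sh so that JAVA_HOME is set; any reported problem means the symbolic link or the extraction step needs another look.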

3. Install logstash

[root@logstash-1 src]# yum install logstash-6.8.1.rpm -y

[root@logstash-1 ~]# vim /etc/profile.d/logstash.sh # add the logstash binaries to PATH

export PATH=$PATH:/usr/share/logstash/bin/

[root@logstash-1 ~]# . /etc/profile.d/logstash.sh # apply the variable to the current shell

4. In the /etc/logstash/conf.d directory, create a configuration file that writes the log to redis: redis-es.conf

input {

file {

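path => "/var/log/messages" # the system log file on this logstash host

type => "message-101" # log type, matched by the output conditional

start_position => "beginning"

stat_interval => "2" # check the file for changes every 2 seconds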

#codec => "json"

}

}

output {

if [type] == "message-101" {

redis {

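host => "192.168.7.103" # send the events to the redis host at .103

port => "6379" # redis listening port

password => "123456" # redis password (the requirepass value)

db => "1" # redis database number; the default is 0

key => "linux-7-101-key" # custom key name

data_type => "list" # store the events in a redis list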

}

}

}
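With `data_type => "list"`, the redis output appends each event to the key as a JSON string (json is the output's default codec), so pending entries can be inspected with `LRANGE linux-7-101-key 0 -1` in redis-cli. The Python sketch below decodes one such entry; the sample entry is hypothetical and only illustrates the usual fields the logstash 6.x file input produces (`@timestamp`, `@version`, `host`, `path`, `message`, `type`).

```python
import json

# Hypothetical list entry (values made up), shaped like an event from the
# logstash 6.x file input as stored by the redis output's json codec.
SAMPLE_ENTRY = (
    '{"@timestamp":"2019-07-15T08:00:00.000Z","@version":"1",'
    '"host":"logstash-1","path":"/var/log/messages",'
    '"message":"11","type":"message-101"}'
)


def summarize(entry: str) -> str:
    """Decode one JSON list entry and return 'type path: message'."""
    event = json.loads(entry)
    return f'{event["type"]} {event["path"]}: {event["message"]}'


print(summarize(SAMPLE_ENTRY))
```

The `type` field carried inside each entry is what the indexer's `if [type] == ...` conditional tests later, which is why the type string must survive the trip through redis unchanged.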

5. If the logstash service runs as the logstash user, change the permissions of the system log to 644; otherwise logstash cannot read it.

[root@logstash-1 conf.d]# vim /etc/systemd/system/logstash.service

[Unit]

Description=logstash

[Service]

Type=simple

# Run logstash as root here; in production it is better to run it as the logstash user.

User=root

Group=root

# Load env vars from /etc/default/ and /etc/sysconfig/ if they exist.

# Prefixing the path with '-' makes it try to load, but if the file doesn't

# exist, it continues onward.

EnvironmentFile=-/etc/default/logstash

EnvironmentFile=-/etc/sysconfig/logstash

ExecStart=/usr/share/logstash/bin/logstash "--path.settings" "/etc/logstash"

Restart=always

WorkingDirectory=/

Nice=19

LimitNOFILE=16384

[Install]

WantedBy=multi-user.target

[root@logstash-1 conf.d]# systemctl daemon-reload # reload systemd after editing the unit file

[root@logstash-1 conf.d]# chmod 644 /var/log/messages # make the system log readable by the logstash user
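systemd treats only lines that start with # or ; as comments; unit files have no trailing comments, so a note written after a value (such as `User=root #...`) becomes part of that value. The simplified Python parser below is a sketch that ignores line continuations and quoting; `parse_unit_line` is a hypothetical name used only to show the effect.

```python
from typing import Optional, Tuple


def parse_unit_line(line: str) -> Optional[Tuple[str, str]]:
    """Split one unit-file line into (key, value), roughly as systemd does.

    Blank lines, section headers, and lines whose first non-blank character
    is '#' or ';' return None. Everything after the first '=' is the value,
    so a trailing '# ...' stays part of it.
    """
    stripped = line.strip()
    if not stripped or stripped[0] in "#;" or stripped.startswith("["):
        return None
    key, _, value = stripped.partition("=")
    return key.strip(), value.strip()


print(parse_unit_line("User=root #run as root"))
```

Putting each comment on its own line, as the unit file's own `# Load env vars ...` lines already do, avoids the problem.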

6. Start the logstash service

# systemctl start logstash

3. Test the logstash service

1. On the logstash host, append a test line to the system log /var/log/messages

[root@logstash-1 src]# echo 11 >> /var/log/messages

2. On the redis server, log in with the redis client and list the keys

[root@redis ~]# redis-cli -h 192.168.7.103

192.168.7.103:6379> auth 123456

OK

192.168.7.103:6379> SELECT 1

OK

192.168.7.103:6379[1]> KEYS *

1) "linux-7-101-key" # logstash has shipped this host's system log to the redis server
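redis-cli prints the numbered list after decoding the server's RESP array reply. To run the same check from a script, the raw reply to `KEYS *` can be decoded as in the Python sketch below; `parse_resp_array` is a hypothetical helper that only handles arrays of bulk strings, which is what KEYS returns. KEYS walks the whole keyspace, so on a busy production redis the SCAN command is the usual substitute.

```python
def parse_resp_array(reply: bytes) -> list:
    """Decode a RESP array of bulk strings, such as the raw reply to KEYS *."""
    if not reply.startswith(b"*"):
        raise ValueError("reply is not a RESP array")
    end = reply.index(b"\r\n")
    count = int(reply[1:end])  # number of elements (-1 means a null array)
    pos = end + 2
    items = []
    for _ in range(max(count, 0)):
        if reply[pos:pos + 1] != b"$":
            raise ValueError("expected a bulk string element")
        end = reply.index(b"\r\n", pos)
        length = int(reply[pos + 1:end])  # byte length of this element
        start = end + 2
        items.append(reply[start:start + length].decode())
        pos = start + length + 2  # skip the element and its trailing CRLF
    return items
```

An empty database answers `*0\r\n`, which decodes to an empty list, the same state redis-cli reports as `(empty list or set)`.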

At this point the second logstash host (D) is shipping its system log to the redis host.

4. Configure logstash host B

1. In the /etc/logstash/conf.d directory, create a configuration file that reads the log events buffered in redis

input {

redis {

host => "192.168.7.103" # IP address of the redis host

port => "6379"

password => "123456"

db => "1"

key => "linux-7-101-key" # read the key written by host D

data_type => "list"

}

}

output {

if [type] == "message-101" { # must match the type set on the second logstash host (D)

elasticsearch {

hosts => ["192.168.7.100:9200"] # IP address of the elasticsearch host

index => "message-7-101-%{+YYYY.MM.dd}"

}

}

}
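The `%{+YYYY.MM.dd}` part of the index name is filled from the event's `@timestamp`, which logstash keeps in UTC. On a host whose local time is UTC+8, events logged between 00:00 and 08:00 local time therefore land in the previous day's index. The Python sketch below reproduces that naming rule; `daily_index` is a hypothetical helper, not a logstash function.

```python
from datetime import datetime, timedelta, timezone


def daily_index(prefix: str, ts: datetime) -> str:
    """Build '<prefix>-YYYY.MM.dd' with the date taken in UTC, like %{+YYYY.MM.dd}."""
    if ts.tzinfo is None:
        raise ValueError("timestamp must be timezone-aware")
    utc = ts.astimezone(timezone.utc)
    return f"{prefix}-{utc:%Y.%m.%d}"


utc_plus_8 = timezone(timedelta(hours=8))
# 02:00 on 2019-07-16 in UTC+8 is 18:00 on 2019-07-15 in UTC.
print(daily_index("message-7-101", datetime(2019, 7, 16, 2, 0, tzinfo=utc_plus_8)))
# prints message-7-101-2019.07.15
```

Keep this in mind when an event seems to be missing from today's index in Kibana: it may sit in yesterday's.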

2. Restart the logstash service on host B

# systemctl restart logstash

3. On the redis server, you can now see that logstash has consumed the data and forwarded it to the elasticsearch server.

192.168.7.103:6379[1]> KEYS *

(empty list or set) # the redis data has been drained
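KEYS * comes back empty because the redis input on host B removes events from the head of the list as it reads them, and redis deletes a list key as soon as its last element is gone. The toy model below is an in-memory Python stand-in, not a redis client; `ToyRedisList` is a hypothetical class that only illustrates the FIFO order (appended at the tail on host D, removed from the head on host B) and the disappearing key.

```python
from collections import deque


class ToyRedisList:
    """In-memory stand-in (not a redis client) for the list behaviour this pipeline uses."""

    def __init__(self):
        self._lists = {}

    def rpush(self, key, value):
        # The shipper's redis output appends each event to the tail of the list.
        self._lists.setdefault(key, deque()).append(value)

    def lpop(self, key):
        # The indexer's redis input removes events from the head of the list.
        items = self._lists.get(key)
        if not items:
            return None
        value = items.popleft()
        if not items:
            del self._lists[key]  # redis deletes a list key once its last element is removed
        return value

    def keys(self):
        return sorted(self._lists)
```

Because events leave in the order they arrived, redis acts as a buffer between the shipper and the indexer: if host B stops, the list simply grows until B comes back and drains it.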

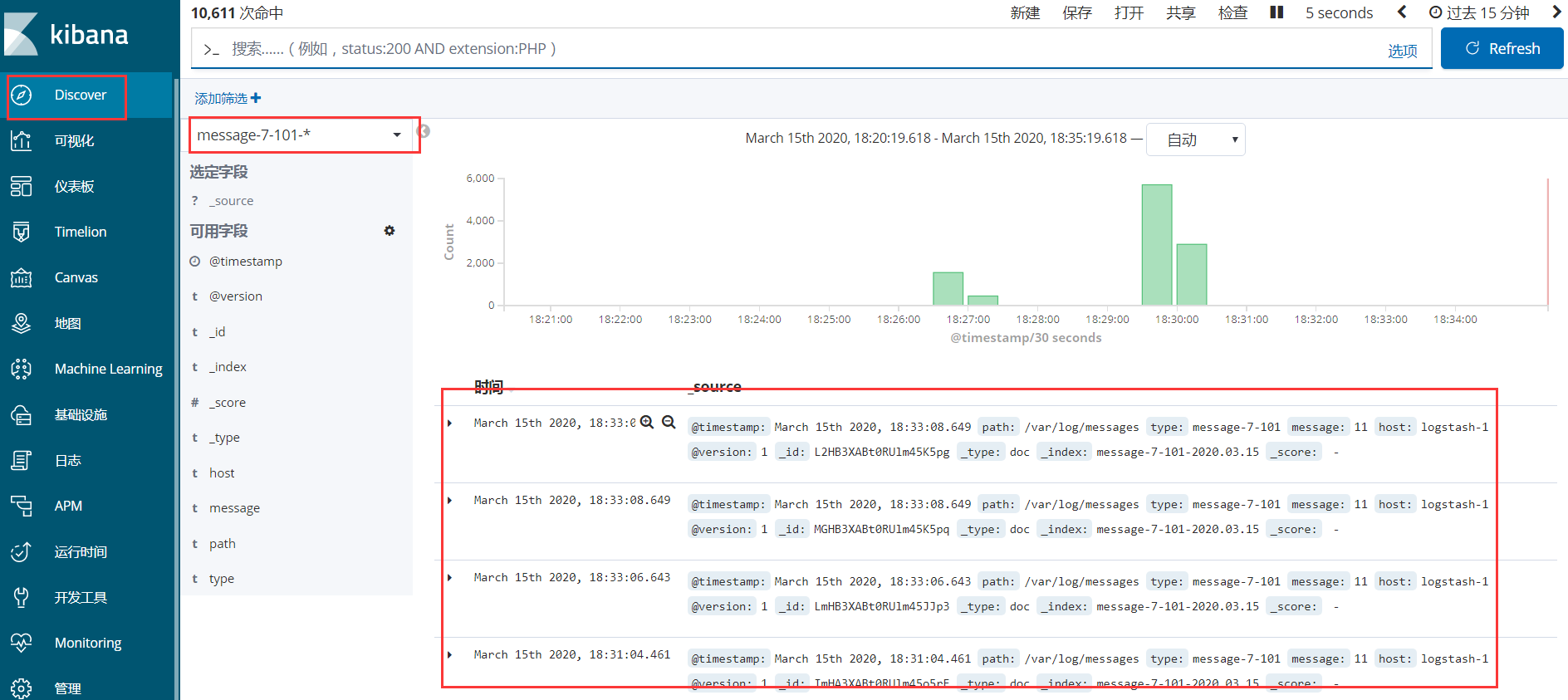

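5. Create an index pattern in the Kibana console

1. Create an index pattern for the data collected through redis

2. View the collected events on the Discover tab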

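II. Collecting nginx access logs with logstash and redis

1. Install nginx on host D and configure its access log in JSON format

1. Install nginx, preferably by compiling from source, which makes later nginx upgrades easier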

[root@logstash-1 ~]# cd /usr/local/src

[root@logstash-1 src]# wget http://nginx.org/download/nginx-1.14.2.tar.gz

[root@logstash-1 src]# tar xvf nginx-1.14.2.tar.gz

[root@logstash-1 src]# cd nginx-1.14.2

[root@logstash-1 nginx-1.14.2]# ./configure --prefix=/apps/nginx # configure nginx with /apps/nginx as the install directory

[root@logstash-1 nginx-1.14.2]# make -j 2 && make install # compile and install nginx

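2. Edit the nginx configuration file /apps/nginx/conf/nginx.conf and switch the access log to JSON format (put these directives in the http block, the only context where log_format is allowed)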

log_format access_json '{"@timestamp":"$time_iso8601",'

'"host":"$server_addr",'

'"clientip":"$remote_addr",'

'"size":$body_bytes_sent,'

'"responsetime":$request_time,'

'"upstreamtime":"$upstream_response_time",'

'"upstreamhost":"$upstream_addr",'

'"http_host":"$host",'

'"url":"$uri",'

'"domain":"$host",'

'"http_user_agent":"$http_user_agent",'

'"xff":"$http_x_forwarded_for",'

'"referer":"$http_referer",'

'"status":"$status"}';

access_log /var/log/nginx/access.log access_json; # use the access_json format and write the access log under /var/log/nginx

[root@logstash-1 nginx-1.14.2]# mkdir /var/log/nginx # create the directory for the log files
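Because the logstash file input configured later in this section reads this log with `codec => "json"`, every line must be a valid JSON object; lines that fail to parse end up tagged `_jsonparsefailure`. nginx's default log escaping writes characters such as `"` as `\x22`, which is not a legal JSON escape, so a client sending a quote in its User-Agent can produce an unparsable line; nginx 1.11.8 and later also accept `log_format access_json escape=json ...`, which escapes for JSON instead. The Python sketch below scans log lines for ones that do not parse; `check_json_lines` is a hypothetical helper.

```python
import json


def check_json_lines(lines):
    """Yield (line_number, error) for every non-blank line that is not a JSON object."""
    for number, line in enumerate(lines, start=1):
        line = line.strip()
        if not line:
            continue
        try:
            obj = json.loads(line)
        except json.JSONDecodeError as exc:
            yield number, str(exc)
            continue
        if not isinstance(obj, dict):
            yield number, "not a JSON object"
```

To check the real log, pass it an open file, for example `open("/var/log/nginx/access.log")`, and print each reported line number and error.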

3. Start nginx and confirm that it is listening on port 80

[root@logstash-1 nginx-1.14.2]# /apps/nginx/sbin/nginx

[root@logstash-1 nginx-1.14.2]# ss -nlt

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 100 127.0.0.1:25 *:*

LISTEN 0 511 *:80 *:*

LISTEN 0 128 *:22 *:*

LISTEN 0 100 [::1]:25 [::]:*

LISTEN 0 50 [::ffff:127.0.0.1]:9600 [::]:*

LISTEN 0 128 [::]:22 [::]:*
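ss only shows that nginx listens locally. From another host (for example B) you can confirm that port 80 on 192.168.7.101 is reachable with a plain TCP connect. A minimal Python sketch; `is_listening` is a hypothetical helper, and a True result only proves that the TCP handshake succeeded, not that nginx served a page.

```python
import socket


def is_listening(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False
```

For this setup the call would be `is_listening("192.168.7.101", 80)`; after that, requesting the page a few times produces access log lines for logstash to pick up.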

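2. Modify the configuration on logstash host D

1. On the second logstash host (D), create a configuration file in /etc/logstash/conf.d. Remove or replace the earlier redis-es.conf, because by default logstash concatenates every *.conf file in this directory into a single pipeline.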

input {

file {

path => "/var/log/messages"

type => "message-7-101"

start_position => "beginning"

stat_interval => "2"

#codec => "json"

}

file {

path => "/var/log/nginx/access.log"

type => "nginx-accesslog-7-101"

start_position => "beginning"

stat_interval => "2"

codec => "json"

}

}

output {

if [type] == "message-7-101" {

redis {

host => "192.168.7.103"

port => "6379"

password => "123456"

db => "1"

key => "linux-7-101-key"

data_type => "list"

}

}

if [type] == "nginx-accesslog-7-101" {

redis {

host => "192.168.7.103"

port => "6379"

password => "123456"

db => "1"

key => "linux-nginxlog-7-101-key"

data_type => "list"

}

}

}
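The two `if [type] == ...` branches act as a routing table from event type to redis key. An event whose type matches neither branch (for example one still carrying the old `message-101` type) reaches no output at all and is silently dropped, so the type strings here and in the conditionals on host B must match exactly. The Python sketch mirrors that table; `ROUTES` and `route` are illustrative names, not logstash APIs.

```python
from typing import Optional

# Mirrors the output conditionals above: event type -> redis key.
ROUTES = {
    "message-7-101": "linux-7-101-key",
    "nginx-accesslog-7-101": "linux-nginxlog-7-101-key",
}


def route(event: dict) -> Optional[str]:
    """Return the redis key an event is pushed to, or None if no branch matches."""
    return ROUTES.get(event.get("type"))
```

A None result corresponds to an event that logstash reads but never writes anywhere.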

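2. Restart the logstash service on this host, and stop the logstash service on the first logstash host (B) so that the events stay in redis and can be inspected.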

[root@logstash-1 conf.d]# systemctl restart logstash

3. On the redis host, check whether logstash has shipped the data to redis

192.168.7.103:6379[1]> KEYS *

1) "linux-7-101-key" # system log

2) "linux-nginxlog-7-101-key" # nginx access log

3. Modify the configuration on logstash host B

1. In the /etc/logstash/conf.d directory, create a configuration file that reads the data from redis

input {

redis {

host => "192.168.7.103"

port => "6379"

password => "123456"

db => "1"

key => "linux-7-101-key" # must match the key used on the second logstash host (D)

data_type => "list"

}

redis {

host => "192.168.7.103"

port => "6379"

password => "123456"

db => "1"

key => "linux-nginxlog-7-101-key" # must match the key used on the second logstash host (D)

data_type => "list"

}

}

output {

if [type] == "message-7-101" { # must match the type set on the second logstash host (D)

elasticsearch {

hosts => ["192.168.7.100:9200"]

index => "message-7-101-%{+YYYY.MM.dd}"

}

}

if [type] == "nginx-accesslog-7-101" { # must match the type set on the second logstash host (D)

elasticsearch {

hosts => ["192.168.7.100:9200"]

index => "nginx-accesslog-7-101-%{+YYYY.MM.dd}"

}

}

}

2. Restart the logstash service

# systemctl restart logstash

3. Check the data on the redis host: it is now empty, because this logstash host has consumed it

192.168.7.103:6379[1]> KEYS *

(empty list or set)

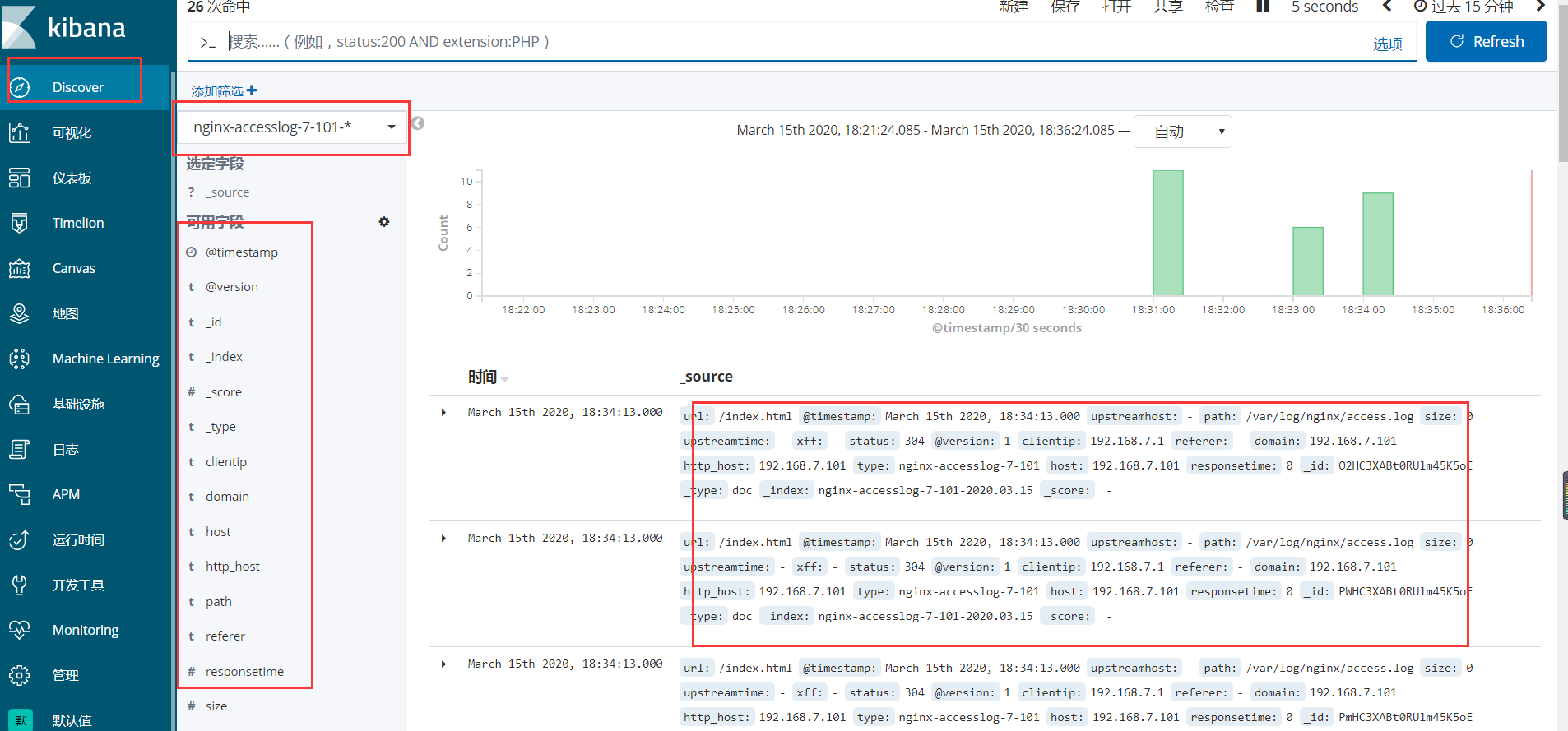

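4. Create the index patterns in the Kibana web UI

1. Create an index pattern for each of the two indices

2. View the events for the newly added index patterns on the Discover tab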
