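ELK Part 3: Merging multi-line Java logs, and collecting and processing Tomcat JSON logs

Hands-on 1: Collecting Tomcat server logs with Logstash

1. Configure the JDK environment

1. Unpack the JDK archive and create the symbolic links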

[root@tomcat-web1 src]# tar xvf jdk-8u212-linux-x64.tar.gz
[root@tomcat-web1 src]# ln -sv /usr/local/src/jdk1.8.0_212/ /usr/local/jdk
‘/usr/local/jdk/jdk1.8.0_212’ -> ‘/usr/local/src/jdk1.8.0_212/’
[root@tomcat-web1 src]# ln -sv /usr/local/jdk/bin/java /usr/bin

2. Configure the Java environment variables

[root@tomcat-web1 ~]# vim /etc/profile.d/jdk.sh    # define the environment variables
export HISTTIMEFORMAT="%F %T `whoami`"
export LANG="en_US.utf-8"
export JAVA_HOME=/usr/local/jdk
export CLASSPATH=.:$JAVA_HOME/jre/lib/rt.jar:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar
export PATH=$PATH:$JAVA_HOME/bin
[root@tomcat-web1 ~]# . /etc/profile.d/jdk.sh    # load the variables into the current shell

3. Check the Java version and the Java home directory

[root@tomcat-web1 src]# java -version
java version "1.8.0_212"
Java(TM) SE Runtime Environment (build 1.8.0_212-b10)
Java HotSpot(TM) 64-Bit Server VM (build 25.212-b10, mixed mode)
[root@tomcat-web1 src]# echo $JAVA_HOME
/usr/local/jdk

2. Configure and start the Tomcat service

1. Unpack the Tomcat archive and create the tomcat symbolic link

[root@tomcat-web1 ~]# mkdir /apps
[root@tomcat-web1 ~]# cd /apps/
[root@tomcat-web1 apps]# ls
apache-tomcat-8.5.42 apache-tomcat-8.5.42.tar.gz tomcat
[root@tomcat-web1 apps]# tar xvf apache-tomcat-8.5.42.tar.gz
[root@tomcat-web1 apps]# ln -s /apps/apache-tomcat-8.5.42 /apps/tomcat    # create the tomcat symbolic link

2. Start the Tomcat service

[root@tomcat-web1 apps]# /apps/tomcat/bin/startup.sh
Using CATALINA_BASE: /apps/tomcat
Using CATALINA_HOME: /apps/tomcat
Using CATALINA_TMPDIR: /apps/tomcat/temp
Using JRE_HOME: /usr/local/jdk
Using CLASSPATH: /apps/tomcat/bin/bootstrap.jar:/apps/tomcat/bin/tomcat-juli.jar
Tomcat started.

3. Set the path that serves the website

[root@tomcat-web1 apps]# vim /apps/tomcat/conf/server.xml
<Host name="localhost" appBase="/data/tomcat/tomcat_webdir"

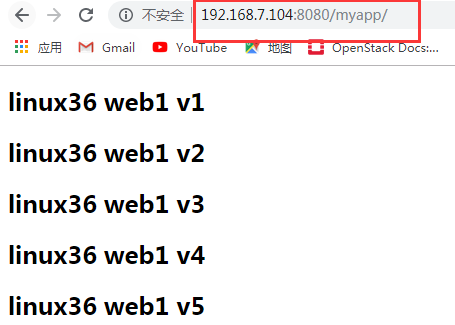

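4. Open the Tomcat page in a browser. This is the custom page created earlier; if it loads, the Tomcat service is working.

5. Edit the Tomcat configuration file /apps/tomcat/conf/server.xml so that the access log is written in JSON format. The resulting access log file is /apps/tomcat/logs/tomcat_access_log.2020-03-13.log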

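<!-- prefix and suffix rename the access log so that the file name ends in .log; pattern writes each entry as a JSON object -->
<Valve className="org.apache.catalina.valves.AccessLogValve" directory="logs"
       prefix="tomcat_access_log" suffix=".log"
       pattern="{&quot;clientip&quot;:&quot;%h&quot;,&quot;ClientUser&quot;:&quot;%l&quot;,&quot;authenticated&quot;:&quot;%u&quot;,&quot;AccessTime&quot;:&quot;%t&quot;,&quot;method&quot;:&quot;%r&quot;,&quot;status&quot;:&quot;%s&quot;,&quot;SendBytes&quot;:&quot;%b&quot;,&quot;Query?string&quot;:&quot;%q&quot;,&quot;partner&quot;:&quot;%{Referer}i&quot;,&quot;AgentVersion&quot;:&quot;%{User-Agent}i&quot;}"/>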
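Inside server.xml the double quotes of the JSON template have to be written as &quot;, because the whole template sits in an XML attribute. As a rough sketch (the helper name build_pattern is made up for this illustration and is not part of Tomcat), the escaped attribute value can be generated from the field list instead of being typed by hand:

```python
import html
import json

# JSON key -> AccessLogValve format code, copied from the Valve definition above.
FIELDS = {
    "clientip": "%h",
    "ClientUser": "%l",
    "authenticated": "%u",
    "AccessTime": "%t",
    "method": "%r",
    "status": "%s",
    "SendBytes": "%b",
    "Query?string": "%q",
    "partner": "%{Referer}i",
    "AgentVersion": "%{User-Agent}i",
}


def build_pattern(fields):
    """Return (raw JSON template, version that is safe inside an XML attribute).

    Hypothetical helper for illustration only.
    """
    # Compact separators keep the template on one line without extra spaces.
    raw = json.dumps(fields, separators=(",", ":"))
    # quote=True turns every double quote into &quot;.
    return raw, html.escape(raw, quote=True)


raw_pattern, xml_pattern = build_pattern(FIELDS)
print('pattern="%s"' % xml_pattern)
```

The printed line can be pasted into the Valve element; unescaping it with html.unescape gives back the plain JSON template, which is a quick way to check a hand-edited pattern.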

Look at the Tomcat log file /apps/tomcat/logs/tomcat_access_log.2020-03-13.log and confirm that the entries are valid JSON; an online JSON validator can be used for this.

[root@logstash ~]# tail -f /apps/tomcat/logs/tomcat_access_log.2020-03-13.log

{"clientip":"192.168.7.1","ClientUser":"-","authenticated":"-","AccessTime":"[13/Mar/2020:15:19:16 +0800]","method":"GET / HTTP/1.1","status":"404","SendBytes":"1078","Query?string":"","partner":"-","AgentVersion":"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36"}

{"clientip":"192.168.7.1","ClientUser":"-","authenticated":"-","AccessTime":"[13/Mar/2020:15:19:16 +0800]","method":"GET /favicon.ico HTTP/1.1","status":"404","SendBytes":"1078","Query?string":"","partner":"http://192.168.7.102:8080/","AgentVersion":"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36"}

{"clientip":"192.168.7.1","ClientUser":"-","authenticated":"-","AccessTime":"[13/Mar/2020:15:22:09 +0800]","method":"GET / HTTP/1.1","status":"404","SendBytes":"1078","Query?string":"","partner":"-","AgentVersion":"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36"}

{"clientip":"192.168.7.1","ClientUser":"-","authenticated":"-","AccessTime":"[13/Mar/2020:15:22:38 +0800]","method":"GET /myapp HTTP/1.1","status":"302","SendBytes":"-","Query?string":"","partner":"-","AgentVersion":"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36"}

{"clientip":"192.168.7.1","ClientUser":"-","authenticated":"-","AccessTime":"[13/Mar/2020:15:22:38 +0800]","method":"GET /myapp/ HTTP/1.1","status":"200","SendBytes":"14","Query?string":"","partner":"-","AgentVersion":"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36"}
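The JSON check can also be done locally instead of with an online validator. The following is a minimal sketch, assuming one JSON object per line as in the output above; check_line and EXPECTED_KEYS are names chosen for this example, and the sample line is copied from the log shown above:

```python
import json

# Keys defined in the Valve pattern; every access-log entry should carry all of them.
EXPECTED_KEYS = {
    "clientip", "ClientUser", "authenticated", "AccessTime", "method",
    "status", "SendBytes", "Query?string", "partner", "AgentVersion",
}


def check_line(line):
    """Return a list of problems found in one log line (empty list means OK)."""
    try:
        event = json.loads(line)
    except json.JSONDecodeError as exc:
        return ["not valid JSON: %s" % exc.msg]
    if not isinstance(event, dict):
        return ["not a JSON object"]
    missing = EXPECTED_KEYS - event.keys()
    if missing:
        return ["missing keys: %s" % ", ".join(sorted(missing))]
    return []


# One entry taken from the tail output above.
SAMPLE = (
    '{"clientip":"192.168.7.1","ClientUser":"-","authenticated":"-",'
    '"AccessTime":"[13/Mar/2020:15:22:38 +0800]","method":"GET /myapp/ HTTP/1.1",'
    '"status":"200","SendBytes":"14","Query?string":"","partner":"-",'
    '"AgentVersion":"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 '
    '(KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36"}'
)

print(check_line(SAMPLE))        # complete entry
print(check_line(SAMPLE[:40]))   # truncated entry, no longer parseable
```

To check a whole file, the same function can be applied to each line read from /apps/tomcat/logs/tomcat_access_log.2020-03-13.log.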

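3. Collect the Tomcat log files

Change the user in the Logstash systemd unit to root; otherwise Logstash cannot read the Tomcat log files and nothing is collected.

[root@logstash conf.d]# vim /etc/systemd/system/logstash.service
User=root
Group=root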

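Reload systemd so that the edited unit file is picked up, then restart the Logstash service

[root@logstash conf.d]# systemctl daemon-reload
[root@logstash conf.d]# systemctl restart logstash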

Create the configuration file tomcat-java-log.conf in the /etc/logstash/conf.d directory:

input {
  file {
    path => "/var/log/logstash/logstash-plain.log"    # Java log file to collect
    start_position => "beginning"
    stat_interval => 3
    type => "java-log"
  }
  file {
    path => "/apps/tomcat/logs/tomcat_access_log.*.log"    # Tomcat access log files to collect
    start_position => "beginning"
    stat_interval => 3
    type => "tomcat-access-log"
    codec => "json"    # decode each line of the Tomcat log as JSON
  }
}

output {
  if [type] == "java-log" {
    elasticsearch {
      hosts => ["192.168.7.100:9200"]
      index => "javalog-7-102-%{+YYYY.MM.dd}"
    }
  }
  if [type] == "tomcat-access-log" {
    elasticsearch {
      hosts => ["192.168.7.100:9200"]
      index => "tomcat-access-log-7-102-%{+YYYY.MM.dd}"
    }
  }
}
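The output section routes each event by its type field and writes it to a daily index. The %{+YYYY.MM.dd} suffix is filled in from the event's @timestamp, which Logstash keeps in UTC, so the date in the index name can differ from the local date around midnight. The sketch below imitates that naming rule in plain Python; index_for and INDEX_PREFIX are illustrative names, not Logstash APIs. Since this configuration has no date filter, @timestamp is the time Logstash read the line, not the AccessTime value inside the log entry.

```python
from datetime import datetime, timedelta, timezone

# type value -> index prefix, taken from the output section above.
INDEX_PREFIX = {
    "java-log": "javalog-7-102-",
    "tomcat-access-log": "tomcat-access-log-7-102-",
}


def index_for(event_type, timestamp):
    """Return the daily index name for an event, or None if no branch matches.

    `timestamp` must be timezone-aware; it is converted to UTC before
    formatting, mirroring how the date suffix is derived from @timestamp.
    """
    prefix = INDEX_PREFIX.get(event_type)
    if prefix is None:
        return None
    if timestamp.tzinfo is None:
        raise ValueError("timestamp must be timezone-aware")
    return prefix + timestamp.astimezone(timezone.utc).strftime("%Y.%m.%d")


cst = timezone(timedelta(hours=8))  # the +0800 offset seen in the access log

# 15:19:16 +0800 is 07:19:16 UTC on the same day.
print(index_for("tomcat-access-log", datetime(2020, 3, 13, 15, 19, 16, tzinfo=cst)))

# 07:30 +0800 on 14 March is still 23:30 UTC on 13 March.
print(index_for("java-log", datetime(2020, 3, 14, 7, 30, tzinfo=cst)))
```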

Create the index pattern for the Tomcat log in Kibana

In the Discover tab, view the Tomcat log entries that have been added

4. Collect the Java log file

1. Create a java.conf file in the /etc/logstash/conf.d/ directory and edit it so that the Logstash log file is shipped to the Elasticsearch host.

input {
  file {
    path => "/var/log/logstash/logstash-plain.log"
    start_position => "beginning"
    stat_interval => 3
    type => "java-log"
  }
}

output {
  if [type] == "java-log" {
    elasticsearch {
      hosts => ["192.168.7.100:9200"]
      index => "javalog-7-102-%{+YYYY.MM.dd}"
    }
  }
}

2. Restart the Logstash service and watch it start up

# systemctl restart logstash

The startup progress of Logstash can be followed in /var/log/logstash/logstash-plain.log.

[root@logstash conf.d]# tail -f /var/log/logstash/logstash-plain.log

[2020-03-13T15:49:04,872][INFO ][logstash.outputs.elasticsearch] New Elasticsearch output {:class=>"LogStash::Outputs::ElasticSearch", :hosts=>["//192.168.7.100:9200"]}

[2020-03-13T15:49:11,231][INFO ][logstash.inputs.file ] No sincedb_path set, generating one based on the "path" setting {:sincedb_path=>"/var/lib/logstash/plugins/inputs/file/.sincedb_7d5605c109b000fd1e6e680ae503330d", :path=>["/var/log/logstash/logstash-plain.log"]}

[2020-03-13T15:49:11,291][INFO ][logstash.inputs.file ] No sincedb_path set, generating one based on the "path" setting {:sincedb_path=>"/var/lib/logstash/plugins/inputs/file/.sincedb_452905a167cf4509fd08acb964fdb20c", :path=>["/var/log/messages"]}

[2020-03-13T15:49:11,297][INFO ][logstash.inputs.file ] No sincedb_path set, generating one based on the "path" setting {:sincedb_path=>"/var/lib/logstash/plugins/inputs/file/.sincedb_d883144359d3b4f516b37dba51fab2a2", :path=>["/var/log/nginx/access.log"]}

[2020-03-13T15:49:11,387][INFO ][logstash.pipeline ] Pipeline started successfully {:pipeline_id=>"main", :thread=>"#<Thread:0x35cee74 run>"}

[2020-03-13T15:49:11,453][INFO ][filewatch.observingtail ] START, creating Discoverer, Watch with file and sincedb collections

[2020-03-13T15:49:11,456][INFO ][filewatch.observingtail ] START, creating Discoverer, Watch with file and sincedb collections

[2020-03-13T15:49:11,478][INFO ][filewatch.observingtail ] START, creating Discoverer, Watch with file and sincedb collections

[2020-03-13T15:49:11,622][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[2020-03-13T15:49:12,874][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600} # this line means that Logstash has finished starting
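Instead of reading the tail output by eye, the startup message can be searched for in the log. The sketch below assumes the line layout seen above, [timestamp][LEVEL][logger] message; the regular expression and the function names are written for this example and are not part of Logstash:

```python
import re

# [timestamp][LEVEL ][logger ] message, as in the log excerpt above.
LINE_RE = re.compile(
    r"^\[(?P<time>[^\]]+)\]"
    r"\[(?P<level>[A-Z]+)\s*\]"
    r"\[(?P<logger>[^\]]+?)\s*\]"
    r"\s?(?P<message>.*)$"
)

STARTED = "Successfully started Logstash API endpoint"


def parse_line(line):
    """Split one log line into its fields; return None if it does not match."""
    match = LINE_RE.match(line)
    return match.groupdict() if match else None


def api_started(lines):
    """Return True if any line reports that the API endpoint is up."""
    for line in lines:
        fields = parse_line(line)
        if fields and STARTED in fields["message"]:
            return True
    return False


# Two lines copied from the log excerpt above.
LOG = [
    '[2020-03-13T15:49:11,622][INFO ][logstash.agent ] Pipelines running '
    '{:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}',
    '[2020-03-13T15:49:12,874][INFO ][logstash.agent ] Successfully started '
    'Logstash API endpoint {:port=>9600}',
]

print(parse_line(LOG[1]))
print(api_started(LOG))
```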

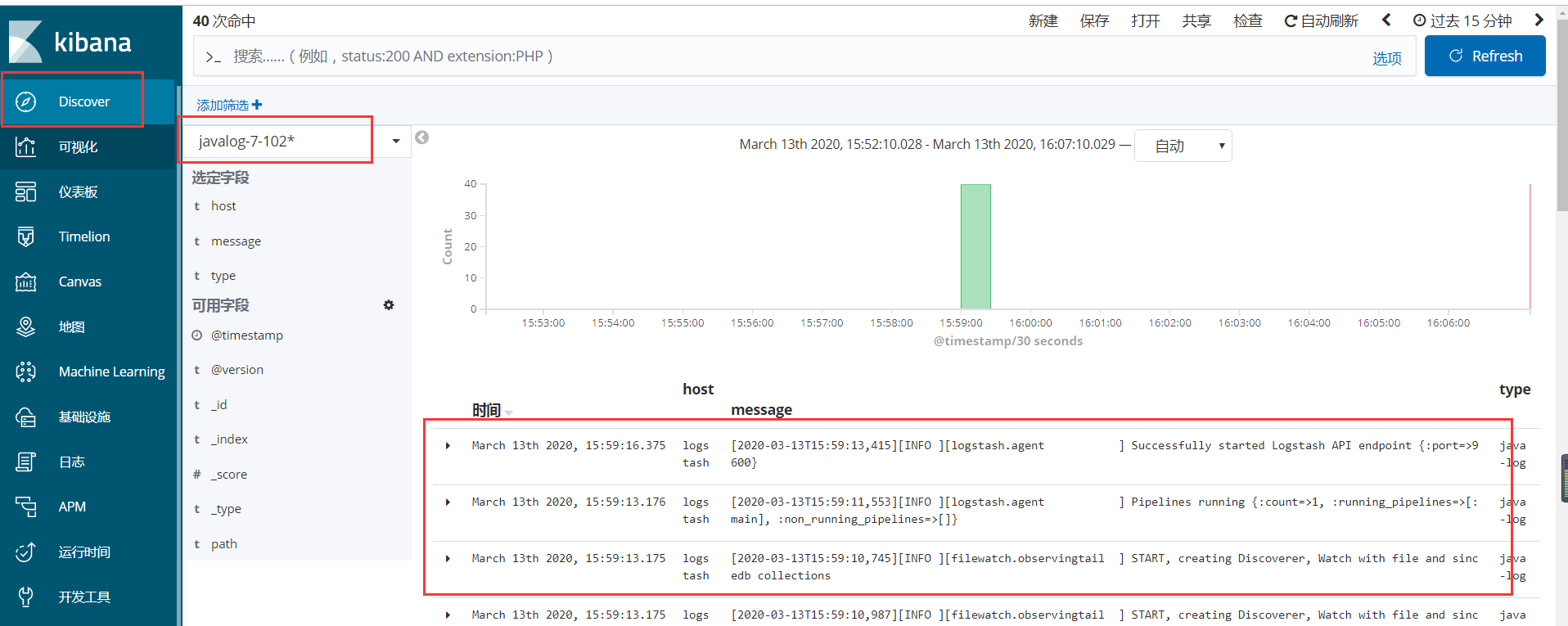

3. Add the Java log index pattern in the Kibana console

4. The javalog entries can now be seen in the Discover tab.

5. Merging multi-line logs with the multiline plugin (key topic)

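The multiline codec is available in Logstash 5.5 and is still bundled with 6.x. Multi-line entries are not merged automatically in either version, so the codec has to be configured explicitly.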

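Official documentation: https://www.elastic.co/guide/en/logstash/current/plugins-codecs-multiline.html

Introduction to multiline

pattern: the regular expression that decides where merging starts

negate: true/false; with false, the lines that match pattern are merged, with true, the lines that do not match are merged

what: previous/next; whether a merged line is attached to the previous event or to the next one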

input {
  file {
    path => "/var/log/logstash/logstash-plain.log"    # log file to collect
    start_position => "beginning"
    codec => multiline {
      pattern => "^\["    # a line beginning with [ starts a new event
      negate => true
      what => "previous"
    }
  }
}

output {    # send the events to the Elasticsearch host
  elasticsearch {
    hosts => ["192.168.7.100:9200"]
    index => "logstash-log-7-100-%{+YYYY.MM.dd}"
  }
}
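With pattern "^\[", negate true and what previous, every line that does not begin with [ is appended to the event before it, which keeps a Java stack trace together with the log line that produced it. The following Python sketch imitates that grouping rule to make the behaviour concrete. It is not how the codec is implemented, merge_multiline is a name invented here, and the two stack-trace lines in the sample are made up for the demonstration:

```python
import re


def merge_multiline(lines, pattern, negate=True, what="previous"):
    """Group raw lines into events following the 'previous' merging rule."""
    if what != "previous":
        raise NotImplementedError("this sketch only models what => previous")
    regex = re.compile(pattern)
    events = []
    for line in lines:
        matched = regex.search(line) is not None
        # negate => true: lines that do NOT match the pattern are continuations.
        # negate => false: lines that DO match the pattern are continuations.
        is_continuation = (not matched) if negate else matched
        if is_continuation and events:
            events[-1] += "\n" + line
        else:
            events.append(line)
    return events


RAW = [
    "[2020-03-13T15:49:11,387][INFO ][logstash.pipeline ] Pipeline started successfully",
    "java.lang.IllegalStateException: example failure",   # made-up continuation line
    "\tat com.example.Demo.main(Demo.java:5)",            # made-up continuation line
    "[2020-03-13T15:49:12,874][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}",
]

for number, event in enumerate(merge_multiline(RAW, r"^\["), start=1):
    print("event %d has %d line(s)" % (number, event.count("\n") + 1))
```

The four raw lines collapse into two events: the first carries the log line plus both stack-trace lines, the second is the final log line on its own.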
