ETCD 集群的备份和恢复

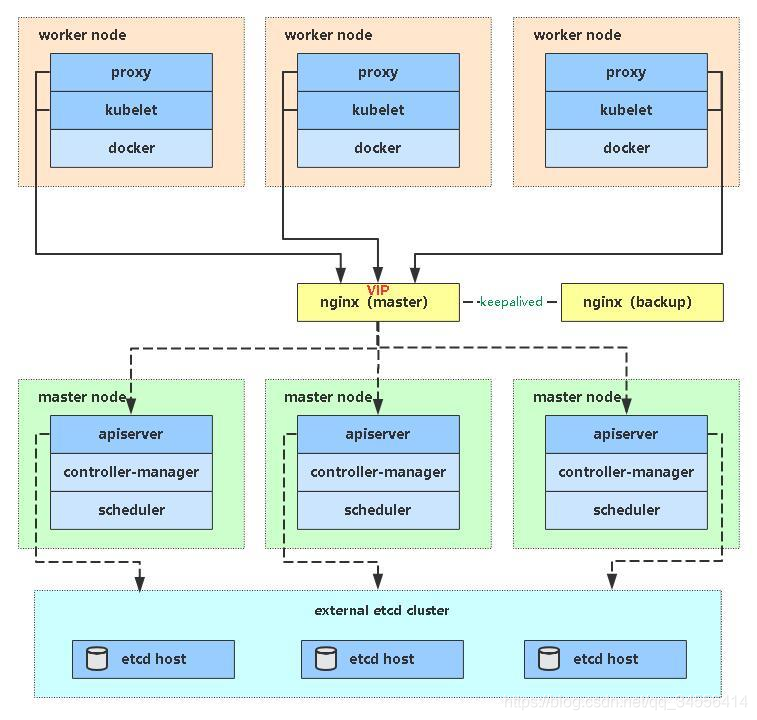

Kubernetes 高可用方案

- Etcd高可用

- kube-apiserver高可用

- kube-controller-manager与kube-scheduler高可用

- CoreDNS高可用

Kubernetes 使用 Etcd 数据库实时存储集群中的数据,安全起见,一定要备份

Etcd v3版本是主流版本,2版本也有很多在用,etcdctl对v3 v2两个版本都支持,在备份的时候需要指定默认的话是v2版本,所以在备份v3版本需要声明一下

snapshot save snap.db

将当前etcd存储的数据备份到文件当中

恢复是从上面备份的文件当中给恢复回去

Etcd数据库备份与恢复

Kubernetes 使用 Etcd 数据库实时存储集群中的数据,安全起见,一定要备份!

查看集群状态

[root@k8s-master ~]# /opt/etcd/bin/etcdctl --help

--cacert="" verify certificates of TLS-enabled secure servers using this CA bundle

--cert="" identify secure client using this TLS certificate file

--key="" identify secure client using this TLS key file

--endpoints=[127.0.0.1:2379] gRPC endpoints

[root@k8s-master ~]# ETCDCTL_API=3 /opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.179.99:2379,https://192.168.179.100:2379,https://192.168.179.101:2379" member list

1cd5f52adf869d89, started, etcd-1, https://192.168.179.99:2380, https://192.168.179.99:2379, false

55857deef69d787b, started, etcd-2, https://192.168.179.100:2380, https://192.168.179.100:2379, false

8bcf42695ccd8d89, started, etcd-3, https://192.168.179.101:2380, https://192.168.179.101:2379, false

[root@k8s-master ~]# ETCDCTL_API=3 /opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.179.99:2379,https://192.168.179.100:2379,https://192.168.179.101:2379" endpoint health

https://192.168.179.100:2379 is healthy: successfully committed proposal: took = 33.373965ms

https://192.168.179.101:2379 is healthy: successfully committed proposal: took = 41.146436ms

https://192.168.179.99:2379 is healthy: successfully committed proposal: took = 41.593452ms这三个节点的信息是相互同步的,要去备份只需要备份一个节点就行了,连接其中一个节点备份就行。

ETCDCTL_API=3 /opt/etcd/bin/etcdctl \

snapshot save snap.db \

--endpoints=https://192.168.179.99:2379 \

--cacert=/opt/etcd/ssl/ca.pem \

--cert=/opt/etcd/ssl/server.pem \

--key=/opt/etcd/ssl/server-key.pem

[root@k8s-master ~]# ETCDCTL_API=3 etcdctl \

> snapshot save snap.db \

> --endpoints=https://192.168.179.99:2379 \

> --cacert=/opt/etcd/ssl/ca.pem \

> --cert=/opt/etcd/ssl/server.pem \

> --key=/opt/etcd/ssl/server-key.pem

{"level":"info","ts":1608451206.8816888,"caller":"snapshot/v3_snapshot.go:119","msg":"created temporary db file","path":"snap.db.part"}

{"level":"info","ts":"2020-12-20T16:00:06.895+0800","caller":"clientv3/maintenance.go:200","msg":"opened snapshot stream; downloading"}

{"level":"info","ts":1608451206.8958433,"caller":"snapshot/v3_snapshot.go:127","msg":"fetching snapshot","endpoint":"https://192.168.179.99:2379"}

{"level":"info","ts":"2020-12-20T16:00:07.222+0800","caller":"clientv3/maintenance.go:208","msg":"completed snapshot read; closing"}

{"level":"info","ts":1608451207.239597,"caller":"snapshot/v3_snapshot.go:142","msg":"fetched snapshot","endpoint":"https://192.168.179.99:2379","size":"3.4 MB","took":0.357763211}

{"level":"info","ts":1608451207.2398226,"caller":"snapshot/v3_snapshot.go:152","msg":"saved","path":"snap.db"}

Snapshot saved at snap.db

[root@k8s-master ~]# ll /opt/etcd/ssl/

total 16

-rw------- 1 root root 1679 Sep 15 11:37 ca-key.pem

-rw-r--r-- 1 root root 1265 Sep 15 11:37 ca.pem

-rw------- 1 root root 1675 Sep 15 11:37 server-key.pem

-rw-r--r-- 1 root root 1338 Sep 15 11:37 server.pem[root@k8s-master ~]# kubectl get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

kubia 3/3 3 3 142d

[root@k8s-master ~]# kubectl create deployment nginx --image=nginx

deployment.apps/nginx created

[root@k8s-master ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

kubia-859d757f8c-74g6s 1/1 Running 0 142d

kubia-859d757f8c-97znt 1/1 Running 0 142d

kubia-859d757f8c-9mjf9 1/1 Running 0 142d

nginx-f89759699-jttrw 1/1 Running 0 49s

现在需要恢复了,对所有的etcd节点都做暂停。如果是多master那么上面apisrevr都要停止

1.先暂停kube-apiserver和etcd

[root@k8s-master ~]# systemctl stop kube-apiserver

[root@k8s-master ~]# systemctl stop etcd

[root@k8s-node1 ~]# systemctl stop etcd

[root@k8s-node2 ~]# systemctl stop etcd2.在每个节点上恢复

先来看看我的配置

[root@k8s-master ~]# cat /opt/etcd/cfg/etcd.conf

#[Member]

ETCD_NAME="etcd-1"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.179.99:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.179.99:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.179.99:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.179.99:2379"

ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.179.99:2380,etcd-2=https://192.168.179.100:2380,etcd-3=https://192.168.179.101:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"在第一个节点恢复

ETCDCTL_API=3 etcdctl snapshot restore /root/snap.db \

--name etcd-1 \

--initial-cluster="etcd-1=https://192.168.179.99:2380,etcd-2=https://192.168.179.100:2380,etcd-3=https://192.168.179.101:2380" \

--initial-cluster-token=etcd-cluster \

--initial-advertise-peer-urls=https://192.168.179.99:2380 \

--data-dir=/var/lib/etcd/default.etcd

--name etcd-1 \ #需要修改为当前节点名称

--initial-advertise-peer-urls=https://192.168.179.99:2380 \ #当前节点IP

[root@k8s-master ~]# ETCDCTL_API=3 etcdctl snapshot restore /root/snap.db \

> --name etcd-1 \

> --initial-cluster="etcd-1=https://192.168.179.99:2380,etcd-2=https://192.168.179.100:2380,etcd-3=https://192.168.179.101:2380" \

> --initial-cluster-token=etcd-cluster \

> --initial-advertise-peer-urls=https://192.168.179.99:2380 \

> --data-dir=/var/lib/etcd/default.etcd

{"level":"info","ts":1608453271.6452653,"caller":"snapshot/v3_snapshot.go:296","msg":"restoring snapshot","path":"/root/snap.db","wal-dir":"/var/lib/etcd/default.etcd/member/wal","data-dir":"/var/lib/etcd/default.etcd","snap-dir":"/var/lib/etcd/default.etcd/member/snap"}

{"level":"info","ts":1608453271.7769744,"caller":"mvcc/kvstore.go:380","msg":"restored last compact revision","meta-bucket-name":"meta","meta-bucket-name-key":"finishedCompactRev","restored-compact-revision":93208}

{"level":"info","ts":1608453271.8183022,"caller":"membership/cluster.go:392","msg":"added member","cluster-id":"1b21d5d68d61885a","local-member-id":"0","added-peer-id":"1cd5f52adf869d89","added-peer-peer-urls":["https://192.168.179.99:2380"]}

{"level":"info","ts":1608453271.8184474,"caller":"membership/cluster.go:392","msg":"added member","cluster-id":"1b21d5d68d61885a","local-member-id":"0","added-peer-id":"55857deef69d787b","added-peer-peer-urls":["https://192.168.179.100:2380"]}

{"level":"info","ts":1608453271.818473,"caller":"membership/cluster.go:392","msg":"added member","cluster-id":"1b21d5d68d61885a","local-member-id":"0","added-peer-id":"8bcf42695ccd8d89","added-peer-peer-urls":["https://192.168.179.101:2380"]}

{"level":"info","ts":1608453271.8290143,"caller":"snapshot/v3_snapshot.go:309","msg":"restored snapshot","path":"/root/snap.db","wal-dir":"/var/lib/etcd/default.etcd/member/wal","data-dir":"/var/lib/etcd/default.etcd","snap-dir":"/var/lib/etcd/default.etcd/member/snap"}

[root@k8s-master ~]# ls /var/lib/etcd/

default.etcd default.etcd.bak拷贝到其他节点,再去恢复

[root@k8s-master ~]# scp snap.db root@192.168.179.100:~

root@192.168.179.100's password:

snap.db 100% 3296KB 15.4MB/s 00:00

[root@k8s-master ~]# scp snap.db root@192.168.179.101:~

root@192.168.179.101's password:

snap.db 在二节点恢复

[root@k8s-node1 ~]# ls /var/lib/etcd/

default.etcd.bak

ETCDCTL_API=3 etcdctl snapshot restore /root/snap.db \

--name etcd-2 \

--initial-cluster="etcd-1=https://192.168.179.99:2380,etcd-2=https://192.168.179.100:2380,etcd-3=https://192.168.179.101:2380" \

--initial-cluster-token=etcd-cluster \

--initial-advertise-peer-urls=https://192.168.179.100:2380 \

--data-dir=/var/lib/etcd/default.etcd

[root@k8s-node1 ~]# ls /var/lib/etcd/

default.etcd default.etcd.bak在三节点恢复

ETCDCTL_API=3 etcdctl snapshot restore /root/snap.db \

--name etcd-3 \

--initial-cluster="etcd-1=https://192.168.179.99:2380,etcd-2=https://192.168.179.100:2380,etcd-3=https://192.168.179.101:2380" \

--initial-cluster-token=etcd-cluster \

--initial-advertise-peer-urls=https://192.168.179.101:2380 \

--data-dir=/var/lib/etcd/default.etcd现在恢复成功,下面将服务启动

[root@k8s-master ~]# systemctl start kube-apiserver

[root@k8s-master ~]# systemctl start etcd

[root@k8s-node1 ~]# systemctl start etcd

[root@k8s-node2 ~]# systemctl start etcd启动完看看集群是否正常

[root@k8s-master ~]# ETCDCTL_API=3 etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.179.99:2379,https://192.168.179.100:2379,https://192.168.179.101:2379" endpoint health

https://192.168.179.100:2379 is healthy: successfully committed proposal: took = 25.946686ms

https://192.168.179.99:2379 is healthy: successfully committed proposal: took = 27.290324ms

https://192.168.179.101:2379 is healthy: successfully committed proposal: took = 30.621904ms[root@k8s-master ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

kubia-859d757f8c-74g6s 1/1 Running 0 142d

kubia-859d757f8c-97znt 1/1 Running 0 142d

kubia-859d757f8c-9mjf9 1/1 Running 0 142d可以看到之前的nginx消失了,即数据恢复成功

之前备份是找了其中一个节点去备份的,找任意节点去备份都行,但是建议找两个节点去备份,如果其中一个节点挂了,那么备份就会失败了。

注意在每个节点进行恢复,一个是恢复数据,一个是重塑身份

目录 返回

首页